What is GPT-4o? The Ultimate Guide to Real-Time AI

Introduction: The Next Evolution of Conversational AI

For years, artificial intelligence has promised fluid, human-like interaction, where the gap between human input and machine response feels negligible. In May 2024, OpenAI took a major step toward that goal with the release of GPT-4o.

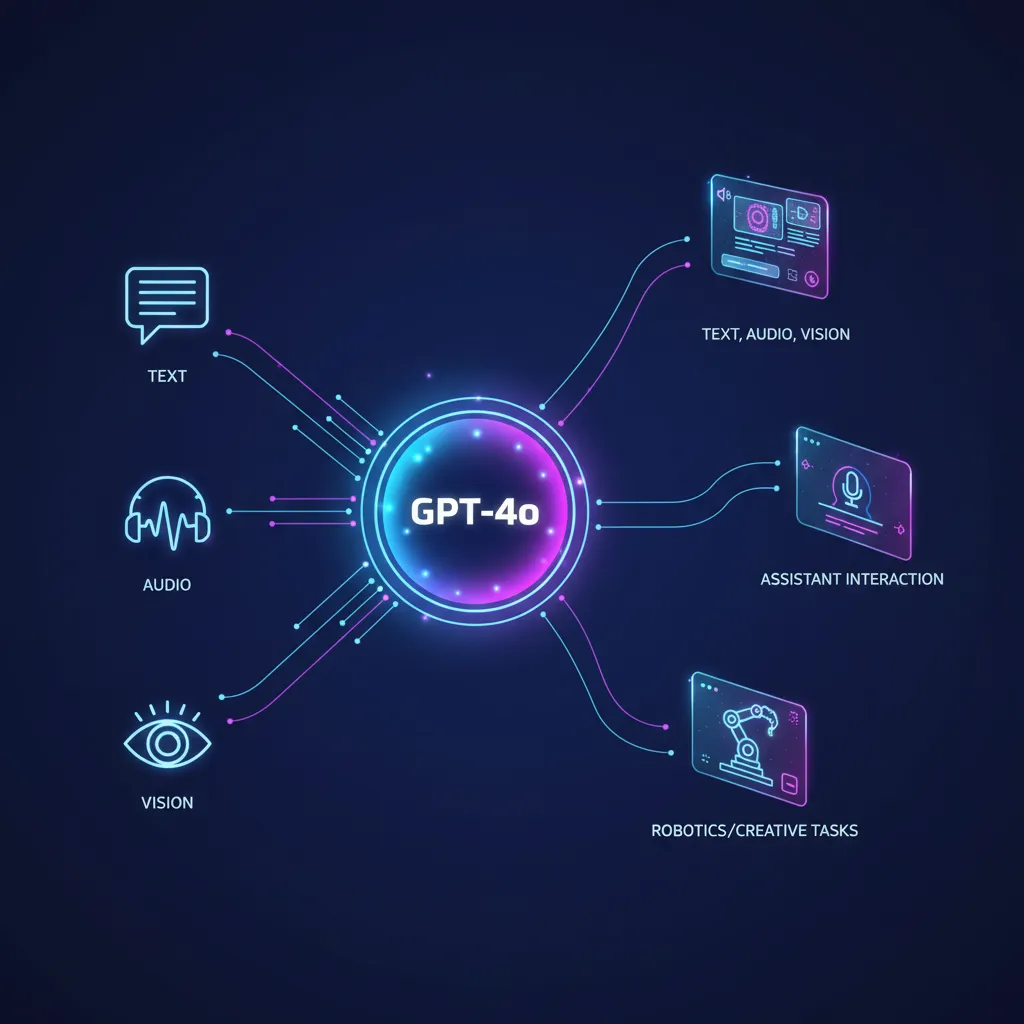

So, what is GPT-4o? It is not merely an incremental update; it is a fundamental architectural shift that redefines the capabilities of an intelligent assistant. GPT-4o, where the “o” stands for “omni,” is the first native omni-model AI from OpenAI, meaning it can process and generate content across text, audio, and vision inputs and outputs seamlessly within a single neural network.

This design unlocks a step change in speed and responsiveness. Imagine an AI voice assistant that can detect your emotional tone, be interrupted mid-sentence, and translate languages in real time with latency close to that of human conversation. That is the core promise of a real-time AI assistant powered by GPT-4o.

This guide breaks down OpenAI’s new model: its key features, how it differs from its predecessor GPT-4, how to use it, and what it implies for the future of AI and everyday life. By the end, you will understand why GPT-4o marks an important step in AI technology and conversational design.

The Dawn of the Omni-Model: Understanding GPT-4o’s Architecture

The significance of GPT-4o lies in its architecture. Before GPT-4o, voice interaction with models like GPT-4 ran through a pipeline of separate models. A spoken command was first transcribed by an automatic speech recognition (ASR) model, then processed as text by the LLM (GPT-4), and finally synthesized back into speech by a text-to-speech (TTS) model. Each handoff added latency, and because the LLM only ever saw text, nuance such as tone of voice was lost along the way.
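To make the cost of that pipeline concrete, here is a minimal toy sketch in Python. It is not OpenAI's implementation: the `Utterance` type, the helper names, and the per-stage latencies are all hypothetical placeholders chosen for illustration. It only shows two things the paragraph above describes: stage latencies add up, and a transcription step passes on words but not tone.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """A toy stand-in for spoken input: the words plus how they were said."""
    words: str
    tone: str  # carried by the audio signal, not by the transcript


# Hypothetical per-stage latencies in milliseconds (placeholders, not measurements).
CASCADE_STAGES_MS = {
    "speech_to_text": 1000,
    "language_model": 3000,
    "text_to_speech": 1400,
}


def cascaded_input(utterance: Utterance) -> dict:
    """What the language model receives in a cascade: the transcript only."""
    return {"words": utterance.words}


def native_input(utterance: Utterance) -> dict:
    """What a single multimodal model could use: words and tone together."""
    return {"words": utterance.words, "tone": utterance.tone}


def cascade_latency_ms(stages: dict) -> int:
    """Stages run one after another, so their latencies add up."""
    return sum(stages.values())


spoken = Utterance(words="my build is failing again", tone="frustrated")
print(cascaded_input(spoken))
print(native_input(spoken))
print(cascade_latency_ms(CASCADE_STAGES_MS), "ms end to end")
```

The point of the sketch is structural: no matter how good each stage is, the language model in the cascade never sees the `tone` field, and the total delay can never be lower than the sum of the stages.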

What the ‘O’ Stands For: Omnimodel Architecture

GPT-4o changes this by being a single, natively multimodal AI model. The “o” for “omni” refers to this unified approach: text, audio, and visual inputs are all treated as native data types by the same neural network.

This single-model approach has critical consequences for performance:

- Reduced Latency: The processing time is drastically cut because there are no handoffs between different specialized models. This allows GPT-4o to respond to audio input in as little as 232 milliseconds (ms), with an average response time of 320 ms—a speed comparable to human conversation.

- Increased Cohesion: Since the model processes everything simultaneously, it can better understand context and emotional cues. For example, it can interpret the tone of your voice (excited, confused, urgent) and factor that into its text response, leading to much more natural and empathetic interactions.

- Superior Performance Across Modalities: In reported head-to-head testing, GPT-4o showed significant improvements in non-English language performance, speed of vision analysis, and quality of audio output.

Performance Revolution: Latency and Speed

The speed of interaction is perhaps the most transformative of the GPT-4o features. Latency reduction is the key ingredient in creating a genuinely useful conversational AI.

Compared with the previous voice pipeline:

- GPT-4 (Audio Pipeline): Voice responses took 5.4 seconds on average, reflecting the separate transcription, reasoning, and synthesis steps.

- GPT-4o (Omni-Model): Audio responses arrive in as little as 232 ms, with an average of 320 ms.

This speed not only makes the AI voice assistant feel more seamless but also opens the door to complex, real-time applications, such as live coaching, simultaneous translation, and immediate technical support without the frustrating pauses traditionally associated with AI interaction.
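For developers who want to check whether their own application stays inside a conversational budget, a simple approach is to time how long the first piece of a response takes to arrive. The sketch below is a generic helper, not part of any OpenAI library: `time_to_first_chunk`, `fake_stream`, and the budget constant are illustrative names, and the fake stream stands in for whatever streaming response your application consumes.

```python
import time
from typing import Iterable, Iterator, Tuple

# Average audio response time quoted for GPT-4o, used here as a target budget.
CONVERSATIONAL_BUDGET_S = 0.320


def time_to_first_chunk(stream: Iterable[str]) -> Tuple[float, str]:
    """Return (seconds until the first chunk arrived, the first chunk)."""
    start = time.perf_counter()
    first = next(iter(stream))
    return time.perf_counter() - start, first


def fake_stream(delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming response with a startup delay."""
    time.sleep(delay_s)
    yield "Hello"
    yield " there"


latency_s, chunk = time_to_first_chunk(fake_stream(0.05))
within_budget = latency_s <= CONVERSATIONAL_BUDGET_S
print(f"first chunk {chunk!r} after {latency_s * 1000:.0f} ms, within budget: {within_budget}")
```

Measuring the first chunk rather than the full response matters because perceived responsiveness depends on when the reply starts, not when it finishes.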

GPT-4o Features: The Core of the Real-Time AI Assistant

The core appeal of GPT-4o lies in its versatility and deep integration of various senses. It is designed to be an indispensable co-pilot across digital and physical tasks.

Unprecedented Multimodal AI Model Capabilities

As a true multimodal AI model, GPT-4o can handle inputs and outputs in any combination of text, audio, and vision, making the interaction feel less like talking to a machine and more like collaborating with an extremely knowledgeable partner.

| Modality | GPT-4o Capability | Real-World Impact |
|---|---|---|
| Vision | Real-time image analysis, graph interpretation, live video feed understanding. | Summarizing complex charts, explaining code screenshots, assisting the visually impaired. |
| Audio | Emotion detection, natural voice synthesis (with multiple tones/styles), interruptibility. | Fluid, emotional, and responsive AI voice assistant interactions, superior to any previous model. |
| Text | Matches GPT-4 Turbo performance on text-only tasks, excelling in reasoning and coding. | High-quality AI text generation, complex problem-solving, and precise summarization. |

[Related: https://hyperdaily.one/blog/gpt-4o-multimodal-ai-revolution-is-here/]

The Power of Visual Understanding

The AI vision capabilities of GPT-4o are far beyond simple image tagging. The model can process an image you show it and immediately understand the context.

Examples of Vision Capabilities from the GPT-4o demo:

- Mathematical Assistance: You show GPT-4o a math problem written on a piece of paper, and it guides you through the solution step by step without giving away the final answer immediately.

- Code Debugging: You take a photo of an error message on a terminal, and the model explains the error and suggests fixes.

- Emotional Analysis: You show the model your face, and it comments on your mood or facial expression in real time.
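The code-debugging scenario can be approximated through the API by sending a screenshot alongside a question. The following is a minimal sketch that only builds the request body, assuming the Chat Completions message format with `image_url` content parts and the model name `gpt-4o`. The helper name `build_vision_payload` and the placeholder image bytes are illustrative, and nothing is sent over the network.

```python
import base64
import json


def build_vision_payload(image_bytes: bytes, question: str, mime: str = "image/png") -> dict:
    """Build a chat request body that pairs a question with an inline image.

    The image is embedded as a base64 data URL, so no file hosting is needed.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Placeholder bytes; in practice, read them from a real screenshot file.
screenshot = b"not-a-real-png"
payload = build_vision_payload(screenshot, "What does this terminal error mean?")
print(json.dumps(payload, indent=2)[:200])
```

Keeping payload construction separate from the network call makes it easy to log, inspect, and unit-test what is sent before any tokens are billed.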

Real-Time AI Voice Assistant Interactions

The improvements to the AI voice assistant interface are perhaps the most compelling showcase of GPT-4o capabilities. The responsiveness is transformative. Users can interrupt the model mid-sentence, shift the topic, or ask follow-up questions without waiting for the model to finish its previous thought.

This interruptibility and low latency fundamentally solve the “turn-taking” problem that plagues traditional digital assistants like Siri or Alexa. When you combine this with the model’s ability to inject human-like emotion and tone into its synthesized voice, the resulting interaction is profoundly more engaging and less robotic.

Real-Time Translation

One of the most impressive GPT-4o use cases demonstrated was its ability to act as a real-time translator between two people speaking different languages. Because the model processes audio so quickly and handles many languages, it can listen to a sentence in Italian and immediately produce a translation in English (and vice versa) with a natural, human cadence. This elevates the model beyond a simple tool to a genuine facilitator of global communication.

GPT-4o vs GPT-4: A Generational Leap

Understanding the jump from GPT-4 to GPT-4o is crucial for appreciating the significance of OpenAI’s new model. While GPT-4 was a marvel of intelligence, it was often bottlenecked by its speed and cost structure, especially when processing complex multimodal inputs.

GPT-4o offers improvements across the board, making it faster, cheaper, and smarter, particularly in time-sensitive tasks.

Key Differences in Performance

| Feature | GPT-4 | GPT-4o (Omni) | Advantage |
|---|---|---|---|
| Input Modality | Handled separately (ASR -> LLM -> TTS) | Single, natively multimodal network | Speed, Cohesion |
| Audio Latency | 5.4 seconds average | 0.32 seconds average | Real-Time Interaction |
| API Speed | Slower (varies) | 2x Faster than GPT-4 Turbo | Developer Productivity |
| API Cost | High | 50% Cheaper than GPT-4 Turbo | Accessibility, Scalability |
| Vision Performance | Good, but not real-time | Excellent, near-instantaneous analysis | Live Assistance |
| Multilingual Support | Strong | Superior (especially in lower-resource languages) | Global Reach |
| Free Access | Limited access for free users | Significantly more free ChatGPT access | Democratization of AI |

The GPT-4o vs GPT-4 debate settles quickly when speed and cost are considered. For developers, the fact that the GPT-4o API is half the price and twice the speed of GPT-4 Turbo is a massive accelerator for integrating sophisticated intelligent assistants into applications. For general users, the increased intelligence and reduced latency make the experience fundamentally better, moving the interaction from transactional to truly conversational.
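A quick worked calculation shows what these ratios mean in practice. The latency figures below come from the comparison table. The per-token price is a hypothetical placeholder, since only the 50% relationship is stated here, so substitute current list prices before relying on the totals.

```python
# Average voice latencies from the comparison table, in milliseconds.
GPT4_VOICE_LATENCY_MS = 5400
GPT4O_VOICE_LATENCY_MS = 320

# Hypothetical placeholder price per one million tokens (not a quoted price).
HYPOTHETICAL_TURBO_PRICE = 10.0
GPT4O_PRICE = HYPOTHETICAL_TURBO_PRICE * 0.5  # "50% cheaper than GPT-4 Turbo"


def latency_speedup(old_ms: int, new_ms: int) -> float:
    """How many times faster the new latency is than the old one."""
    return old_ms / new_ms


def token_cost(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given price per million."""
    return tokens / 1_000_000 * price_per_million


speedup = latency_speedup(GPT4_VOICE_LATENCY_MS, GPT4O_VOICE_LATENCY_MS)
monthly_tokens = 200_000_000  # illustrative workload
turbo_cost = token_cost(monthly_tokens, HYPOTHETICAL_TURBO_PRICE)
gpt4o_cost = token_cost(monthly_tokens, GPT4O_PRICE)

print(f"voice latency improvement: about {speedup:.1f}x")
print(f"monthly cost at placeholder prices: {turbo_cost:.0f} vs {gpt4o_cost:.0f}")
```

With the table's figures, the voice latency improvement works out to roughly 17x, which is far larger than the 2x API throughput gain, because it reflects removing whole pipeline stages rather than speeding up one model.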

[Related: https://hyperdaily.one/blog/gpt-4o-project-astra-multimodal-ai-comparison/]

Accessibility for All: Free ChatGPT Access and the Desktop App

One of the major strategic shifts associated with the GPT-4o launch was OpenAI’s commitment to democratizing access to its most powerful AI.

Free ChatGPT Access for Everyone

Previously, access to the most advanced models (like GPT-4 and GPT-4 Turbo) was limited to paid ChatGPT Plus subscribers. With the GPT-4o release, a substantial portion of its capabilities, including the core intelligence, vision, and text processing, has been rolled out to free-tier users.

While Plus subscribers still receive priority access, higher message limits, and early access to new GPT-4o features, the move to offer the base model’s intelligence for free ensures that this next-gen AI is accessible globally. This is crucial for education, developing countries, and small businesses looking to leverage high-quality AI text generation and reasoning capabilities.

The New ChatGPT Desktop App

To further enhance usability, OpenAI also announced a new ChatGPT desktop app for macOS (with Windows coming later). This application brings GPT-4o directly into the user’s operating system, allowing for seamless interactions that draw on the model’s vision capabilities.

Key benefits of the ChatGPT desktop app:

- System-Wide Access: Easily invoke the assistant using a keyboard shortcut.

- Screen Sharing: Users can take a screenshot and instantly ask GPT-4o to analyze, summarize, or explain the content on their screen. This is immensely useful for technical support, coding, or data analysis tasks.

- Instant Voice Mode: The app supports the low-latency, real-time voice and audio features of GPT-4o, making it the perfect conduit for the sophisticated AI voice assistant.

Real-World GPT-4o Use Cases and Applications

The enhanced GPT-4o capabilities are not just theoretical; they translate into powerful, practical applications across nearly every industry. The combination of speed, multimodality, and affordability positions GPT-4o as the backbone for countless new services.

Enhancing Productivity and Education

In the professional and academic sphere, GPT-4o acts as a personal tutor, researcher, and productivity amplifier.

- Real-Time Coaching: A coder can speak aloud their thought process while debugging, and GPT-4o can offer immediate feedback and solutions, acting as a pair-programmer.

- Data Analysis: An analyst can show the model a photo of a whiteboard covered in financial formulas or a dense graph and ask it to summarize the implications or identify trends.

- Language Learning: A learner can use the real-time translation features to practice speaking a foreign language with an assistant that gives immediate feedback on pronunciation and fluency.


The GPT-4o API for Developers: Building the Future

For engineers and startups, the GPT-4o API is perhaps the most exciting development. The improved speed (doubled throughput) and halved cost compared to GPT-4 Turbo mean developers can build much more sophisticated and computationally demanding intelligent assistants without breaking the bank.

At launch, the API exposed GPT-4o as a text and vision model, with OpenAI planning to bring the new audio and video capabilities to a small group of trusted partners first. As that support expands, developers can create applications that:

- Process streaming video and audio for security, monitoring, or accessibility services.

- Build interactive, voice-driven interfaces for applications where typing is impractical (e.g., in a car or manufacturing setting).

- Integrate complex vision analysis into existing software, such as medical imaging analysis or architectural design review.

This accessibility is set to fuel a wave of innovation among developers, shifting the focus from slow, text-based interactions to fluid, sensory-rich experiences.
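For low-latency applications, streaming the response matters as much as raw model speed, because text can be shown or spoken as soon as the first tokens arrive. The sketch below uses only the Python standard library and assumes the Chat Completions endpoint, the `stream` flag, and the server-sent-event line format (`data: {...}` chunks ending with `data: [DONE]`). The helper names and the API key placeholder are illustrative, and the request is built but never sent.

```python
import json
import urllib.request
from typing import Optional

API_URL = "https://api.openai.com/v1/chat/completions"


def build_streaming_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a streaming chat request for gpt-4o."""
    body = {
        "model": "gpt-4o",
        "stream": True,  # ask for incremental chunks instead of one final reply
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(sse_line: str) -> Optional[str]:
    """Pull the text fragment out of one streamed line, if it carries any."""
    line = sse_line.strip()
    if not line.startswith("data:"):
        return None  # blank keep-alive lines and comments carry no text
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return None  # end-of-stream marker
    choices = json.loads(data).get("choices") or []
    if not choices:
        return None
    return choices[0].get("delta", {}).get("content")


request = build_streaming_request("YOUR_API_KEY", "Say hello")
sample_lines = [
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
fragments = [extract_text(line) for line in sample_lines]
print(request.get_method(), request.full_url)
print("".join(f for f in fragments if f))
```

In a real application, the request would be sent with `urllib.request.urlopen` or an HTTP client of your choice, and each decoded line of the response would be passed through `extract_text` as it arrives.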

Intelligent Assistants and the Future of Interaction

The release of GPT-4o is a significant benchmark in the evolution of conversational AI. While other companies, such as Google with Project Astra, are also aggressively pursuing real-time, multimodal AI, GPT-4o sets a high standard for responsiveness and integration.

The future points toward these assistants moving beyond tools and becoming true partners—digital entities that understand our intent, context, and environment. We are moving closer to the vision of a ubiquitous intelligent assistant that integrates with every facet of digital life, managing schedules, offering creative feedback, and facilitating learning.

Looking Ahead: The Future of AI and GPT-4o

The launch of GPT-4o confirms several major AI technology trends that will define the next decade of development. The race is no longer simply about intelligence (raw reasoning power), but about utility—how quickly and seamlessly the intelligence can be deployed.

The Importance of Human-Like Latency

The achievement of human-like latency (around 320 ms on average) is more than a technical feat; it is a psychological one. When an AI responds this quickly, our brains stop perceiving the interaction as a command-response loop and start perceiving it as a conversation. This shift is essential for widespread adoption of real-time AI assistant technology. It means users will be more likely to rely on the AI for complex, back-and-forth tasks where continuity and fluidity are paramount.

Ethical Implications of Multimodal Speed

As GPT-4o capabilities grow, especially in discerning emotion and analyzing live video feeds, the ethical responsibilities around deployment become more acute. Developers must prioritize data privacy and guard against misuse, ensuring that this powerful omni-model AI is used to assist, not intrude. The focus on transparency and safety must evolve just as rapidly as the technology itself.

[Related: https://hyperdaily.one/blog/unlocking-new-realities-ai-spatial-computing/]

The Competitive Landscape: Project Astra

OpenAI’s rivals are not standing still. Google’s Project Astra showcases a similar vision: a highly responsive, personalized, and context-aware multimodal assistant. This competitive pressure suggests that the pace of innovation in natural language processing and real-time vision will only accelerate. Users stand to benefit from increasingly capable, personalized, and efficient intelligent assistants that blur the line between virtual and real-world support. The race to deliver the best real-time AI assistant is driving the industry forward at breakneck speed.

The rapid succession of models and announcements confirms that 2024 is a pivotal year for AI model releases, centered on making AI faster, more sensory, and dramatically more affordable for both users and developers.

Conclusion: The Ultimate Real-Time AI

GPT-4o is not just an upgrade; it is a declaration that the era of slow, disconnected AI is over. By unifying text, audio, and vision into a single, high-speed neural network, OpenAI has created a genuine omni-model AI that functions as a truly responsive, real-time AI assistant.

From expanded free ChatGPT access to the faster and more affordable GPT-4o API for developers, this model promises to permeate everything from professional coding to personal communication and education. Its capabilities, especially the fast audio response and advanced vision understanding, make complex interactions like real-time translation feel effortless and intuitive.

The ChatGPT desktop app keeps this next-gen AI within reach, ready to analyze, converse, and assist instantly. As we continue to integrate this powerful conversational AI into our daily workflows, the way we work, learn, and create will be forever changed. The question is no longer if AI will become part of our lives, but how quickly we can adapt to its real-time presence.

Embrace the speed, explore the multimodality, and begin discovering how to use GPT-4o to unlock a new level of productivity and creative potential in the age of instantaneous artificial intelligence.

FAQs

Q1. What is GPT-4o?

GPT-4o (where the ‘o’ stands for ‘omni’) is OpenAI’s latest flagship multimodal AI model released in 2024. It is designed as a single native model capable of processing and generating content seamlessly across text, audio, and vision inputs and outputs, delivering intelligence comparable to GPT-4 Turbo but with much faster speeds and half the cost.

Q2. How is GPT-4o different from GPT-4?

The key difference between GPT-4o and GPT-4 is architecture and speed. With GPT-4, voice input passed through a sequence of separate models (for example, audio was converted to text before processing), leading to high latency (5.4 seconds on average). GPT-4o processes everything natively within one model, reducing average latency to 320 milliseconds and making it a true real-time AI assistant. Through the API, it is also twice as fast and 50% cheaper than GPT-4 Turbo.

Q3. Can I get free ChatGPT access to GPT-4o?

Yes. OpenAI offers significant free ChatGPT access to the base GPT-4o model. Free users gain access to the model’s core intelligence, AI vision capabilities, data analysis, and file upload features, although they have lower message caps and may experience limits during peak demand compared to paid Plus subscribers.

Q4. What are the key GPT-4o features in terms of audio?

GPT-4o’s audio features include near-instantaneous response times (320 ms on average), the ability to detect and respond to emotional tone in the user’s voice, interruptibility during conversation, and high-quality, natural-sounding voice synthesis. This makes the AI voice assistant far more fluid and human-like.

Q5. What does ‘omni-model AI’ mean?

An omni-model AI (or multimodal AI model) is a single, unified neural network trained to understand and generate outputs based on diverse input types—text, audio, and visual data—all at once. Unlike traditional models that require separate components for each data type, the omni-model provides a cohesive, integrated understanding of context across all senses, which is essential for advanced conversational AI.

Q6. How can developers use the GPT-4o API?

The GPT-4o API gives developers twice the speed of GPT-4 Turbo at half the cost. Developers can use it to build low-latency applications that bring complex vision tasks, speech interaction, and high-volume text generation into their products more affordably and efficiently than before. At launch, the API covered text and vision, with audio and video support planned to follow.

Q7. Is there a ChatGPT desktop app for GPT-4o?

Yes, OpenAI released a dedicated ChatGPT desktop app for macOS (with Windows forthcoming). This app allows users to invoke GPT-4o with a keyboard shortcut, capture screenshots for instant image analysis, and use the low-latency voice mode, integrating the real-time AI assistant directly into their computer’s workflow.