What is GPT-4o? The Ultimate 2024 Guide

Introduction: Witnessing the Birth of Truly Omnimodal AI

The landscape of large language models (LLMs) is shifting faster than ever. Just when it seemed that models like GPT-4 Turbo had defined the peak of generative AI, OpenAI made a seismic announcement at its Spring Update: the introduction of GPT-4o.

So, what is GPT-4o? The “o” stands for “omni,” and this single letter signifies a massive leap forward. GPT-4o is not just an incremental improvement; it is a fundamental redesign of how AI interacts with the world, unifying text, vision, and audio processing in a single, cohesive model. This new OpenAI model promises not only strong intelligence but also unprecedented speed and accessibility, signaling a true paradigm shift in conversational AI.

In this ultimate guide, we will unpack every aspect of GPT-4o. We’ll cover its key features, compare it head-to-head with its predecessor (GPT-4o vs GPT-4 Turbo), show you how to use it, and analyze what it changes for both developers and everyday users, including OpenAI’s decision to make it available to free ChatGPT users. By the end, you’ll understand why many consider this the most significant step toward truly human-like AI interaction in 2024.

What is GPT-4o (GPT-4 Omni)? Defining the Multimodal Revolution

At its core, GPT-4o is OpenAI’s latest flagship multimodal AI model. Before GPT-4o, when you interacted with ChatGPT using voice, the process involved a complex pipeline:

- A smaller model transcribed your voice into text.

- GPT-3.5 or GPT-4 processed the text query.

- Another model converted the resulting text back into synthetic speech.

This handoff introduced latency (OpenAI reported averages of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4) and stripped out contextual nuance such as emotional tone, multiple speakers, or background sounds.
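To make the difference concrete, here is a toy Python model of the two designs. Every function in it is a hypothetical stand-in, not OpenAI code: the pipelined path reduces speech to plain text before the language model runs, so the speaker's tone is lost, and each stage's delay adds to the total.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """A spoken input: the words plus a paralinguistic cue such as tone."""
    words: str
    tone: str  # e.g. "neutral" or "frustrated"


def transcribe(utterance: Utterance) -> str:
    # Hypothetical speech-to-text stage: only the words survive as text.
    return utterance.words


def text_model(prompt: str) -> str:
    # Hypothetical text-only model: it never sees the speaker's tone.
    return f"Answer to: {prompt}"


def pipelined_reply(utterance: Utterance) -> str:
    # Stage 1 feeds stage 2; a text-to-speech stage would then voice this string.
    return text_model(transcribe(utterance))


def unified_reply(utterance: Utterance) -> str:
    # Hypothetical single model that receives the audio itself, tone included.
    opener = "Sorry this is frustrating. " if utterance.tone == "frustrated" else ""
    return opener + f"Answer to: {utterance.words}"


def pipeline_latency(stage_seconds: list[float]) -> float:
    # Stages run one after another, so their delays add up.
    return sum(stage_seconds)


if __name__ == "__main__":
    u = Utterance(words="why does my script crash?", tone="frustrated")
    print(pipelined_reply(u))  # Answer to: why does my script crash?
    print(unified_reply(u))    # Sorry this is frustrating. Answer to: why does my script crash?
```

The point is structural: once speech becomes text, information like tone is gone before the language model ever runs, and every extra stage adds its own delay.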

The Power of Unified Modality

The genius of GPT-4 Omni is that it was trained end-to-end across text, vision, and audio, so the same neural network processes all of its inputs and outputs. As a result, the model can relate these inputs to one another in real time. This unification leads to three dramatic improvements:

- Lower Latency: Audio responses are nearly instantaneous. GPT-4o can respond to audio inputs in as little as 232 milliseconds (ms), with an average of 320 ms—a speed comparable to human conversation.

- Emotional and Contextual Understanding: The AI can pick up on emotional cues, tone, and even background noise in your voice and factor them into its response. If you sound frustrated, it can respond accordingly, allowing for more empathetic and contextual dialogue.

- Seamless Integration of Vision and Audio: You can show the AI a math problem on paper via your camera and ask a question about it out loud at the same time. The same model processes both the visual information and the spoken question, which is what a genuinely integrated multimodal AI looks like.

The release of GPT-4o marked a historic moment, ushering in an age of digital interaction that feels more like talking to a highly intelligent human than operating a piece of software.

The Core Features That Define GPT-4o

The excitement surrounding the OpenAI announcements wasn’t just about speed; it was about the practical capabilities unlocked by this new architecture. The GPT-4o features fundamentally change user expectations for an AI voice assistant and creative partner.

1. Real-Time Conversational AI

The primary breakthrough is the ability for a fluid, interruptible, and expressive real-time AI conversation. Unlike previous voice modes, which required users to wait for the AI to finish speaking before responding, GPT-4o allows genuine dialogue.

- Interruptibility: You can cut the model off, and it understands and adapts its response instantly.

- Expressiveness: The voice output is dramatically more human, capable of delivering responses in multiple distinct personalities and tones (dramatic, robotic, singing, expressive).

- Multilingual Excellence: GPT-4o handles 50 different languages significantly better than its predecessors, making real-time translation and multilingual communication much smoother.

2. Enhanced Vision Capabilities

GPT-4o’s vision is a significant upgrade. While GPT-4 could analyze images, the process was often slow. GPT-4o is nearly instantaneous, allowing for dynamic, real-time visual assistance.

Practical Examples of Vision:

- Tutoring: Show the model a graph or a complex textbook page, and it can instantly interpret and discuss the content, acting as a personal tutor.

- Data Analysis: Snap a photo of a messy whiteboard full of notes, and GPT-4o can transcribe, structure, and summarize the data points.

- Navigation and Real-World Help: Hold your phone camera up to a foreign street sign, and GPT-4o can translate it, read it aloud, and explain the context, effectively transforming it into a powerful visual guide.

3. Desktop Assistant and Screen Sharing

OpenAI introduced a new desktop application for macOS (with a Windows version promised later) that brings GPT-4o directly into your computer workflow.

When invoked, the assistant can look at what you share from your screen. Need help writing a complex email? Show it the context of your previous threads. Struggling with a Python script? Show it the code editor. This tight integration makes GPT-4o one of the best AI tools of 2024 for productivity.

GPT-4o vs GPT-4 Turbo: A Deep Dive into Performance and Cost

When evaluating the performance of any large language model, two factors dominate: speed (latency) and cost (API pricing). A GPT-4o vs GPT-4 Turbo comparison shows not just an improvement but a complete restructuring of value.

Performance Metrics: Speed and Latency

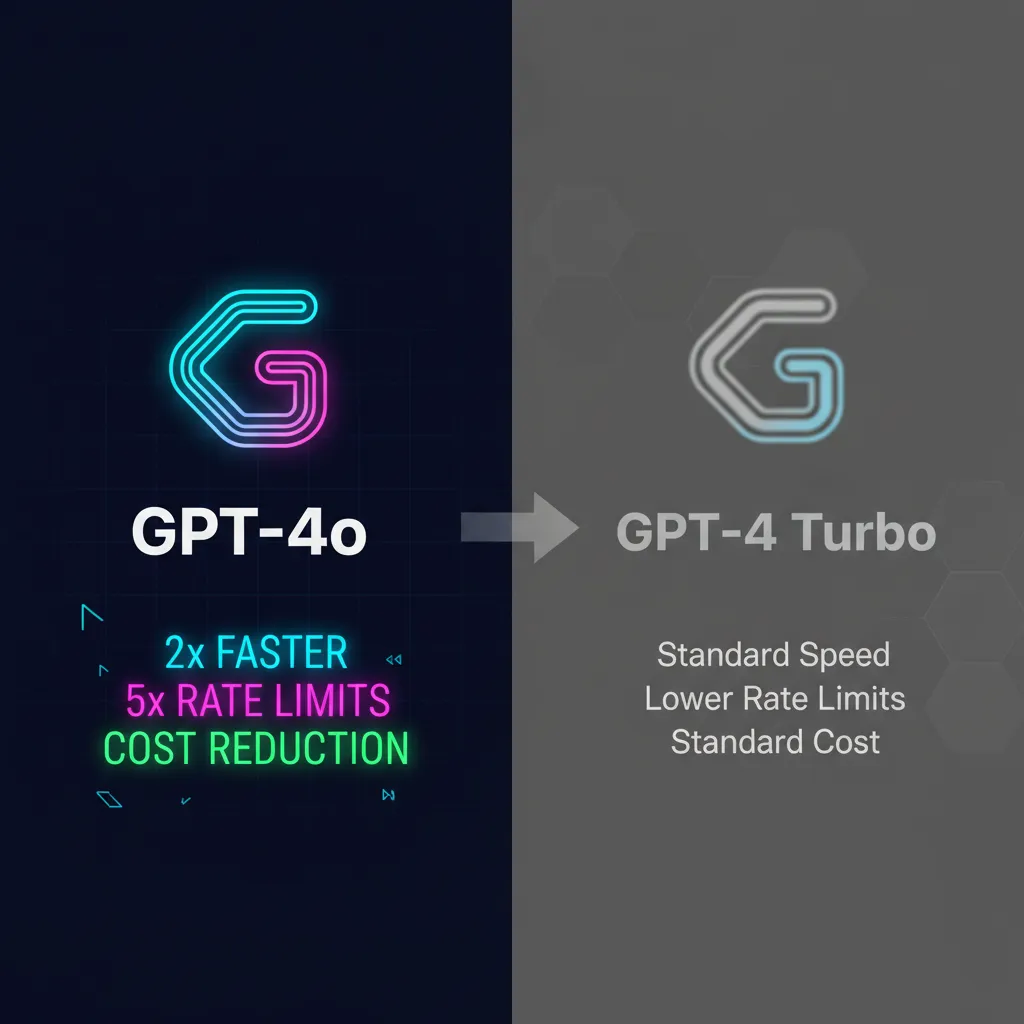

GPT-4 Turbo was already fast, but GPT-4o redefines the standard for speed: OpenAI describes it as roughly twice as fast as GPT-4 Turbo.

| Metric | GPT-4o (Omni) | GPT-4 Turbo | Improvement over GPT-4 Turbo |
|---|---|---|---|
| Speed | About 2x faster | Baseline | Significant |
| Response Latency (Audio) | Avg. 320 ms (as low as 232 ms) | Several seconds (ChatGPT Voice Mode with GPT-4 averaged 5.4 s) | Critical for conversational AI |
| Token Context Window | 128K | 128K | Equal (maintaining high context) |
| Modality | One native model for text, audio, and vision | Text and image input; ChatGPT voice relied on a 3-model pipeline | Fundamental architectural shift |
| Intelligence (Benchmarks) | Slightly higher scores on MMLU and HumanEval | Very high | Marginal gain, but the cost and speed benefits are huge |

Cost Efficiency: Cheaper AI Models

For developers, one of the most compelling aspects of the OpenAI announcements was the GPT-4o API pricing. OpenAI is dramatically reducing the cost for developers to build applications using their state-of-the-art models.

Key takeaway: GPT-4o is 50% cheaper than GPT-4 Turbo for both input and output tokens in the API, and 2x faster. This cost reduction makes high-performance AI accessible to a much broader range of projects and start-ups, and places GPT-4o among the most cost-effective models offering top-tier performance.

This pricing strategy isn’t just good news for large corporations; it lowers the barrier to entry for AI for developers, fostering greater innovation in areas like decentralized infrastructure where cost efficiency is paramount. [Related: The Rise of DePIN: Decentralized Physical Infrastructure Networks Reshaping the Future]

Accessing GPT-4o: Free Tier and Premium Benefits

Perhaps the most impactful news for the average user was the democratization of high-level AI through the latest ChatGPT update.

GPT-4o Free Access: A Massive Leap for the Free Tier

In a move that dramatically reshapes the competitive landscape, OpenAI is making GPT-4o the default model for most free users of ChatGPT.

Previously, free users were restricted to the less capable GPT-3.5 model. Now, free users gain access to the features of GPT-4o, including:

- Access to the highly capable intelligence of a GPT-4 class model.

- Basic data analysis and chart generation.

- The ability to use vision capabilities (analyzing images).

- File uploads for summarization and analysis.

- Memory (ChatGPT can remember details you share across conversations).

It is crucial to note the caveat: free users can send only a limited number of GPT-4o messages, with the limit varying by usage and demand. Once it is reached, ChatGPT automatically switches to the older GPT-3.5. Even so, putting state-of-the-art capabilities in the hands of free users is a game-changer.

Premium Tier (Plus, Team, Enterprise)

Paid subscribers (ChatGPT Plus, Team, and Enterprise) receive several key advantages that make the subscription worthwhile:

- Higher Caps: GPT-4o message limits up to 5x higher than on the free tier before switching to GPT-3.5.

- Full Feature Access: Immediate and full access to the new desktop application, the enhanced ChatGPT voice mode with advanced features, and priority access to new, experimental capabilities.

- Faster Performance: Paid users often experience faster response times even during peak demand.

If you rely on continuous, heavy use of the best available model, the paid tiers remain the superior choice, but the availability of a powerful GPT-4 class model to everyone elevates the baseline quality of AI interaction globally.

Real-World Applications of the New OpenAI Model

The unified power of GPT-4o transcends simple text generation. By bridging audio, vision, and text, it opens up profound new avenues for personal assistance and professional productivity.

1. Education and Learning

For students and lifelong learners, GPT-4o acts as an infinitely patient, multimodal tutor.

- Live Translation: Use the voice mode to hold a conversation with someone speaking a different language. GPT-4o listens, translates in real time, and speaks the response in your chosen language, making cross-cultural communication far easier.

- Code Debugging: Developers can show their screen or an image of their code and explain verbally where they are stuck, receiving immediate, relevant feedback.

- Complex Visual Explanations: Uploading diagrams, charts, or scientific models allows the model to analyze complex visual data and explain the underlying principles using its advanced text capabilities.

2. Enhanced Customer Service and Accessibility

The low latency and emotional understanding make GPT-4o well suited to automated customer service, providing human-like responsiveness without long wait times.

Furthermore, its advanced AI voice assistant capabilities will prove invaluable for accessibility. Users who rely on verbal input or output can engage with technology in a much more natural, less frustrating manner.

3. Creative and Professional Workflows

For professionals, GPT-4o accelerates research, drafting, and content preparation.

- Multisource Summarization: You can show the model a web page, read key passages aloud from a physical book, and ask it to synthesize the information into a single memo.

- Design Feedback: Uploading design mockups or wireframes allows the model to provide instant, contextual feedback based on known design principles, acting as an automated design partner.

This versatility positions GPT-4o to become part of the operational core of many industries, from tech journalism to scientific modeling. [Related: AI Career Mastering Future Job Market]

GPT-4o for Developers: API Access and Pricing

The true measure of a new model’s long-term impact often lies in how accessible it is to developers. As mentioned, the GPT-4o API pricing is a major draw.

Key Developer Advantages

- Performance per Dollar: With roughly twice the speed, half the price, and (according to OpenAI) 5x higher rate limits than GPT-4 Turbo, GPT-4o lets developers spend less on inference while still offering a premium experience.

- Multimodal Endpoints: The goal is a single API model covering text, audio, and vision, which simplifies the architecture of complex multimodal applications. At launch, the API offered GPT-4o as a text and vision model, with audio and video support slated for a small group of trusted partners first. Once audio is broadly available, developers no longer need to stitch together multiple OpenAI services (e.g., Whisper for transcription, GPT for processing, TTS for speech output).

- Consistency: Deploying a single, unified model means less maintenance and more reliable performance when handling mixed inputs.
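For the vision side of the API, here is a minimal sketch of a Chat Completions request body that pairs a question with an inline image, using only Python's standard library. The message shape (content parts of type "text" and "image_url", with the image sent as a base64 data URL) follows OpenAI's public Chat Completions format; the helper names are hypothetical, and actually sending the body requires an API key.

```python
import base64
import json


def image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one user message that pairs a text question with an inline image."""
    # The image is embedded as a base64 data URL instead of a public link.
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
        ],
    }


def vision_request_body(question: str, image_bytes: bytes) -> str:
    # JSON body for POST https://api.openai.com/v1/chat/completions
    payload = {"model": "gpt-4o", "messages": [image_message(question, image_bytes)]}
    return json.dumps(payload)
```

In practice you would read the bytes of, say, a whiteboard photo from disk and pass them in with a question such as "Summarize these notes."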

| Model (USD per 1 Million Tokens) | Input Tokens | Output Tokens |
|---|---|---|
| GPT-4o | $5.00 | $15.00 |
| GPT-4 Turbo (Legacy) | $10.00 | $30.00 |
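The per-request savings are easy to work out. This small sketch plugs the list prices from the table above into a cost function; the model keys and the example workload (2,000 prompt tokens, 500 completion tokens) are illustrative assumptions, and prices should be checked against OpenAI's current pricing page.

```python
# Per-1M-token list prices in USD, taken from the pricing table: (input, output).
PRICES_PER_MILLION = {
    "gpt-4o": (5.00, 15.00),
    "gpt-4-turbo": (10.00, 30.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-million rates."""
    input_rate, output_rate = PRICES_PER_MILLION[model]
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Illustrative workload: 2,000 prompt tokens and 500 completion tokens.
    for model in PRICES_PER_MILLION:
        print(model, request_cost(model, 2_000, 500))
```

At these rates the GPT-4o request costs exactly half as much as the GPT-4 Turbo one, because both the input and output prices are halved.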

This aggressive pricing strategy clearly aims to make GPT-4o the default high-performance model for new applications built on OpenAI’s platform. It also pressures competitors to respond with similarly aggressive pricing, which benefits the wider AI ecosystem.

The Future Landscape: GPT-4o vs Google Gemini and Beyond

The release of GPT-4o throws down a significant gauntlet in the high-stakes battle between OpenAI and Google, which inevitably invites a comparison between Google Gemini and GPT-4o.

Gemini vs. GPT-4o: Multimodal Showdown

Google’s Gemini model (specifically Gemini 1.5 Pro) also boasts powerful native multimodality and an incredibly large context window (up to 1 million tokens).

| Feature | GPT-4o | Google Gemini 1.5 Pro |
|---|---|---|
| Core Modality | Unified (text, audio, and vision in and out) | Native multimodal input (text, audio, images, video); text output |
| Speed/Latency | Extremely low latency (320 ms average audio response) | Very fast, especially for text and long-context processing |
| Context Window | 128K tokens | Up to 1 million tokens (current public leader) |
| API Cost | Highly competitive (50% cheaper than GPT-4 Turbo) | Competitive; priced in tiers by prompt length |
| Real-Time Voice | Current benchmark leader for human-like real-time AI conversation | Strong voice capabilities, but GPT-4o currently leads on low latency and expressiveness |

While Gemini 1.5 Pro holds the advantage in processing massive amounts of data in a single prompt thanks to its huge context window, GPT-4o excels in real-time, interactive performance. If you are building an instantaneous AI voice assistant, GPT-4o might be the better choice. If you are analyzing a 500-page document or a 2-hour video, Gemini’s large context window currently gives it an edge.
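To see why context size matters for the 500-page example, here is a back-of-the-envelope check in Python. It assumes about 500 words per page (a hypothetical figure; real pages vary widely) and OpenAI's published rule of thumb that one token is roughly three-quarters of an English word; actual counts depend on the tokenizer and the text.

```python
# Rule of thumb: 1 token is about 3/4 of an English word, i.e. ~4 tokens per 3 words.
# words_per_page=500 is an assumed, illustrative value.
def estimate_tokens(pages: int, words_per_page: int = 500) -> int:
    words = pages * words_per_page
    return words * 4 // 3


def fits(pages: int, context_window: int, words_per_page: int = 500) -> bool:
    # True if the estimated prompt alone fits in the model's context window.
    return estimate_tokens(pages, words_per_page) <= context_window


if __name__ == "__main__":
    print(estimate_tokens(500))    # 333333 (500 pages x 500 words = 250,000 words)
    print(fits(500, 128_000))      # False: too big for a 128K window
    print(fits(500, 1_000_000))    # True: fits in a 1M-token window
```

Under these assumptions the document needs roughly 333K tokens: more than GPT-4o's 128K window, but within a 1-million-token window. A real prompt would also need room for the model's reply.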

The competitive tension between these titans drives the rapid innovation we see across the AI industry. As both companies continue to refine their models, consumers and developers benefit from ever more powerful and cheaper AI models.

Looking Ahead: The Conversational Interface

The future of artificial intelligence is clearly shifting away from static text boxes toward dynamic, multimodal, and omnipresent AI assistants. The success of GPT-4o is predicated on its ability to mimic human conversational flow.

This focus aligns with the broader trend of integrating AI deeply into physical and decentralized infrastructure. Imagine smart cities where AI-driven sensors and conversational interfaces manage urban services seamlessly, or conversational AI helping guide us toward a more sustainable, eco-friendly future. [Related: Smart Cities AI IoT Urban Futures]

The low latency and high quality of GPT-4o make it the ideal candidate to become the default interface for interacting with the digital world, whether it’s through a desktop app, a smartphone, or an augmented reality device.

How to Start Using GPT-4o Today

Accessing this revolutionary model is straightforward, whether you are a free user or a seasoned developer.

For Consumers (ChatGPT)

- Web Access: Log in to ChatGPT. If you are on the free tier, GPT-4o will automatically be enabled for a limited number of messages. If you are a Plus subscriber, select GPT-4o from the model dropdown menu.

- Mobile App: Ensure your ChatGPT mobile app is updated. The new ChatGPT voice mode and vision capabilities will be available through the app interface.

- Desktop App (macOS): Download the dedicated macOS app (Windows version pending). This allows you to invoke the assistant with a simple keyboard shortcut, giving it access to your screen and mic for seamless integration into your workflow.

For Developers (API)

Developers can access the model via the standard OpenAI API endpoints. To use the model, simply specify gpt-4o in your API calls. The reduced GPT-4o pricing and improved speed should encourage immediate migration from older GPT-4 models to capitalize on the performance gains.
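As a minimal sketch of such a call, here is a request to the public Chat Completions endpoint using only Python's standard library (the official openai SDK is the more common choice). It assumes your key is in the OPENAI_API_KEY environment variable; the helper names build_request and ask are hypothetical.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build a Chat Completions request; only the model string names GPT-4o."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    # Sends the request (needs network access) and returns the first choice's text.
    req = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__" and "OPENAI_API_KEY" in os.environ:
    print(ask("In one sentence, what does the 'o' in GPT-4o stand for?"))
```

In the simplest case, moving an existing GPT-4 Turbo integration over is a matter of changing the model string passed to build_request.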

For those building new applications, the multimodal capabilities are the place to focus. Vision input through the API, with audio to follow, opens up user experiences that were previously too complex or expensive to implement. [Related: Mastering Prompt Engineering Unlock AI Potential]

Conclusion: The Arrival of the Omni Model

The launch of GPT-4o represents more than just a software update; it is a pivotal moment in the history of artificial intelligence. By unifying text, audio, and vision into a single, highly efficient, and blisteringly fast model, OpenAI has delivered a genuinely omnimodal AI.

We’ve seen that GPT-4o improves significantly on its predecessors: it is faster, cheaper for developers, and available free to most ChatGPT users. Its low-latency, real-time conversation capabilities have redefined what we expect from an AI voice assistant.

Whether you’re using the desktop application for productivity, building on the GPT-4o API as a developer, or simply enjoying the vastly improved free tier of ChatGPT, GPT-4o is set to be the dominant intelligence behind many of the best AI tools of 2024.

The future of artificial intelligence is conversational, multimodal, and accessible. With GPT-4o, that future is here now.

FAQs

Q1. What is GPT-4o?

GPT-4o (Omni) is the latest flagship multimodal AI model from OpenAI, announced during the OpenAI Spring Update. It is unique because it processes text, audio, and vision inputs and outputs natively through a single neural network, allowing for extremely low latency and highly contextual interactions.

Q2. Is GPT-4o better than GPT-4 Turbo?

In most respects, yes. GPT-4o matches GPT-4 Turbo on English text and code while improving on non-English languages. It is approximately 2x faster, 50% cheaper via the API, and natively unified across modalities (audio, vision, text), leading to far superior real-time AI conversation and vision processing.

Q3. Is GPT-4o free to use?

Yes. OpenAI has made GPT-4o available to users on the free tier of ChatGPT, replacing GPT-3.5 as the default model. Free users get GPT-4o’s intelligence, vision, and data analysis features, though usage is capped more tightly than on paid subscriptions.

Q4. What does the “o” in GPT-4o stand for?

The “o” in GPT-4o stands for “omni,” reflecting its ability to handle all three major modalities (text, audio, and vision) in a single model.

Q5. When was the GPT-4o release date?

GPT-4o was officially announced on May 13, 2024, during the OpenAI Spring Update. Its text and image capabilities became available in the API and began rolling out to ChatGPT free and paid users that day, while the new voice and video features were scheduled to follow in the subsequent weeks and months.

Q6. How does GPT-4o enable real-time AI conversation?

GPT-4o takes audio in and produces audio out within a single model instead of chaining separate transcription, text, and speech-synthesis models. This cuts the response time to an average of 320 milliseconds, similar to human response time in conversation, allowing fluid, interruptible dialogue much like talking with another person.

Q7. How does GPT-4o compare to Google Gemini?

The AI model comparison shows both GPT-4o and Google Gemini 1.5 Pro are excellent multimodal AI models. GPT-4o currently leads on low latency and speed for conversational AI, while Gemini 1.5 Pro currently holds an advantage in its extremely large context window (up to 1 million tokens), making it superior for processing massive documents or long videos.