What is GPT-4o? The Future of AI is Here and It’s Free

Introduction: A New Era of Conversational AI

The world of artificial intelligence changes at breakneck speed, but every once in a while, a development hits that resets the entire playing field. OpenAI’s Spring Update in May 2024 introduced one such seismic shift: GPT-4o.

So, what is GPT-4o? The “o” stands for “omni,” signifying its integration of text, vision, and audio in a single model. It’s not just a faster version of GPT-4; it’s a fundamentally redesigned multimodal AI model that interacts with users in a way that feels seamless, intuitive, and remarkably human.

This new OpenAI model sets a dizzying benchmark for the future of artificial intelligence, pushing past the segmented systems of the past. Imagine a single AI that can fluidly understand tone of voice, read complex visual data, and respond with human-like latency, all while being significantly more accessible than its predecessors. The most disruptive detail? A substantial portion of GPT-4o’s power is now available to free ChatGPT users.

In this deep dive, we will explore the features of this next-generation AI, detail its performance, explain how to use GPT-4o, and analyze the implications for everything from productivity to education. Get ready to meet one of the most capable AI assistants of 2024.

The “Omni” Revolution: Defining GPT-4o

To understand why GPT-4o is so revolutionary, we must first look at its moniker. The “o” for omni, meaning all-encompassing, perfectly captures its core innovation: native multimodality.

Previous versions of ChatGPT handled voice through a complex pipeline. A voice query was first transcribed to text by a simple speech-to-text model, processed by GPT-3.5 or GPT-4, and then converted back to synthesized audio by yet another model. This multi-step process introduced lag and lost crucial context: the core model never heard the user’s tone, multiple speakers, or background noise, and it could not laugh, sing, or express emotion in its reply.

GPT-4o changes this entirely. It was trained end-to-end across text, audio, and vision, meaning a single model accepts any combination of these as input and can generate any combination of text, audio, and image outputs.
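The difference between a cascaded pipeline and an end-to-end model can be illustrated with a deliberately simplified toy in Python. It is not how OpenAI implements either system; `AudioClip`, `cascaded_pipeline`, and `end_to_end_model` are hypothetical names whose only purpose is to show where information such as tone drops out of a transcription-first design.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for a voice message: the words plus how they were said."""
    words: str
    tone: str  # e.g. "frustrated", "cheerful"


def cascaded_pipeline(clip: AudioClip) -> dict:
    # Stage 1 (speech-to-text) keeps only the words; the tone is discarded here.
    transcript = clip.words
    # Stage 2: the text-only model sees nothing but the transcript.
    # (Stage 3, text-to-speech, would then voice the reply; it is omitted here.)
    return {"model_saw": {"text": transcript}}


def end_to_end_model(clip: AudioClip) -> dict:
    # A single network receives the audio itself, so tone is still available to it.
    return {"model_saw": {"text": clip.words, "tone": clip.tone}}


clip = AudioClip(words="My order still hasn't arrived.", tone="frustrated")
print(cascaded_pipeline(clip))
print(end_to_end_model(clip))
```

In the toy, only the end-to-end path can condition its answer on the speaker’s frustration, which is the intuition behind the “Context Preservation” point below.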

Key Takeaways of the GPT-4o Architecture:

- Unified Model: Unlike previous systems, GPT-4o is a single neural network handling all inputs and outputs.

- Real-Time Processing: This unification dramatically reduces latency, enabling true real-time AI conversation.

- Context Preservation: The model retains context across modalities, allowing it to understand nuances like emotion, hesitation, or visual cues.

This architectural shift isn’t just about speed; it’s about the quality and fluidity of interaction, making the distinction between human and AI interaction increasingly blurred.

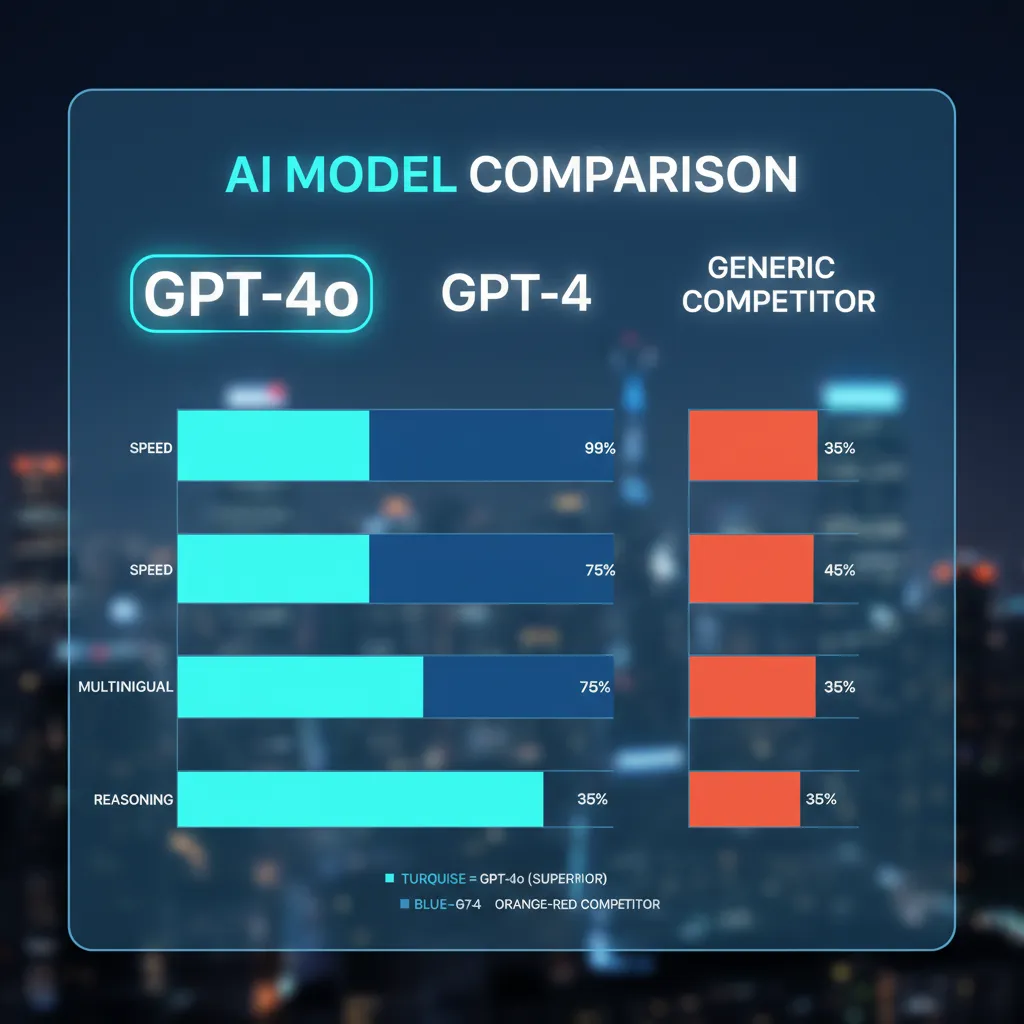

Unprecedented Performance: GPT-4o vs. GPT-4

For many users, the most critical metric is performance, and here the GPT-4o vs. GPT-4 comparison is striking. GPT-4o matches GPT-4 Turbo-level intelligence on English text and code while adding significant gains in efficiency and speed.

| Feature | GPT-4 / GPT-4 Turbo | GPT-4o (Omni Model) | Impact |
|---|---|---|---|
| Intelligence Level | High | High (matches or exceeds GPT-4 Turbo) | Maintained intelligence while gaining speed. |
| Speed/Latency (Audio) | Avg. 5.4 s in the old GPT-4 Voice Mode pipeline | As fast as 232 ms, avg. 320 ms | Enables truly conversational AI. |
| Multimodality | Separate models for transcription/synthesis | Native, unified multimodality | Better context, emotional understanding. |
| API Pricing | GPT-4 Turbo list price | 50% cheaper than GPT-4 Turbo | Dramatically lowers cost for developers. |
| API Rate Limits | GPT-4 Turbo limits | 5x higher than GPT-4 Turbo | More headroom for high-volume apps. |
| Language Support | Strong text, weaker voice/vision in non-English | Significantly better non-English performance across all modalities | Improves global accessibility. |

This comparison highlights why GPT-4o’s performance has garnered so much attention: it provides GPT-4-class quality with the rapid response needed for modern, dynamic interactions.

Speed is the New Intelligence

OpenAI reports that GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds. That is similar to human response time in conversation, eliminating the awkward pauses that plagued previous voice assistants (the earlier GPT-4 Voice Mode averaged 5.4 seconds). This is the foundation of the real-time AI assistant experience we’ve been promised for years.

The Multimodal Powerhouse: Vision, Voice, and Text Mastery

GPT-4o’s real strength lies in its ability to combine text, audio, and visual inputs in real time, opening up use cases that were previously restricted to science fiction.

1. Real-Time Conversational AI

The enhanced audio capabilities are perhaps the most immediately impactful feature. GPT-4o doesn’t just transcribe; it processes the sound itself.

In OpenAI’s live GPT-4o demo, the AI showcased abilities like:

- Interruptibility: Users can talk over the AI, and it processes the interruption without losing context, just like a human.

- Emotion Detection: It can accurately gauge the user’s emotional state (e.g., happiness, frustration, surprise) and adjust its response tone accordingly.

- Dynamic Personalities: It can switch between different conversational styles (dramatic, robotic, singing) on demand, making interactions incredibly engaging.

This level of fluidity makes it the ultimate partner for dynamic, back-and-forth communication.

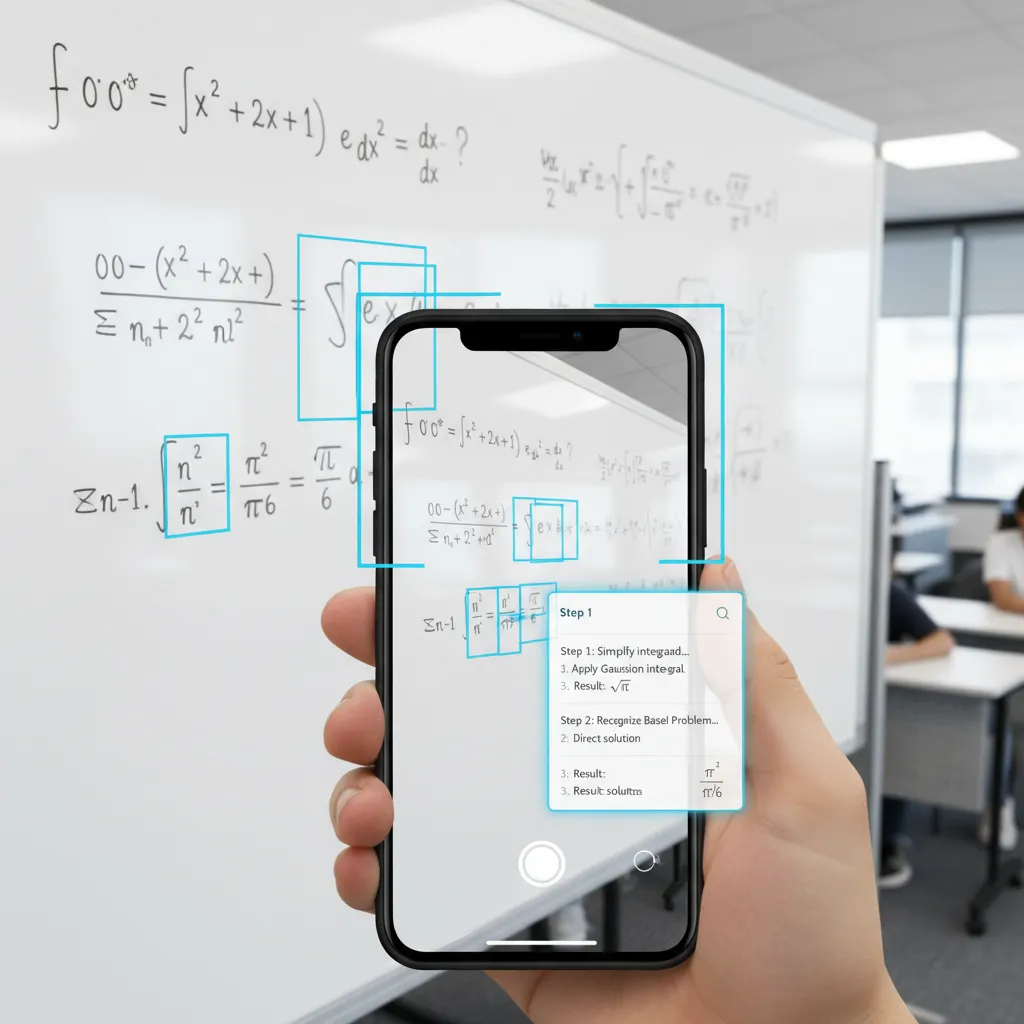

2. Vision Capabilities That See and Interpret

GPT-4o’s vision capabilities are perhaps the most potent tool for practical daily applications. Because the model integrates vision natively, it can process visual data (photos or a live video feed) alongside text and voice queries in near real time.

Imagine pointing your phone camera at something and asking the AI a question:

- Real-time Translation: Point your camera at a foreign-language sign, and GPT-4o translates it and reads it back to you in real time.

- Complex Problem Solving: Point the camera at a handwritten math equation or a complex circuit board, and the AI can analyze the components and guide you step by step through the solution. This moves beyond simple object recognition into deep visual comprehension.

- Data Analysis: Snap a picture of a complex chart or graph, and ask GPT-4o to summarize the trends or extrapolate future data points.

These features drastically expand the utility of ChatGPT beyond a simple chatbot into a functional, highly intelligent visual assistant.
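For developers, the same kind of image question can be sent through the API. The sketch below assumes OpenAI’s Chat Completions message format, in which a user message’s `content` is a list mixing `text` parts and `image_url` parts, and an image can be supplied inline as a base64 data URL. `build_vision_messages` is a hypothetical helper name, not part of any SDK.

```python
import base64


def build_vision_messages(image_bytes: bytes, question: str, mime: str = "image/png") -> list[dict]:
    """Build a Chat Completions `messages` list that pairs a question with one image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                # The text part carries the question about the image.
                {"type": "text", "text": question},
                {
                    "type": "image_url",
                    # The image is passed inline as a base64 data URL.
                    "image_url": {"url": f"data:{mime};base64,{encoded}"},
                },
            ],
        }
    ]
```

In practice you would read a file such as `chart.png` as bytes, call `build_vision_messages(data, "Summarize the trend in this chart.")`, and pass the result as `messages` to `client.chat.completions.create(model="gpt-4o", ...)`.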


3. API and Developer Tools

For developers, the GPT-4o API is a game-changer. It is twice as fast as the GPT-4 Turbo API and half the price. This combination of lower cost and higher speed means businesses can deploy high-quality, real-time AI features at scale, making sophisticated AI accessible to startups and large enterprises alike.
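To make “half the price” concrete, here is a small cost estimate in Python. The per-token figures are GPT-4 Turbo’s and GPT-4o’s launch list prices in USD per 1 million tokens; treat them as an assumption, since OpenAI’s pricing may have changed since launch. The daily workload is hypothetical.

```python
# Assumed launch list prices in USD per 1M tokens; check OpenAI's pricing page for current rates.
PRICES = {
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload, billing input and output tokens separately."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 2M input tokens and 500K output tokens per day.
turbo = estimate_cost("gpt-4-turbo", 2_000_000, 500_000)
omni = estimate_cost("gpt-4o", 2_000_000, 500_000)
print(f"GPT-4 Turbo: ${turbo:.2f}/day, GPT-4o: ${omni:.2f}/day")
# GPT-4 Turbo: $35.00/day, GPT-4o: $17.50/day
```

Because both the input and output rates are halved under these assumed prices, the GPT-4o bill comes out at exactly half for any mix of input and output tokens.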

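OpenAI is encouraging developers with documentation and tutorials on GPT-4o’s multimodal capabilities, particularly for applications that need low latency and robust interpretation. At launch, however, the API exposed GPT-4o as a text and vision model; OpenAI said its new audio and video capabilities would first reach a small group of trusted partners.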

The Price of Power: Is GPT-4o Really Free?

One of the biggest headlines from the GPT-4o launch was the promise of unprecedented accessibility. The key question dominating searches is: is GPT-4o free?

The short answer is: Yes, mostly, and that’s a massive strategy shift for OpenAI.

OpenAI is rolling out GPT-4o to all users, including those on the free tier of ChatGPT. This means millions of free users who were previously limited to the less powerful GPT-3.5 now have access to a state-of-the-art model.

Understanding the Tier Structure:

1. Free Tier Access (GPT-4o)

- The Benefit: Free users can access GPT-4o’s core capabilities, including its superior intelligence, speed, and basic vision/data analysis.

- The Limit: Free access is usage-capped. Once the limit is hit (which varies based on demand), the user is automatically switched back to GPT-3.5 until the cap resets. Free users also don’t get unlimited use of advanced tools like data analysis or DALL-E image generation.

2. Plus and Team Tiers (Paid Access)

- The Benefit: Paid subscribers receive significantly higher usage limits before hitting their own caps: up to 5x more messages than free users on Plus, and higher still on Team. They also get priority access during peak times and broader use of the advanced tools.

- The Value: For power users, the subscription now delivers substantially more value, with far more headroom to use OpenAI’s newest model.
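The free-tier fallback can be pictured as a simple model router. OpenAI does not publish its caps or routing logic, so `FreeTierRouter`, the cap value, and the model labels below are all hypothetical; the toy only shows the shape of “use GPT-4o until the cap is reached, then fall back until the window resets.”

```python
from dataclasses import dataclass


@dataclass
class FreeTierRouter:
    """Toy router: serve the primary model until a message cap is hit, then fall back."""
    cap: int  # hypothetical cap; the real limit is unpublished and varies with demand
    used: int = 0
    primary: str = "gpt-4o"
    fallback: str = "gpt-3.5-turbo"  # label for the fallback model

    def pick_model(self) -> str:
        # Count a message against the cap only while the primary model is still allowed.
        if self.used < self.cap:
            self.used += 1
            return self.primary
        return self.fallback

    def reset(self) -> None:
        # Called when the usage window rolls over.
        self.used = 0


router = FreeTierRouter(cap=3)
print([router.pick_model() for _ in range(5)])
# ['gpt-4o', 'gpt-4o', 'gpt-4o', 'gpt-3.5-turbo', 'gpt-3.5-turbo']
```

In this picture, a paid tier would simply be the same router with a larger `cap`.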

By offering this tiered access, OpenAI is putting its most capable model in front of a mass audience, driving adoption while maintaining a strong incentive for subscription upgrades.


How to Get Access and Start Using GPT-4o

If you’re wondering how to get access to GPT-4o, the process is straightforward, though availability may vary by platform and location while OpenAI rolls the model out gradually.

Step 1: Using the Web Interface

- Go to the main ChatGPT website.

- Log in or create a free account.

- In the chat interface, look for the model selector dropdown menu at the top of your screen.

- Select “GPT-4o.” (If you are on the free tier, this option will be available, but your usage will be limited.)

Step 2: Utilizing the New Desktop App

One of the major announcements accompanying the release was a dedicated ChatGPT desktop app.

Initially launched for macOS, this app is designed to seamlessly integrate the ai assistant into the operating system. With a simple keyboard shortcut (Option + Spacebar), users can instantly pull up the ChatGPT interface and ask questions, capture screenshots, or engage in voice conversations without needing a browser window.

This shift toward native application integration signals OpenAI’s move from a web tool to an essential utility, much like a search engine or operating system component.

Future Expansion: Mobile and Windows

While the macOS app was the first to roll out, mobile apps for iOS and Android are being updated to fully incorporate the real-time audio and vision capabilities. A dedicated Windows application is also anticipated, further embedding GPT-4o into professional workflows.

Step 3: Integrating via the API

Developers who want to integrate GPT-4o into their own applications need to specify the gpt-4o model identifier in their API calls. GPT-4o’s lower pricing makes this an extremely attractive option for building scalable applications that rely on sophisticated reasoning and multimodal processing.
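Here is a minimal sketch of such a call, assuming the official `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable. `build_request` and `ask_gpt4o` are hypothetical helper names; `OpenAI()` and `client.chat.completions.create` are the SDK’s Chat Completions interface.

```python
def build_request(prompt: str, model: str = "gpt-4o") -> dict:
    """Keyword arguments for a Chat Completions request; `model` selects GPT-4o."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": prompt},
        ],
    }


def ask_gpt4o(prompt: str) -> str:
    # Imported lazily so build_request can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(**build_request(prompt))
    return response.choices[0].message.content
```

Calling `ask_gpt4o("What does the o in GPT-4o stand for?")` sends one request and returns the reply text. For an existing GPT-4 Turbo integration that uses the same request shape, switching over may be as small as changing the `model` argument.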

Real-World Applications of GPT-4o

The true measure of any AI is its utility. OpenAI’s GPT-4o demo highlighted numerous practical scenarios where its speed and multimodality create tangible value.

Enhancing Global Communication

GPT-4o excels at real-time language translation. During the live demonstration, the model translated a conversation between two people speaking Italian and English, taking audio in and giving audio back in near real time. This capability is valuable for international business, travel, and cross-cultural communication.

Revolutionizing Customer Service and Support

For businesses, GPT-4o promises faster, more empathetic, and more effective conversational AI agents. Because the AI can pick up frustration in a customer’s voice or quickly analyze a screenshot showing an error message, support systems could resolve issues faster and with higher satisfaction. This raises the bar for conversational AI in the enterprise space.

Education and Personalized Tutoring

Students can benefit immensely from the enhanced vision and real-time dialogue. Instead of typing out a complex physics problem, they can simply point the phone camera at it (using GPT-4o’s vision capabilities) and receive a step-by-step vocal explanation tailored to their questions.

This level of interactive, personalized guidance moves AI from being a homework answer generator to a dynamic tutoring partner.


Navigating the Competition: GPT-4o vs. Google Gemini

The unveiling of GPT-4o took place against the backdrop of an intensifying “AI arms race,” primarily between OpenAI and Google, whose Gemini models are GPT-4o’s main competitor.

While Google’s Gemini family (especially Ultra and the recent updates to Gemini 1.5) also champions multimodality and strong performance, GPT-4o currently holds a distinct edge in one crucial area: speed and native integration.

GPT-4o’s low audio response latency is a critical differentiating factor that fundamentally changes the user experience, making the interaction feel nearly instantaneous. Furthermore, OpenAI’s aggressive decision to make GPT-4o broadly available for free puts immediate pressure on Google to similarly democratize its high-end models, pushing the industry toward greater accessibility.

The competition ensures that the future of artificial intelligence will remain dynamic, benefiting consumers with ever-improving and increasingly accessible tools. The performance gains offered by both models reinforce the expectation that high-level reasoning and complex analysis should be instantaneous.


The Broader Implications for the Future of Artificial Intelligence

The launch of GPT-4o is more than just a product update; it’s a milestone in AI’s path toward becoming a truly integrated partner in human life.

The Shift from Text Generator to Companion

GPT-4o’s capability to understand emotion and modulate its own vocal expression moves it beyond being a functional tool and toward becoming a digital companion. This raises profound questions about the ethics of emotional connection with AI and the design of AI systems that can genuinely anticipate human needs.

Redefining Digital Workflows

The ChatGPT desktop app and the enhanced OpenAI developer tools suggest a future where AI is not something you navigate to, but something that is always with you. Whether it’s drafting complex legal documents, debugging code with your voice, or summarizing an email on your screen, the friction between task and AI intervention is disappearing. This is a massive boon for productivity and efficiency in the digital age.


The Dawn of AI-Native Interfaces

OpenAI’s GPT-4o demo showcased the potential for hands-free computing: interacting with information and systems purely through voice and vision. This foreshadows a future where keyboards and screens might take a backseat to natural language, making technology accessible to more people in more environments. GPT-4o is an assistant built for the age of ubiquitous computing.

Conclusion: The Omni Future is Now

GPT-4o represents a significant inflection point in the timeline of AI development. By unifying text, audio, and vision into a single, highly optimized model, OpenAI has not only delivered higher performance but has also cracked the code on what genuine, real-time conversational AI feels like.

The accessibility shift, making a model of this caliber available to free ChatGPT users, ensures that GPT-4o’s capabilities will rapidly permeate global digital life.

Whether you are a developer leveraging GPT-4o’s lower pricing and faster API, or a casual user exploring its features through the new desktop app, the message is clear: the future of artificial intelligence is multimodal, immediate, and here right now. The time to learn how to use GPT-4o and bring this multimodal AI model into your workflow is today.

FAQs (People Also Ask)

Q1. What does the “o” in GPT-4o stand for?

The “o” in GPT-4o stands for “omni,” which means “all” or “all-encompassing.” This name reflects the model’s ability to natively process and generate outputs across text, audio, and vision modalities within a single unified neural network, eliminating the lag associated with separate models.

Q2. Is GPT-4o actually free for users?

Yes, a major aspect of the OpenAI announcement was making GPT-4o available to all free users of ChatGPT. While free users receive access to the model’s core intelligence and speed, their usage is subject to caps. Paid subscribers (Plus and Team) receive significantly higher usage limits and priority access.

Q3. How much faster is GPT-4o than GPT-4?

GPT-4o is about twice as fast as GPT-4 Turbo for text and code in the API, but its biggest speed advantage is in audio. GPT-4’s Voice Mode relied on a pipeline of three separate models and averaged 5.4 seconds per response, while GPT-4o can respond to audio in as little as 232 milliseconds, with an average of 320 milliseconds. That is similar to human response time in conversation and enables true real-time AI conversation.

Q4. What are the key vision capabilities of GPT-4o?

GPT-4o’s vision capabilities allow the model to interpret images and live video streams quickly. Key uses include analyzing handwritten math problems, interpreting complex graphs and charts, describing objects in detail, and performing real-time visual language translation by looking at signs or screens.

Q5. What is the ChatGPT desktop app and who can use it?

The ChatGPT desktop app is a native application (initially launched for macOS) that integrates ChatGPT directly into the operating system. It allows users to quickly launch the assistant using a keyboard shortcut (Option + Spacebar) to ask questions, share screenshots, and engage in voice chats instantly. It is intended to embed the AI assistant into daily work routines.

Q6. How does GPT-4o handle emotion in conversations?

Because GPT-4o is a single, unified multimodal model, it processes the raw audio input, allowing it to pick up nuances in the user’s tone, pitch, and rhythm. This means the model can infer a user’s emotional state (e.g., happiness, sadness, or confusion) and adjust its own vocal output and conversational strategy accordingly, making the interaction feel more empathetic and realistic.

Q7. Is GPT-4o better than Google Gemini?

Google Gemini and GPT-4o are both at the cutting edge of AI in 2024. While both are highly capable multimodal models, GPT-4o currently holds an advantage in real-time responsiveness and accessibility, particularly with its very low latency for voice interactions and its broad rollout to free users following the OpenAI Spring Update. The competition continues to drive innovation in performance and features.

Q8. Where can developers find information on the GPT-4o API?

Developers can find comprehensive documentation, including technical specifications and tutorials, on the official OpenAI developer website. The GPT-4o API is popular because it offers twice the speed of GPT-4 Turbo at half the cost, making it highly efficient for developing high-volume, real-time applications.