What is GPT-4o? OpenAI’s New AI Model Explained

Introduction: The Dawn of the ‘Omni’ Model

The launch of GPT-4 reshaped the digital landscape. But if GPT-4 was the Internet moment for conversational AI, then GPT-4o, unveiled at OpenAI’s Spring Update in May 2024, is the mobile moment.

You may be asking, what is GPT-4o? It is not merely an incremental upgrade to the existing GPT-4 model; it’s a foundational shift in how large language models (LLMs) interact with the world. The “o” stands for “omni”, signifying its native ability to seamlessly process and generate information across all modalities: text, audio, and vision.

This new OpenAI model removes the latency and loss of nuance that came with chaining together separate models for different tasks. The result is an AI experience that is faster, smarter, and noticeably more human. It sets a new standard for conversational AI and makes a strong case as the best AI model of 2024 for multimodal tasks.

In this deep dive, we will unpack GPT-4o’s features, compare it with GPT-4, explore its capabilities, and show you how to use GPT-4o today, including what free users get.

The Core Revolution: What Makes GPT-4o an ‘Omni Model’?

To understand the significance of this announcement, we must look beyond performance metrics and consider the underlying architecture. Earlier multimodal experiences, such as the original ChatGPT voice assistant, worked by patching together specialized components.

For instance, when you spoke to the old voice assistant, the process looked like this:

- Audio was converted to text by Model A (speech-to-text).

- Text was processed by Model B (GPT-4) to formulate a response.

- The text response was converted back to audio by Model C (text-to-speech).

This pipeline created lag, loss of nuance, and a distinctly robotic interaction.
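The three steps above can be sketched in a few lines of Python. This is a toy model, not OpenAI’s implementation: the `Utterance` class, the stage functions, and the per-stage latencies are all hypothetical placeholders chosen for illustration. It shows two things: latencies add up across stages, and the tone of voice is dropped at the transcription step, so the language model never sees it.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """A spoken input: the words plus how they were said."""
    words: str
    tone: str  # e.g. "frustrated" or "calm"


# Illustrative per-stage latencies in seconds. These are placeholders,
# not measurements of any real system.
STAGE_LATENCY = {"speech_to_text": 0.8, "language_model": 1.5, "text_to_speech": 0.7}


def speech_to_text(utterance: Utterance) -> str:
    # Model A: transcription keeps the words and drops the tone.
    return utterance.words


def language_model(transcript: str) -> str:
    # Model B: the text model only ever sees the transcript.
    return f"Reply to: {transcript}"


def text_to_speech(reply: str) -> str:
    # Model C: stand-in for audio synthesis; returns a tagged string.
    return f"<audio>{reply}</audio>"


def chained_pipeline(utterance: Utterance) -> tuple[str, float]:
    """Run the three stages in sequence and sum their latencies."""
    transcript = speech_to_text(utterance)
    reply = language_model(transcript)
    audio = text_to_speech(reply)
    return audio, sum(STAGE_LATENCY.values())


audio, latency = chained_pipeline(Utterance("my order is late", "frustrated"))
print(audio, f"({latency:.1f} s)")
```

Notice that `"frustrated"` never reaches `language_model`. In a chained design, anything the transcript cannot carry is lost before the reasoning step.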

Native Multimodality: The Architectural Shift

GPT-4o changes this by being trained end to end across text, audio, and vision. It treats all three as one integrated stream, so a single neural network handles the input and output directly, which improves speed, accuracy, and emotional range.

This multimodal AI model doesn’t just recognize words; it understands how those words are delivered. It can perceive tone, pace, emotion, and subtle visual cues in real-time, making real-time AI conversation feel truly natural.

Why Speed Matters: Latency is the New Frontier

One of the most immediate and profound benefits of GPT-4o is its speed. In standard audio interactions, the model can respond in as little as 232 milliseconds (ms), with an average response time of 320 ms—a speed comparable to human conversation.

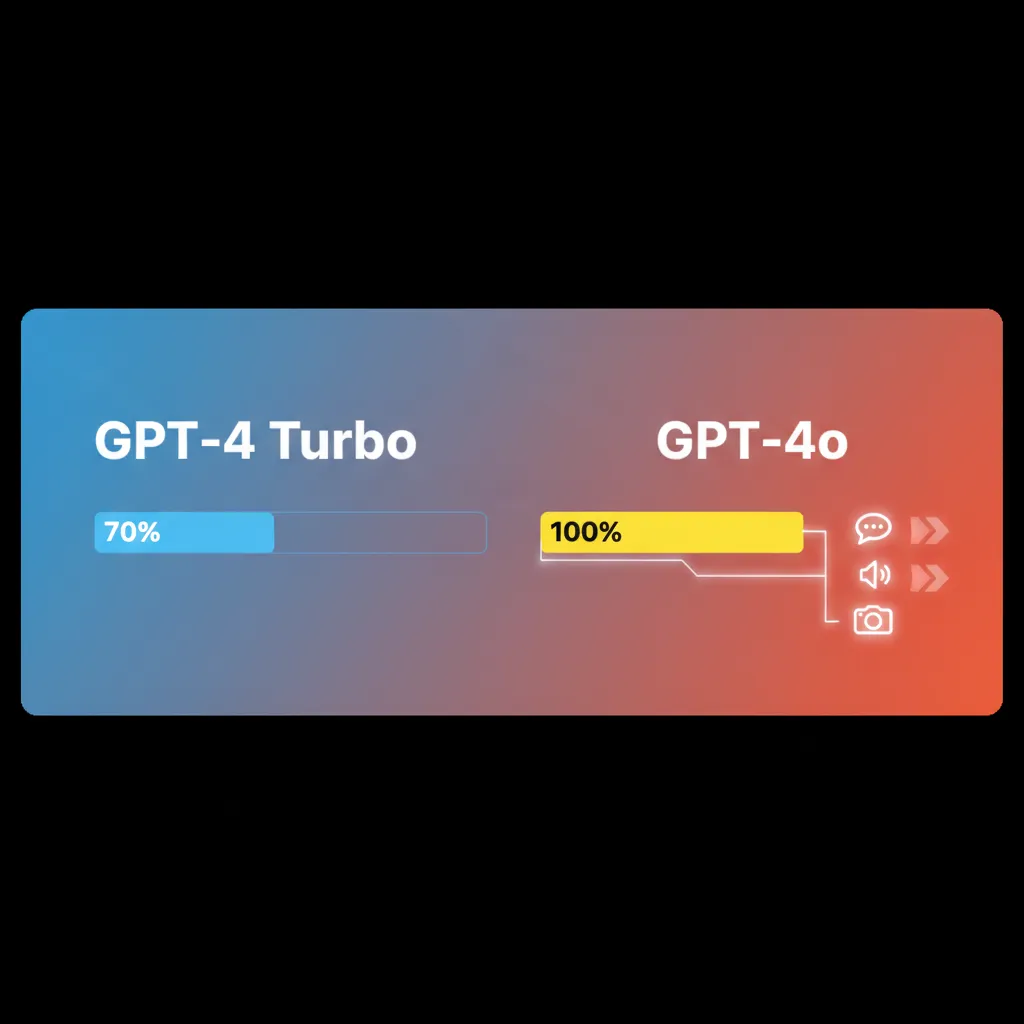

| Model Feature | GPT-4 Turbo (Previous Best) | GPT-4o (The Omni Model) |
|---|---|---|
| Response Time (Audio) | Several seconds (due to chaining) | As fast as 232 milliseconds |
| Input Modalities | Text (native), Audio/Vision (chained) | Text, Audio, Vision (native) |
| Intelligence/Benchmark | High | GPT-4 level intelligence, often surpassing |
| API Cost | Higher | 50% Cheaper |
| Free Access | Limited/None | Significant free access provided |

This drop in audio latency from GPT-4 to GPT-4o transforms the potential of the AI voice assistant. AI moves from a powerful tool to a functional partner in dynamic, fast-moving settings.
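To put the audio row in numbers, here is a small worked calculation. The 320 ms average comes from the figures quoted above. The 5.4 second average for the earlier GPT-4 voice pipeline is an assumption taken from OpenAI’s launch material, since this article only says “several seconds”, so treat the result as a rough estimate.

```python
# Average voice latencies in seconds.
# 5.4 s is assumed from OpenAI's launch material for the earlier GPT-4
# voice pipeline; this article itself only says "several seconds".
CHAINED_GPT4_AVG_S = 5.4
# 0.320 s is the GPT-4o average quoted in this article.
GPT4O_AVG_S = 0.320


def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times faster the new latency is."""
    return old_seconds / new_seconds


ratio = speedup(CHAINED_GPT4_AVG_S, GPT4O_AVG_S)
print(f"GPT-4o responds roughly {ratio:.0f}x faster on average")
```

Under those assumptions the gap is about 17x, which is the difference between waiting for an answer and holding a conversation.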

Unpacking GPT-4o Features and Capabilities

GPT-4o’s capabilities range from dramatically better voice interaction to much stronger vision processing. The model is designed to work across text, audio, and images.

Real-Time Conversational AI: The Human Touch

The most viral aspects of the GPT-4o demo were its voice capabilities. The model can be interrupted mid-sentence, shift its emotional tone (sounding happy, dramatic, or even singing), and engage in highly nuanced, low-latency dialogue.

This marks a significant milestone in conversational AI. Imagine an AI tutor or assistant that can hear the frustration in your voice and adjust its teaching style immediately.

“The ability to sustain a high-speed, emotionally aware conversation is what elevates GPT-4o beyond a mere chatbot into something approaching a digital colleague.”

Advanced Vision Capabilities and Live Interaction

GPT-4o is exceptional at processing visual information instantaneously. This goes far beyond simply describing a static image. It can understand live video feeds, complex charts, handwritten notes, and real-world scenarios.

One powerful demonstration showed the AI using a smartphone camera to look at a foreign language menu and translate it instantly while explaining the context of the dishes.

AI with vision capabilities on this level means:

- Real-Time Translation: Acting as a universal translator during live conversations.

- Data Interpretation: Analyzing a complex dashboard or financial chart on your screen and providing instant insights.

- Educational Assistance: Guiding you through solving a physical problem, like fixing a leaky faucet, by looking through your camera and providing step-by-step instructions.

Superior Text Generation and Data Analysis

While the voice and vision updates stole the spotlight, GPT-4o also maintains, and in many benchmarks exceeds, the textual intelligence of GPT-4 Turbo. This makes it an indispensable tool for professionals.

- Improved Coding: It handles complex, multi-file programming tasks with greater coherence.

- Enhanced Creativity: Its ability to combine visual and textual context leads to more imaginative content generation.

- Stronger Data Analysis: It can ingest large datasets and PDFs, then generate visual summaries or detailed reports faster than before. This is particularly valuable for finance, marketing, and scientific research.

GPT-4o vs GPT-4: A Clear Comparison of Power

When deciding whether to switch to the new model, it helps to understand the specific differences between the previous flagship, GPT-4, and GPT-4o.

| Feature Area | GPT-4 / GPT-4 Turbo | GPT-4o | Winner |
|---|---|---|---|
| Speed (Text) | Baseline | About 2x faster via the API | GPT-4o |
| Speed (Audio/Vision) | Slow (Seconds) | Human-level (Milliseconds) | GPT-4o |
| Modality Handling | Separate models chained | Single, native omni model | GPT-4o |
| Intelligence | Benchmark leading | Matches or slightly exceeds GPT-4 Turbo | GPT-4o |
| API Cost | Standard high cost | 50% less expensive | GPT-4o |
| Accessibility (Free Users) | Limited/Older models | GPT-4o available with usage caps | GPT-4o |
| Context Window and Rate Limits | 128K-token context (GPT-4 Turbo) | Same 128K-token context, higher rate limits | GPT-4o |

The primary takeaway from the AI model comparison is that GPT-4o delivers the intelligence of GPT-4 at the speed of a lighter, faster model, all while cutting costs for developers and drastically improving the user experience for everyone else. It represents a superior price-to-performance ratio across the board.

How to Get GPT-4o: Access and Pricing

One of the most notable announcements in the GPT-4o rollout was OpenAI’s commitment to democratizing access to its most powerful model.

Is GPT-4o Free? Democratizing the Best AI

Yes, GPT-4o is being made available to all free ChatGPT users, with usage limits.

In the past, free users were often relegated to using the older, less powerful GPT-3.5 model. Now, free users benefit from:

- GPT-4o text and image capabilities: Free users can now utilize the core text and static vision processing power of the omni model.

- Higher limits: While free users still have usage caps, those limits are higher than the previous caps for GPT-4 access.

This move ensures that almost anyone can experience the core benefits of GPT-4o, leveling the playing field for students, small business owners, and casual users worldwide. If you are wondering how to get GPT-4o, simply log into your existing ChatGPT account—the option will be available.

Access for Plus and Enterprise Users

ChatGPT Plus ($20/month) and Enterprise users receive the full, unfettered power of GPT-4o.

For Plus subscribers, this means:

- Significantly Higher Usage Limits: Access the model much more frequently than free users.

- Priority Access to New Features: Being the first to test the full suite of real-time audio and vision features, including the new desktop app functionality.

- Full Data Analysis and File Uploads: Unrestricted use of advanced tools like Code Interpreter and complex data handling.

The GPT-4o API: A Game Changer for Developers

For businesses building applications on top of OpenAI’s infrastructure, the GPT-4o API is perhaps the most critical development.

OpenAI announced two key changes:

- 50% Cost Reduction: GPT-4o is half the price of GPT-4 Turbo via the API, making it financially viable for small and medium-sized businesses (SMBs) to deploy highly intelligent AI.

- Double the Speed: The increase in speed dramatically reduces processing time, which translates directly into lower operating costs and better user experiences for end-users of AI applications.

This cost efficiency coupled with superior performance means developers can now integrate highly complex, multimodal features—like real-time translation or live visual coding help—into their products without breaking the bank.
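To see what the 50% reduction means for a budget, here is a minimal cost estimate. The per-token prices are launch-time list prices included as assumptions (they are not stated in this article and may have changed), and the monthly workload is hypothetical.

```python
# USD per 1 million tokens as (input, output). Launch-time list prices,
# included as assumptions; check current pricing before relying on them.
PRICES = {
    "gpt-4-turbo": (10.00, 30.00),
    "gpt-4o": (5.00, 15.00),
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate spend in USD for a given monthly token volume."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 50M input tokens and 10M output tokens per month.
turbo = monthly_cost("gpt-4-turbo", 50_000_000, 10_000_000)
omni = monthly_cost("gpt-4o", 50_000_000, 10_000_000)
print(f"GPT-4 Turbo: ${turbo:,.2f}  GPT-4o: ${omni:,.2f}  saved: ${turbo - omni:,.2f}")
```

For this example workload the bill falls from $800 to $400 a month. The saving scales linearly with token volume, so the same ratio holds for larger deployments.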

Practical Use Cases: Where GPT-4o Shines

GPT-4o’s capabilities are not abstract; they translate into immediate, practical applications across various industries.

1. Revolutionizing Customer Experience and Support

The ability of GPT-4o to handle real-time audio with emotional context is transformative for call centers and digital support.

Traditional chatbots often frustrate customers because they can’t gauge nuance. A GPT-4o-powered AI voice assistant can identify frustration, anxiety, or urgency in a caller’s tone and prioritize the response or escalate the issue based on that emotional input, which can meaningfully improve customer satisfaction.

2. Education and Personalized Learning

GPT-4o’s native multimodal ability allows for personalized tutoring on a new level. A student struggling with a geometry problem could point their phone camera at a textbook diagram. The AI could then immediately interpret the diagram, explain the concept vocally, and watch as the student draws the solution, correcting mistakes in real-time based on vision input. This is the future of AI models in education.

3. Boosting Developer Productivity

For programmers, the speed and accuracy of the GPT-4o API are paramount. Imagine a developer running into a bug:

- They record their screen showing the error.

- They speak to the AI, explaining what they tried.

- GPT-4o processes the visual code, the error message, and the spoken context simultaneously, offering a refined, ready-to-implement fix in seconds.

Faster code generation, debugging, and data analysis make GPT-4o a strong developer copilot.
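A simplified version of that debugging workflow can be sketched with the OpenAI Python SDK. This sketch makes several assumptions: it sends a single PNG screenshot and a typed description rather than a screen recording and speech, the helper names `build_vision_request` and `ask_gpt4o` are hypothetical, and the live call needs the `openai` package plus an `OPENAI_API_KEY` environment variable.

```python
import base64


def build_vision_request(image_bytes: bytes, question: str) -> dict:
    """Build Chat Completions arguments that pair a screenshot with a question."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        # Assumes the screenshot is a PNG, sent inline as a data URL.
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


def ask_gpt4o(image_bytes: bytes, question: str) -> str:
    """Send the request. Needs the `openai` package and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the builder works without it

    client = OpenAI()
    response = client.chat.completions.create(**build_vision_request(image_bytes, question))
    return response.choices[0].message.content
```

A call might look like `ask_gpt4o(open("error.png", "rb").read(), "I already tried clearing the cache. What is causing this error?")`. Keeping the request builder separate from the network call makes the payload easy to inspect and test without spending tokens.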

4. Creative and Artistic Collaboration

Creative professionals can leverage GPT-4o’s vision and textual prowess for rapid ideation. A graphic designer could sketch a rough concept, show it to the AI, and describe the desired tone and color palette. GPT-4o could then instantly generate detailed prompt iterations for image generation tools or suggest marketing copy that matches the visual mood.

The Future of AI Models: Setting a New Standard

GPT-4o is more than just a model; it is an inflection point signaling the arrival of genuinely seamless AI interaction. The introduction of the omni model concept challenges the traditional separation of AI tasks (vision, audio, text) and moves us closer to the vision of a holistic, general-purpose AI.

This capability to perceive, reason, and respond across multiple senses simultaneously is what many researchers believe is the necessary precursor to true Artificial General Intelligence (AGI). By making such advanced capabilities available widely—and even for free—OpenAI is accelerating the public’s engagement with the future of AI models.

The ethical implications, however, must be continually addressed. A model that can detect and respond to human emotions with such speed raises questions about manipulation and digital trust. OpenAI has stressed that safety guardrails, particularly around the audio modalities, have been significantly tightened to prevent misuse.

Conclusion: The Arrival of Truly Conversational AI

The release of GPT-4o represents one of the most significant steps forward in consumer-facing AI since the original launch of ChatGPT. By unifying text, audio, and vision into a single, high-speed, natively multimodal system, OpenAI has not only pulled ahead in AI model comparisons but has also delivered a platform that feels fundamentally different to interact with.

Whether you are a professional using the cheaper, faster GPT-4o API, a ChatGPT Plus user with higher limits and early access to new features, or a free user gaining access to GPT-4-class intelligence for the first time, the benefits of GPT-4o are clear. It is not just an iteration; it is a step change in speed, integration, and accessibility that makes real-time AI conversation a reality and makes GPT-4o a leading contender for the best AI model of 2024.

It is time to log in, ask your questions, and experience the future of AI.

FAQs: GPT-4o Explained

Q1. What is GPT-4o?

GPT-4o is OpenAI’s flagship large language model (LLM) announced in May 2024, where the “o” stands for “omni.” It is designed to natively process and generate text, audio, and vision with a single neural network, resulting in near-human response times in voice conversations and strong performance across all three modalities.

Q2. Is GPT-4o free to use, and how do I access it?

Yes, OpenAI is making GPT-4o available to all ChatGPT users. Free users get the model’s core text and vision capabilities with usage limits. Paid ChatGPT Plus and Enterprise users receive substantially higher capacity and priority access to the real-time audio and vision features. To access it, simply log into ChatGPT, where it is the default high-tier model.

Q3. What is the biggest difference between GPT-4o and GPT-4?

The biggest difference is speed and native multimodality. The earlier GPT-4 voice experience chained three separate models (speech-to-text, LLM, text-to-speech), leading to delays. GPT-4o handles text, audio, and vision natively in one model, with audio responses averaging about 320 milliseconds and arriving in as little as 232 milliseconds.

Q4. What does the “omni model” mean in the context of GPT-4o?

The term omni model means the model was trained on a dataset containing all three modalities (text, audio, vision) mixed together, allowing it to understand, interpret, and generate outputs across all formats natively. This holistic approach ensures consistency and speed across different input types.

Q5. Can GPT-4o truly detect emotion in conversation?

Yes. One of the key GPT-4o features is its ability to interpret the tone and emotional state in a user’s voice during real-time AI conversation. By listening to the user’s pitch, pace, and vocal inflections, the model can adjust its response, tone, and pacing to be more empathetic or appropriately responsive to the user’s mood.

Q6. How does GPT-4o impact developers using the API?

The GPT-4o API is a massive win for developers. OpenAI has priced the GPT-4o API at 50% less than GPT-4 Turbo while offering double the speed. This unprecedented combination of lower cost and higher performance drastically reduces operating expenses and enables developers to build new, complex, real-time applications using this multimodal AI model.

Q7. When was GPT-4o officially announced?

GPT-4o was officially announced at the OpenAI Spring Update event on May 13, 2024. Rollout began immediately for developers and extended gradually to free and paid ChatGPT users over the following weeks.