What is GPT-4o? The Next Era of AI is Here

Introduction: The Paradigm Shift of the Omni Model AI

For years, we’ve interacted with AI through a segmented lens: we type text into a chatbot, upload an image for analysis, or speak into a voice assistant that pauses and processes. These systems, while impressive, often felt like a series of disjointed steps chained together.

That era is officially over.

The OpenAI Spring Update heralded the arrival of GPT-4o, a breakthrough model that marks a seismic shift in how we define and interact with artificial intelligence. The “o” in GPT-4o stands for “omni,” signifying its native integration of text, audio, and vision capabilities into a single, unified neural network. This isn’t just an iteration; it’s next-generation AI.

GPT-4o is a stunning leap toward truly seamless, real-time AI interaction. It can perceive the world, pick up on emotional nuance, and respond in near real time, making it feel less like a tool and more like a capable, intelligent colleague. If you’ve been wondering what GPT-4o is, this comprehensive guide unpacks its features, technical architecture, and applications, and explains why it represents the future of AI. We’ll show you why OpenAI’s new model is transforming everything from education to enterprise development and setting a new bar for human-level AI experiences.

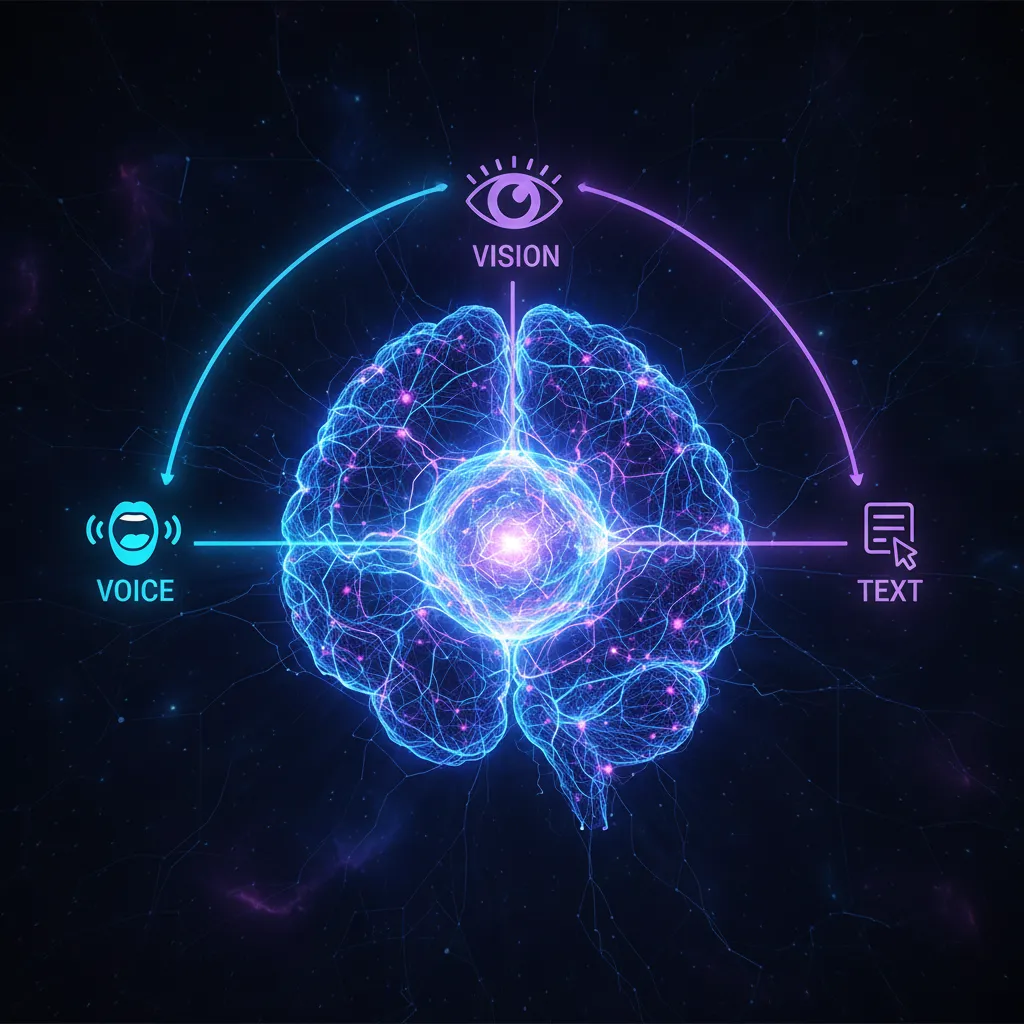

Unpacking the “O”: The Architecture Behind the Omni Model

To truly appreciate what GPT-4o is, we must first understand the fundamental architectural change it introduces. Previous voice experiences, like the original ChatGPT Voice Mode, relied on a complex pipeline: one specialized model transcribed the audio into text, GPT-4 (or GPT-3.5) processed that text, and a third model converted the resulting text back into audio. This “daisy chain” approach inevitably introduced latency and emotional flatness, and it discarded context such as tone of voice, multiple speakers, and background sounds before the main model ever saw the input.

GPT-4o shatters this limitation.

This omni model AI was trained end-to-end across text, vision, and audio. It is a single model that understands and generates all three modalities simultaneously. This is the crucial distinction that unlocks its phenomenal speed and emotional sensitivity.

A Single, Native Multimodal Architecture

In the world of AI, a native architecture means that the model doesn’t need to translate between separate systems. When a user speaks, GPT-4o processes the audio directly, interpreting the tone, pitch, and speed alongside the linguistic content. When a user shows it an image or a graph, the visual data flows straight into the same network that handles the textual reasoning.
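A deliberately simplified toy model can make the difference concrete. It treats a spoken turn as words plus two paralinguistic cues and shows which of them reach the reasoning stage in each design. The `SpokenTurn` class, its fields, and both functions are hypothetical illustrations; they are not how GPT-4o actually represents audio internally.

```python
from dataclasses import dataclass

# Hypothetical toy representation of one spoken turn: the words plus two
# paralinguistic cues. Real audio models work on learned representations,
# not labelled fields like these.
@dataclass(frozen=True)
class SpokenTurn:
    words: str
    tone: str   # e.g. "frustrated", "excited"
    pace: str   # e.g. "fast", "slow"

def pipeline_view(turn: SpokenTurn) -> dict:
    """Daisy-chain design: the transcription stage passes on text only,
    so the downstream language model never sees tone or pace."""
    transcript = turn.words            # stage 1: speech-to-text keeps the words
    return {"words": transcript}       # stage 2 receives nothing else

def native_view(turn: SpokenTurn) -> dict:
    """Single-network design: the same model receives the whole signal."""
    return {"words": turn.words, "tone": turn.tone, "pace": turn.pace}

turn = SpokenTurn(words="Sure, that's fine.", tone="frustrated", pace="fast")
print(sorted(pipeline_view(turn)))  # ['words']
print(sorted(native_view(turn)))    # ['pace', 'tone', 'words']
```

In the pipeline version, a sarcastic “Sure, that’s fine.” and a sincere one become indistinguishable before the model ever reasons about them.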

The result of this unified design is profound:

- Reduced Latency: GPT-4o can respond to audio inputs in as little as 232 milliseconds (ms), with an average of 320 ms, which is comparable to human response times in conversation. This is a massive improvement over the 5.4-second average of the GPT-4 Voice Mode pipeline.

- Emotional Intelligence: Because the model directly processes the audio characteristics, it can detect emotions like surprise, fatigue, or frustration, and adjust its output tone and content accordingly. This sophisticated form of AI emotional intelligence is critical for developing truly helpful conversational AI.

- Coherent Multitasking: GPT-4o can seamlessly switch between tasks. It can listen to you, watch what you are doing on your screen (via a shared view), analyze the image, and discuss it instantly, all without noticeable lag.
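To put the latency figures above in proportion, the short calculation below divides the old pipeline average by GPT-4o’s reported response times. The constant names are illustrative; the numbers are the launch figures quoted in this article.

```python
# Latency figures quoted above, converted to seconds.
GPT4_VOICE_PIPELINE_AVG = 5.4   # GPT-4 Voice Mode pipeline, average
GPT4O_AUDIO_AVG = 0.320         # GPT-4o audio response, average
GPT4O_AUDIO_MIN = 0.232         # GPT-4o audio response, fastest

def speedup(old_s: float, new_s: float) -> float:
    """How many times shorter the new response time is than the old one."""
    return old_s / new_s

print(f"average vs average: {speedup(GPT4_VOICE_PIPELINE_AVG, GPT4O_AUDIO_AVG):.1f}x")  # 16.9x
print(f"average vs fastest: {speedup(GPT4_VOICE_PIPELINE_AVG, GPT4O_AUDIO_MIN):.1f}x")  # 23.3x
```

Comparing average to average is the fairer reading: a typical GPT-4o voice reply arrives roughly 17 times sooner than a typical reply from the old pipeline.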

The Breakthrough in Speed and Real-Time Interaction

The latency reduction is perhaps the most immediately impactful GPT-4o feature. In a world where even a half-second delay can break the feeling of a natural conversation, achieving near-instantaneous responses transforms the user experience. This speed enables capabilities that were previously relegated to science fiction.

Imagine holding a phone conversation with someone speaking a foreign language. With GPT-4o, the model acts as a direct, near real-time interpreter.


Real-time translation is a potent demonstration of what low latency makes possible. It doesn’t just translate words; it can carry the speaker’s tone and intent across languages, paving the way for truly global, instantaneous communication. The practical applications for travel, business, and accessibility are immense.
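For developers, a text-only approximation of the interpreter scenario can be set up with the Chat Completions message format: a system message fixes the task, and each user utterance is then translated in turn. The helper below only builds the request body. The `build_interpreter_request` name and the prompt wording are hypothetical choices for this sketch, not an official recipe, and the live voice interpretation shown in OpenAI’s demos relies on audio features this text sketch does not cover.

```python
import json

def build_interpreter_request(source_lang: str, target_lang: str, utterance: str) -> dict:
    """Build a Chat Completions request body asking gpt-4o to act as an interpreter.

    The system prompt wording is an illustrative assumption.
    """
    system_prompt = (
        f"You are an interpreter. Translate everything the user says from "
        f"{source_lang} into {target_lang}, preserving tone and intent. "
        f"Reply with the translation only."
    )
    return {
        "model": "gpt-4o",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": utterance},
        ],
    }

body = build_interpreter_request("Italian", "English", "Ciao, come stai?")
print(json.dumps(body, indent=2, ensure_ascii=False))
```

The same `model` and `messages` values can be passed to `client.chat.completions.create(...)` in the official OpenAI Python SDK, or posted as JSON to the `/v1/chat/completions` endpoint.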


GPT-4o Features: Beyond Text Generation

While GPT-4 remains a benchmark for pure text generation, GPT-4o excels in the holistic processing of all media types. Its features go far beyond typical text-based chat, making it a strong voice assistant and visual analyst as well.

Real-Time Emotional Voice Interaction

The voice capabilities of GPT-4o are arguably its most viral and revolutionary aspect showcased during the OpenAI announcement. The model can:

- Respond with Character: It can shift its output tone from theatrical and dramatic to calm and instructional, based on user request or perceived emotional need.

- Handle Interruptions: Unlike traditional assistants, GPT-4o is adept at handling mid-sentence interruptions without losing context, mirroring natural human dialogue flow.

- Tutor and Coach: In voice mode, it can listen to a student struggling with a problem, see the work they are doing (if granted vision access), and guide them through the process using supportive and context-aware language.

This level of natural, conversational AI interaction fundamentally changes expectations for digital assistants. We are moving from giving commands to having collaborative dialogues.
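Applications built on the model still have to manage conversation state when a user cuts in. One client-side approach, sketched below, is to trim the assistant’s last turn to the portion that was actually spoken aloud before recording the interruption, so the next reply builds on what the user really heard. The `Conversation` class and its `interrupt` method are hypothetical, and the sketch assumes the app can tell how many characters of a reply were played before the user spoke.

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Hypothetical client-side transcript for a voice session."""
    turns: list = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append({"role": role, "content": text})

    def interrupt(self, chars_spoken: int, user_text: str) -> None:
        """Handle barge-in: keep only the part of the last assistant turn that
        was actually spoken, then record what the user said."""
        if self.turns and self.turns[-1]["role"] == "assistant":
            last = self.turns[-1]
            last["content"] = last["content"][:chars_spoken]
        self.add("user", user_text)

convo = Conversation()
convo.add("user", "Tell me a bedtime story about robots.")
convo.add("assistant", "Once upon a time, a robot named Byte lived on the moon and loved to sing.")
convo.interrupt(chars_spoken=36, user_text="Wait, make it more dramatic!")
for turn in convo.turns:
    print(turn)
```

Without the trim, the history would claim the assistant had already finished the story, and the follow-up could skip details the user never heard.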

Advanced Computer Vision Applications

The AI vision capabilities of GPT-4o dramatically expand its utility beyond text and voice. OpenAI reports that GPT-4o is faster than its predecessors and notably better at visual understanding.

Key Vision Use Cases:

- Data Analysis and Interpretation: Show GPT-4o a complex financial graph or a screenshot of a complicated spreadsheet. It can quickly summarize trends, point out anomalies, and even draft a report explaining the data, all in real time.

- Code and Design Review: Developers can share a screenshot of their code or a user interface design, and GPT-4o can provide instant feedback on errors, optimization possibilities, and accessibility issues. It functions as an advanced AI assistant for developers.

- World Interpretation: If you point your phone camera at a physical object, the model can identify it, explain its function, and even suggest usage instructions or where to buy it. This turns the camera into a dynamic knowledge portal.
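For the API side of these use cases, an image travels in the same user message as the question, as an `image_url` content part. The sketch below embeds raw image bytes as a base64 data URL; `build_vision_request` is a hypothetical helper name, and the placeholder bytes stand in for a real screenshot read from disk.

```python
import base64
import json

def build_vision_request(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a Chat Completions request body pairing a question with an image.

    The image is embedded as a base64 data URL inside an "image_url" content part.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
                ],
            }
        ],
    }

# Placeholder bytes; in practice use something like open("chart.png", "rb").read()
fake_png = b"\x89PNG\r\n\x1a\n"
body = build_vision_request("Summarize the trend in this chart.", fake_png)
print([part["type"] for part in body["messages"][0]["content"]])  # ['text', 'image_url']
print(json.dumps(body)[:80])
```

A publicly reachable `https://` image URL can be used in place of the data URL when the image is already hosted online.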

Enhanced Performance for Developers

For those interacting with the GPT-4o API, the benefits are quantifiable and significant:

| Metric | GPT-4 Turbo | GPT-4o (Text/Vision) | Improvement |
|---|---|---|---|
| Speed | Baseline | 2x faster | Dramatically increased throughput |
| Cost (input) | $10.00 / 1M tokens | $5.00 / 1M tokens | 50% reduction |
| Cost (output) | $30.00 / 1M tokens | $15.00 / 1M tokens | 50% reduction |
| Rate limits | Baseline | 5x higher | Better scalability for enterprise |

This dual advantage of faster responses at half the price is a massive catalyst for innovation. Startups and large enterprises alike can now embed sophisticated natural language processing and multimodal AI into their products more cost-effectively, fueling rapid adoption of the new model.
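To see what the per-token prices in the table mean for a real budget, the sketch below costs out one workload on both models. The 20M-input / 5M-output monthly volume is a hypothetical example, and the prices are the launch list prices from the table; OpenAI’s pricing may have changed since, so check the current price list before budgeting.

```python
# Launch list prices from the table, in US dollars per 1M tokens.
PRICES = {
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}

def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of a workload: tokens times the per-million-token price, per direction."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

monthly_in, monthly_out = 20_000_000, 5_000_000  # hypothetical monthly workload
old = cost_usd("gpt-4-turbo", monthly_in, monthly_out)
new = cost_usd("gpt-4o", monthly_in, monthly_out)
print(f"GPT-4 Turbo: ${old:,.2f}  GPT-4o: ${new:,.2f}  saving: {1 - new / old:.0%}")
# GPT-4 Turbo: $350.00  GPT-4o: $175.00  saving: 50%
```

Because both the input and output prices are halved, the saving is 50% for any mix of input and output tokens.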


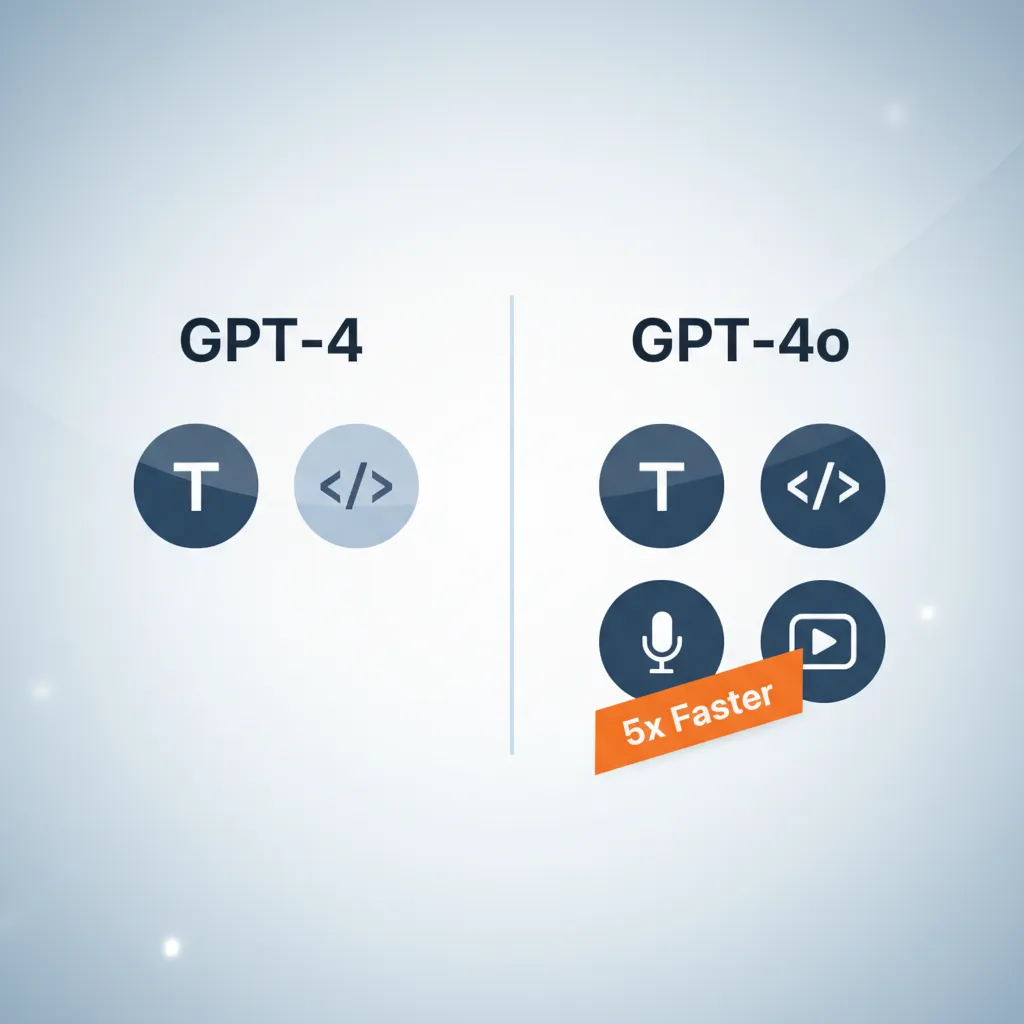

GPT-4o vs GPT-4: The Generational Leap

Understanding the leap from GPT-4 to GPT-4o is crucial for appreciating the significance of this release. While GPT-4 remains highly capable, especially for intensive, long-form reasoning, GPT-4o is optimized for speed, accessibility, and integrated multimodal interaction.

The most important distinction is the handling of non-text inputs:

GPT-4 (and earlier voice modes):

- Voice interaction required chaining separate transcription, reasoning, and speech-synthesis models.

- High latency, leading to unnatural, staggered conversations.

- Limited ability to interpret subtle tones or emotional context accurately during input.

GPT-4o (the Omni Model):

- Unified model for native, simultaneous processing of text, voice, and vision.

- Near human-level latency in voice conversations (as low as 232 ms, averaging 320 ms).

- Exceptional emotional and visual context interpretation.

- Better multilingual capabilities.

This comparison confirms that GPT-4o is a significant step toward truly integrated intelligent assistants.

Accessibility and Availability: Is GPT-4o Free?

One of the most user-friendly aspects of ChatGPT’s new model is its wide accessibility.

Yes, a major takeaway from the OpenAI Spring Update is that GPT-4o is being rolled out to all users, including those on the free tier of ChatGPT.

How to use GPT-4o:

- Free Users: Have access to the model for text and image tasks, though potentially with usage caps and fewer advanced features than paid tiers.

- Plus/Team/Enterprise Users: Receive expanded access, higher rate limits, and priority use of the new voice and vision features as they roll out fully across platforms.

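- API Users: At launch, developers could access GPT-4o in the API as a text and vision model at half the price of GPT-4 Turbo, with the new audio and video capabilities going first to a small group of trusted partners.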

This democratization of advanced AI capabilities ensures that a broader audience can experience the benefits of GPT-4o, driving faster iteration and feedback across the ecosystem.

Practical Applications: Where GPT-4o Shines

The sheer flexibility and speed of GPT-4o mean its real-world utility spans almost every sector. The GPT-4o demo presentations revealed applications that moved well beyond basic Q&A.

Education and Tutoring: The Personalized AI Mentor

Perhaps no sector benefits more immediately than education. GPT-4o can act as a tireless, patient, and highly personalized tutor.

Imagine a high school student struggling with geometry. They can hold up their homework sheet to the camera, and the AI assistant will not just give the answer, but identify where the student went wrong by analyzing the handwritten steps visually. Using voice, the model can then guide them through the correction process, encouraging critical thinking rather than simple memorization.


This level of detailed, multi-sensory guidance creates a powerful learning environment.

Revolutionizing Customer Service and Accessibility

The low latency and superior emotional understanding make GPT-4o an ideal foundation for next-generation customer service and accessibility tools.

- Elevated Customer Support: AI agents can listen to a customer’s voice, detect frustration or urgency, and immediately escalate or tailor their response with appropriate empathy and speed.

- Accessibility: For users with visual or motor impairments, the ability of GPT-4o to analyze live visual feeds (like reading a medicine label or navigating a complex control panel) and verbally guide the user in real-time opens up new pathways to independence.

- Global Commerce: Instant, high-quality real-time translation breaks down language barriers in business and interpersonal settings, accelerating global connectivity.


Creative Workflows and Data Analysis

For creative professionals, GPT-4o enhances brainstorming and prototyping:

- Design Feedback: A graphic designer can upload a mockup, ask for feedback on color contrast and user flow, and receive instant, visually-grounded suggestions.

- Storyboarding: A writer can speak a scene description, and the AI can generate not just the script, but visually descriptive storyboard suggestions to accompany it.

- Complex Data Extraction: Researchers can input PDFs, handwritten notes, charts, and spoken hypotheses into a single prompt, allowing the AI to synthesize heterogeneous data points into a cohesive report faster than ever before.
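In API terms, “a single prompt” with mixed sources means one user message whose content is a list of parts. The sketch below assembles text notes and images into such a list. `build_mixed_content` is a hypothetical helper, and it deliberately handles only text and PNG images: under this sketch’s assumptions, PDFs and spoken hypotheses would first be converted (text extracted, pages rendered as images, audio transcribed), which is not necessarily how ChatGPT itself handles uploads.

```python
import base64

def build_mixed_content(items: list) -> list:
    """Turn (kind, payload) pairs into Chat Completions content parts.

    kind "text":  payload is a string (typed notes, a transcribed hypothesis).
    kind "image": payload is PNG bytes (a chart, a photo of handwritten notes).
    """
    parts = []
    for kind, payload in items:
        if kind == "text":
            parts.append({"type": "text", "text": payload})
        elif kind == "image":
            encoded = base64.b64encode(payload).decode("ascii")
            parts.append({"type": "image_url",
                          "image_url": {"url": f"data:image/png;base64,{encoded}"}})
        else:
            raise ValueError(f"unsupported input kind: {kind!r}")
    return parts

request = {
    "model": "gpt-4o",
    "messages": [{"role": "user", "content": build_mixed_content([
        ("text", "Synthesize these sources into a one-page report."),
        ("image", b"\x89PNG placeholder"),  # stands in for a chart screenshot
        ("text", "Hypothesis (transcribed from a voice memo): demand peaks in Q3."),
    ])}],
}
print([part["type"] for part in request["messages"][0]["content"]])  # ['text', 'image_url', 'text']
```

Keeping every source in one message lets the model cross-reference them directly instead of summarizing each in isolation.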

The Broader Implications: Navigating the Future of AI

The arrival of GPT-4o underscores several critical tech trends of 2024 and beyond, particularly the accelerating move toward integrated, human-like interfaces.

Shifting Towards Human-Level Interaction

The seamless, low-latency, emotionally aware performance of GPT-4o brings us closer than ever to the ideal of human-level AI. The technology is designed to fade into the background, making the interaction feel natural and intuitive.

This shift has profound implications for how we define productivity and collaboration.

- AI as a True Co-Pilot: Instead of merely automating repetitive tasks, the new model acts as a cognitive partner, one that can observe, reason, and communicate with the subtlety we expect from another human.

- Evolving User Interfaces: The reliance on cumbersome graphical user interfaces (GUIs) may decline as users transition to natural language (voice and vision) as the primary input method.

The Critical Need for Ethical Governance

As models gain emotional intelligence and near-human speed, the stakes for ethical deployment rise sharply. This new era of multimodal AI requires robust safety measures.

- Bias in Vision and Voice: Just as text models can harbor linguistic biases, GPT-4o’s training data might introduce biases in interpreting visual cues or voice tones, potentially leading to unfair or incorrect assessments.

- Deepfakes and Authenticity: The model’s stunning ability to mimic human speech and tone requires strong safeguards against misuse in generating deceptive content.

- Data Privacy in Real-Time: Since the model can process live video feeds and ongoing conversations, transparency regarding data usage and retention is paramount.

The industry must continue to prioritize responsible development alongside rapid innovation, ensuring that this next-generation AI is beneficial for all.

The Catalyst for the Future of AI Ecosystem

GPT-4o is a powerful catalyst. Its lower API cost and performance boost mean that specialized AI services will proliferate rapidly. We will see an explosion of niche applications built on its core capabilities, from hyper-personalized health monitors that analyze subtle physical changes (via vision) and correlate them with reported mood (via voice) to financial tools that interpret complex market charts and explain the implications in plain language.

The future of AI is collaborative, and GPT-4o is the platform upon which countless new, integrated experiences will be built. This is not just about a single model; it’s about empowering the entire ecosystem to reach a new level of innovation.


Conclusion: Entering the Omni Era

What is GPT-4o? It is the definitive answer to the call for a truly unified, real-time artificial intelligence. By natively combining text, audio, and vision into a single, highly efficient architecture, OpenAI has not just created a better chatbot; they have introduced an omni model AI that fundamentally redefines the relationship between humans and machines.

The shift from slow, chained systems to a rapid, emotionally intelligent conversational AI assistant unlocks unprecedented potential across every field, making tasks previously impossible or too cumbersome suddenly feasible. Whether you are using the free tier of ChatGPT to translate a conversation abroad, or a developer leveraging the dramatically reduced GPT-4o API costs to build the next great AI assistant, the impact of this model is undeniable.

OpenAI’s new model has cemented its place among the defining tech trends of 2024, pushing us squarely into the age of integrated, human-level AI interaction. The era of the fragmented interface is over; the omni model is here, and the possibilities for seamless human-AI collaboration are endless. Start experimenting with GPT-4o today, and watch the future of AI unfold.

FAQs: Your Most Pressing Questions About GPT-4o

Q1. What is the “o” in GPT-4o?

The “o” in GPT-4o stands for “omni,” signifying that the model is natively multimodal, trained across text, vision, and audio simultaneously. Unlike previous systems that chained different models together for different modalities, GPT-4o processes and generates all three using a single, unified neural network, leading to reduced latency and superior coherence.

Q2. Is GPT-4o a completely new model, or just an update to GPT-4?

GPT-4o is a completely new model. While it achieves GPT-4 Turbo-level performance on text and coding benchmarks, its key innovation is the native integration of voice and vision. It is specifically designed for speed and real-time interaction, positioning it as the next generation of AI beyond earlier iterations of GPT-4.

Q3. How does GPT-4o handle real-time voice conversations compared to earlier voice assistants?

GPT-4o handles voice with significantly lower latency, averaging 320 milliseconds per response, which is similar to human response times in conversation. Crucially, it processes the raw audio directly, allowing it to pick up the speaker’s tone and emotion and adjust its response accordingly, making it a much more natural and effective AI voice assistant.

Q4. Is GPT-4o free to use, and how can I access it?

Yes, OpenAI has made GPT-4o widely accessible. It is available to all users on the free tier of ChatGPT for text and image tasks, though paid subscribers (Plus, Team, Enterprise) benefit from higher usage limits and priority access to the fully integrated voice and vision features as they roll out.

Q5. What is the main difference between GPT-4o and GPT-4?

The main difference lies in modality and speed. GPT-4o is a truly multimodal AI designed to process text, audio, and vision within a single architecture, resulting in faster responses and stronger performance on non-text tasks. GPT-4 accepts text and images, but its voice capabilities relied on a slower chain of separate models. GPT-4o also matches GPT-4 Turbo’s performance on English text and code at half the cost via the GPT-4o API.

Q6. Can GPT-4o analyze images and video?

Yes. GPT-4o has highly advanced AI vision capabilities. It can analyze complex still images, graphs, documents, and code screenshots. Its real-time performance means it can also observe and interpret live video feeds or shared screens to provide instant analysis and interactive guidance, making it useful for computer vision applications like real-time tutoring and debugging.

Q7. What kind of latency improvement did GPT-4o achieve?

GPT-4o achieved a breakthrough in conversational latency. The earlier Voice Mode pipeline, which converted speech to text, ran it through the model, and converted the reply back to speech, averaged 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4. GPT-4o responds to audio in as little as 232 milliseconds, and 320 milliseconds on average, dramatically enhancing the feeling of a natural, real-time AI dialogue.