OpenAI’s Sora: The AI That Creates Video From Text

Introduction: The Tipping Point in Generative AI

For years, generative AI has been revolutionizing how we create text (with models like GPT) and static images (with DALL-E and Midjourney). However, the creation of high-fidelity, coherent, long-form video—a medium that requires mastering space, time, and physics—remained the “holy grail” of artificial intelligence.

Then came OpenAI Sora.

Sora is more than just a new tool; it’s a paradigm shift. Unveiled by OpenAI, the company led by Sam Altman, Sora is a text-to-video AI model capable of generating complex, realistic, and imaginative scenes from simple text prompts. The example videos shown at its initial unveiling stunned creators, policymakers, and technologists alike, displaying clips of a quality, complexity, and length that competing models had not achieved.

This article is a guide to understanding the technology. We will explore what Sora is, delve into how it works at a technical level, discuss its creative potential and its likely impact on animators and filmmakers, and summarize what is known about its release date and how to get access. We’ll also critically examine Sora’s current limitations and the safety concerns surrounding this powerful tool.

The age of truly realistic AI video generation is here, and Sora is leading the charge.

What is Sora AI? Explaining the Generative Video Revolution

OpenAI Sora is a large-scale generative model designed to produce video content solely from natural language prompts. Unlike earlier, stuttering attempts at AI video, Sora can generate scenes up to a minute long while maintaining subject consistency, spatial coherence, and realistic physics.

At its core, Sora aims to do more than render pixels: it attempts to model how the physical world behaves. OpenAI describes video generation models like Sora as “world simulators,” meaning Sora can generate complex environments with multiple characters, specific motions, and accurate background details, all woven into a single, seamless clip.

The release of Sora set a new benchmark for text-to-video AI in 2024, significantly raising the bar for all players in the field. When we talk about generative video AI, we are talking about technology that empowers users to become instant directors, bypassing the logistical and financial hurdles of traditional filmmaking.

The Technology Behind the Magic: How Does Sora Work?

To understand why Sora is so much better than its predecessors, we must look at its underlying architecture—a blend of diffusion models and the transformative power of “patches.”

Sora is essentially a sophisticated diffusion model for video. Diffusion models work by starting with noisy data (static) and iteratively refining it until a clear image or video is generated, guided by the input prompt.
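
The refinement loop described above can be illustrated with a toy sketch. This is not Sora's sampler: a real diffusion model uses a trained network to predict the noise from the current sample and the prompt, whereas the hypothetical `toy_denoise` function below cheats by computing the noise from a known target, and the `rate` parameter is an arbitrary assumption. It only shows the general shape of "start from static, refine step by step."

```python
import random


def toy_denoise(target, steps=50, rate=0.1, seed=0):
    """Toy illustration of iterative refinement; not a real diffusion sampler."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise ("static").
    sample = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # A trained model would predict this noise from the sample and the prompt.
        # Here a stand-in oracle computes it directly from the known target.
        predicted_noise = [s - t for s, t in zip(sample, target)]
        # Remove a small fraction of the predicted noise on every step.
        sample = [s - rate * n for s, n in zip(sample, predicted_noise)]
    return sample


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical "clean" signal standing in for a frame of video.
target = [0.0, 0.25, 0.5, 0.75, 1.0]
for steps in (0, 5, 50):
    error = mean_abs_error(toy_denoise(target, steps=steps), target)
    print(f"{steps:>2} steps: mean error {error:.4f}")
```

Running the loop for more steps leaves less residual noise, which is the intuition behind generating a clean frame or clip from static.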

The key innovation that differentiates Sora is its use of a transformer architecture, adapted from large language models (LLMs), for video. Instead of processing raw pixels, Sora segments videos into smaller units called “patches,” which are similar to the tokens used in GPT for text.

- Patches as Data Units: Sora breaks down videos and images into spatial-temporal patches. This unified representation allows the model to be trained on a vast and diverse range of video durations, resolutions, and aspect ratios. By training on these patches, Sora learns the complex relationships between how objects move and interact over time and space.

- Transformer Scaling: The model then uses a Transformer, the same architecture powering GPT, to operate over these patches. The scalability of the Transformer architecture is what enables Sora to handle videos up to 60 seconds long while maintaining high consistency, something older models struggled to do.
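
To make the idea of spatial-temporal patches concrete, here is a minimal sketch that cuts a tiny video into non-overlapping blocks spanning a few frames and a small pixel region each. The function names (`count_patches`, `patchify`) and the patch sizes are illustrative assumptions; OpenAI has not published Sora's actual patch dimensions, and the sketch works on plain pixel values purely for readability rather than on the representation Sora actually uses.

```python
def count_patches(frames, height, width, pt, ph, pw):
    """Number of non-overlapping space-time patches, assuming exact divisibility."""
    if frames % pt or height % ph or width % pw:
        raise ValueError("dimensions must be divisible by the patch size")
    return (frames // pt) * (height // ph) * (width // pw)


def patchify(video, pt, ph, pw):
    """Split video[t][y][x] into flattened patches of pt * ph * pw values each."""
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    count_patches(frames, height, width, pt, ph, pw)  # validates divisibility
    patches = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                # Each patch covers pt frames and a ph x pw region of every frame.
                patch = [video[t][y][x]
                         for t in range(t0, t0 + pt)
                         for y in range(y0, y0 + ph)
                         for x in range(x0, x0 + pw)]
                patches.append(patch)
    return patches


# Tiny grayscale "video": 4 frames of 4x6 pixels, each value encoding its position.
video = [[[t * 100 + y * 10 + x for x in range(6)] for y in range(4)]
         for t in range(4)]
patches = patchify(video, pt=2, ph=2, pw=3)
print(len(patches), "patches of", len(patches[0]), "values each")
```

Because every clip is reduced to the same kind of unit, clips of different lengths, resolutions, and aspect ratios simply yield different numbers of patches, which is what lets one model train on all of them.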

This “unified patching” strategy means Sora processes videos and images in a consistent manner, allowing it to learn general representations of visual data more effectively. This is the secret sauce behind the model’s ability to grasp real-world physics, a key selling point for OpenAI’s new model.

“Sora’s ability to generate longer, visually impressive clips is a direct result of its sophisticated patching architecture, treating space and time as continuous elements in the video sequence.”

Access and Availability: Sora AI Release Date and Waiting List

Following its initial demonstration, the most pressing question for creatives globally became: When is the Sora AI release date, and how can I access Sora AI?

As of the last update, OpenAI has confirmed that Sora is not yet publicly available. The company has taken a cautious, multi-phase rollout approach, prioritizing safety and ethical considerations before mass deployment.

Current Status and Beta Testing

Initially, access was granted exclusively to a limited number of researchers, experts, and artists for red-teaming and safety evaluation. This closed-beta phase focuses on identifying vulnerabilities related to bias, misuse, and the generation of harmful content, all crucial steps in addressing the safety concerns around Sora.

- Red Teaming: Security experts are actively trying to “break” the system and prompt it to create inappropriate or misleading content.

- Creative Professionals: A select group of artists, visual designers, and filmmakers were given early access to provide feedback on usability and creative output. This is where many of the initial, jaw-dropping Sora AI demo clips originated.

Projected Commercial Availability and Pricing

OpenAI has not provided a firm Sora AI release date for the general public or API access. Sam Altman, the CEO of OpenAI, has repeatedly emphasized that the release will only occur once the safety mechanisms are robust enough to mitigate risks associated with deepfakes and mass misinformation.

Speculation suggests a public or commercial release may occur in phases:

- API Access: Similar to GPT-4, the first widespread availability will likely be through an API for developers and large corporate partners who can afford higher initial pricing and have built-in content moderation tools.

- Consumer Interface: A simplified, DALL-E style interface is expected for the general public later, possibly alongside tiered Sora AI pricing (e.g., pay-per-generation or subscription).

There is currently no official public Sora AI waiting list for general users. The best way to stay informed about how to access Sora AI is to monitor the official OpenAI blog and announcements, especially concerning developments around its enterprise products.

The New Standard: Sora AI Video Examples and Creative Potential

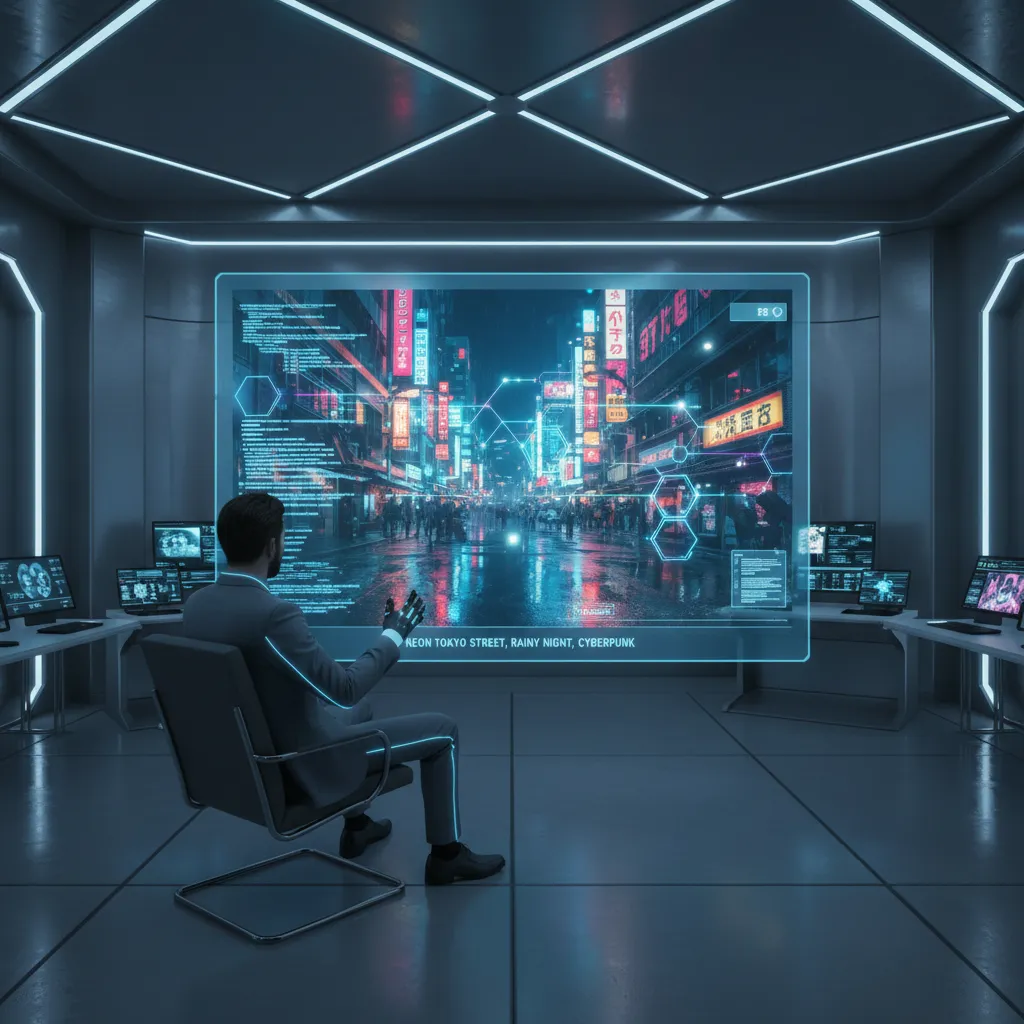

The true power of Sora is best understood through the quality of the videos it generates. The early Sora AI video examples showcased everything from hyper-realistic historical street scenes to fantastical, impossible scenarios rendered with stunning fidelity.

Cinematic Quality and Physics Simulation

What makes the output so remarkable is not just the clarity, but the temporal coherence—the objects and characters behave as they should throughout the duration of the clip.

- Complex Movements: Sora excels at generating intricate camera movements, such as sweeping drone shots or tight handheld movements, all while keeping the subjects in focus and anatomically correct.

- Physical Consistency: In many clips, water behaves like water, reflections look real, and shadows fall accurately as the scene progresses. While not perfect (more on limitations later), this simulation of physics is a massive leap forward.

- Storytelling Potential: Sora can maintain characters and visual style across multiple shots derived from the same prompt, hinting at the future possibility of generating entire, short-form narratives.

Crafting Effective Sora AI Prompts

Like any powerful generative model, the output of Sora is highly dependent on the input. Learning to write effective Sora AI prompts will be the new skill required for virtual filmmaking.

A successful prompt must be highly descriptive, covering four key elements:

| Element | Description | Example Detail |
|---|---|---|
| Subject | Detailed description of the main object(s) or character(s). | “A shaggy brown dog wearing a small blue backpack…” |
| Action/Motion | What is happening and the style of movement. | “…is running across a golden, dusty savannah.” |
| Environment/Setting | The location, lighting, time of day, and mood. | “Golden hour lighting, volumetric dust particles, wide-angle shot.” |
| Cinematic Style | Camera work, film stock, and resolution. | “Shot on 70mm film, high dynamic range, cinematic grade, shallow depth of field.” |
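
One way to keep all four elements in every prompt is to assemble prompts from named fields. The sketch below is a hypothetical helper, not part of any OpenAI tool or API: the `VideoPrompt` class and its `render` method are assumptions made for illustration, and they only join the four elements from the table into a single prompt string.

```python
from dataclasses import dataclass


@dataclass
class VideoPrompt:
    """Hypothetical container for the four prompt elements in the table."""
    subject: str
    action: str
    environment: str
    style: str

    def render(self) -> str:
        # Refuse to build a prompt when any of the four elements is missing.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"{name} must not be empty")
        # Subject and action form the opening sentence.
        sentence = f"{self.subject.strip()} {self.action.strip().rstrip('.')}."
        # Environment and style follow as short descriptive sentences.
        details = [self.environment.strip().rstrip("."),
                   self.style.strip().rstrip(".")]
        return sentence + " " + ". ".join(details) + "."


prompt = VideoPrompt(
    subject="A shaggy brown dog wearing a small blue backpack",
    action="is running across a golden, dusty savannah.",
    environment="Golden hour lighting, volumetric dust particles, wide-angle shot.",
    style="Shot on 70mm film, high dynamic range, cinematic grade, shallow depth of field.",
)
print(prompt.render())
```

Keeping the elements separate makes it easy to vary one of them, for example the cinematic style, while holding the rest of the scene fixed.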

Effective prompt engineering is the key to unlocking the full creative potential of Sora. It transforms the user from a button-presser into a conceptual director, focusing entirely on narrative and aesthetic vision.

Head-to-Head: Sora vs. The Leading AI Video Competitors

Before Sora arrived, the text-to-video AI model space was competitive, dominated primarily by Runway and Pika. The immediate question upon seeing the Sora AI demo was how it stacked up against these established players.

Sora vs. Runway Gen-2

RunwayML’s Gen-2 was, for a long time, the industry standard for consumer-accessible, high-quality AI video generation.

| Feature | OpenAI Sora | Runway Gen-2 |
|---|---|---|
| Max Length | Up to 60 seconds (with maintained consistency) | Up to 4 seconds (standard) or 16 seconds (advanced methods) |
| Resolution | Native high-resolution output (1920x1080) | Good, often requires upscale from lower base resolution |
| Coherence | Exceptional temporal and spatial consistency across long clips | Consistent, but often loses track of objects or physics in longer sequences |
| Model Type | Transformer-based Diffusion Model (World Simulator) | Traditional Diffusion Model |

The primary difference lies in length and fidelity. While Runway Gen-2 is a powerful tool for short clips and artistic transformation (video-to-video), Sora’s architecture allows it to generate significantly longer, more complex, and narratively coherent scenes. Comparing the two today means comparing an advanced prototype (Sora) with a commercially mature product (Runway).

Sora vs. Pika Labs

Pika Labs gained popularity for its accessibility, community focus, and ability to generate short, stylized animations and clips quickly.

Pika is often faster and easier to use for quick concepting. However, it typically lacks the photorealism and detailed physical modeling that Sora demonstrates. Pika is excellent for artistic and stylized video generation, whereas Sora aims for maximum photorealism and complex simulation. For professionals seeking high production value, Sora sets the benchmark for quality among the text-to-video models of 2024.

OpenAI’s goal with Sora appears to be replacing stock footage or even entire scenes in blockbuster movies, whereas Pika and Runway are currently focused on short-form content, social media, and concept art.

Navigating the Challenges: Limitations and Safety Concerns

While the potential of Sora is immense, it is still a work in progress. Critically analyzing Sora’s current limitations and inherent ethical challenges is paramount, especially for a tool poised to reshape the role of AI in creative industries.

Current Technical Limitations

Even in the most impressive Sora AI demo reels, researchers have pointed out specific areas where the model struggles:

- Modeling Complex Physics: While Sora is good at general physics, it occasionally fails at complex object interactions. For example, generating a person eating or interacting with delicate objects might result in physics-defying oddities.

- Temporal Consistency Over Extended Time: While better than competitors, in very long sequences, objects can still spontaneously appear or disappear, or change form slightly (known as “pop-in” or “morphing”).

- Spatial Understanding Errors: The model sometimes confuses left and right or struggles with precise instructions regarding camera placement or spatial relationships within a crowded scene.

These limitations are not trivial. They demonstrate that while Sora can synthesize visual data impressively, it doesn’t yet possess a flawless, deep, internal model of the three-dimensional world—a challenge OpenAI is actively working to overcome.

The Impact on Creative Industries and Animators

The introduction of such a powerful tool naturally sparks fear and excitement within the creative sector.

The Sora AI impact on animators, visual effects artists, and camera operators will be profound. The ability to generate complex B-roll footage, conceptualize environments, or quickly prototype cinematic ideas could eliminate tedious and costly pre-production steps. However, it also threatens entry-level positions reliant on generating basic animations or stock videos.

The consensus among industry leaders is that these tools will not replace human creativity but will instead become essential assistants. Artists will evolve from being frame-by-frame executors to high-level directors and prompt engineers, focusing on unique concepts that the AI executes.

Ethical Implications and Misinformation

The most significant hurdle before a general release is addressing the safety concerns around Sora. The potential for misuse is staggering:

- Deepfakes and Misinformation: Sora’s photorealism makes it an unparalleled tool for generating highly convincing, fictitious events, which poses a serious threat to media integrity and democratic processes.

- Copyright and Licensing: The source data used to train Sora is vast. Ensuring the generated output does not infringe on existing copyrights is a major legal and ethical challenge.

- Bias in Training Data: If the training data contains biases regarding race, gender, or culture, Sora will inevitably perpetuate those biases in its generated videos, requiring careful filtering and moderation.

OpenAI has stated it is building detection tools, watermarks, and internal policies to monitor and flag harmful content before the model is widely distributed. This is a critical step that will dictate the timeline for commercial access to Sora.

Sora AI Use Cases: Transforming Industries

The applications for creating videos with AI are virtually limitless, extending far beyond Hollywood blockbusters.

1. Filmmaking and Pre-Production

- Storyboarding and Concept Art: Directors can instantly prototype complex shots and sequences, dramatically speeding up the visualization process.

- Virtual Scouting: Generate realistic depictions of locations based on text, saving travel and time costs for location scouting.

- B-Roll and Stock Footage: Generating unique, high-quality establishing shots or background plates on demand eliminates the need for generic stock video licenses.

2. Advertising and Marketing

- Rapid Ad Creation: Marketers can test dozens of visually distinct advertising concepts in hours instead of weeks, tailoring campaigns to micro-audiences instantly.

- Personalized Content: The potential exists to generate highly personalized video content for individual users at scale.

3. Education and Simulation

- Historical Recreation: Educators can generate historically accurate (or speculative) visualizations of events, making lessons more engaging.

- Scientific Visualization: Creating complex 3D simulations of molecular interactions or astronomical events from simple input prompts.

4. Gaming and Virtual Worlds

- Dynamic Assets: Sora could be integrated into game engines to dynamically generate environments, cutscenes, or even non-playable character actions based on real-time narrative changes. The future of gaming might see worlds built on the fly using models like Sora.

The ability to move from abstract idea to cinematic realization in seconds is the core value proposition, and it is why Sora’s continued development deserves close attention.

Conclusion: The Horizon of Visual Storytelling

OpenAI’s Sora represents a monumental leap in artificial intelligence, moving the creative world from static imagery into the dynamic, complex realm of video. It is a powerful generative video AI that not only renders scenes but attempts to simulate the world they inhabit, offering a glimpse into the future of AI filmmaking.

While Sora’s current limitations, such as occasional physics errors and safety risks, prevent immediate public rollout, the promise of the underlying technology is undeniable. The combination of a transformer architecture with the patching technique has established Sora as a leader in text-to-video AI today.

For professionals and enthusiasts waiting for an official release date, the message is clear: the ability to generate hyper-realistic, minute-long video clips on demand will fundamentally change how stories are told and how visual content is produced across every industry. Embrace the change, learn the art of writing detailed prompts, and prepare for a creative landscape where imagination is the only barrier to production.

The curtain has been pulled back on the future, and it moves.

FAQs (People Also Ask)

Q1. What is OpenAI Sora, and why is it significant?

OpenAI Sora is a highly advanced text-to-video AI model capable of generating high-definition, minute-long videos from simple text descriptions (prompts). It is significant because it demonstrates temporal consistency, spatial coherence, and realistic physics simulation over extended video sequences to a degree earlier models had not, which is why OpenAI frames it as a “world simulator.”

Q2. Is Sora AI publicly available, and what is the Sora AI release date?

No, Sora AI is not yet publicly available. OpenAI is currently in a closed testing phase, providing access only to researchers and creative professionals for red-teaming and safety evaluations. A definitive Sora AI release date has not been announced, as OpenAI prioritizes addressing Sora AI safety concerns related to misuse and deepfakes before mass commercial rollout.

Q3. How does Sora compare to other AI video generators like RunwayML or Pika Labs?

Sora significantly outperforms models like Runway Gen-2 and Pika Labs primarily in video length, coherence, and photorealism. While competitors typically generate short clips (under 16 seconds), Sora can produce clips up to 60 seconds while maintaining consistent characters, detailed physics, and high resolution, positioning it as the 2024 benchmark for quality and complexity in text-to-video AI.

Q4. What are the main limitations of the Sora AI model?

The primary Sora AI limitations include difficulty accurately modeling complex physical interactions (e.g., someone eating or shattering glass), occasional errors in temporal consistency over very long clips (objects spontaneously changing), and confusing spatial relationships (left vs. right). OpenAI is continuously working to refine the model’s understanding of real-world physics.

Q5. What type of technical architecture does Sora use?

Sora combines a diffusion model for video with a transformer architecture. It breaks down videos into space-time “patches” (similar to tokens in LLMs) and uses a scalable transformer network to process these patches, allowing it to efficiently learn from vast amounts of varied video data and scale up to long, complex generations.

Q6. Will Sora AI replace filmmakers and animators?

While Sora will radically change how video is made, the consensus is that it will not replace human creatives but will fundamentally change their roles. Animators and filmmakers will likely transition into high-level conceptual directors and prompt engineers, leveraging Sora for rapid prototyping, pre-visualization, and generating highly detailed B-roll footage. It democratizes the tools of cinema.

Q7. How much will Sora AI cost?

OpenAI has not released official pricing details for Sora. Based on their past product launches (like GPT-4 and DALL-E), it is anticipated that access will involve a tiered system: a premium API cost for large-scale enterprise use and potentially a subscription or pay-per-generation model for individual users, though this is currently speculation.