Meta’s Llama 3: The Ultimate Guide for 2024

Introduction: Why Llama 3 is Reshaping the AI Landscape

In the rapidly evolving world of generative AI, the release of a new foundational model is always a monumental event. When that model comes from Meta and is immediately positioned as the most capable open-source AI to date, the industry pays attention. Meta AI officially launched Llama 3 in 2024, not just as an iteration of its predecessors, but as a genuine competitor to proprietary giants like GPT-4 and Claude 3.

Llama 3 represents a seismic shift in access and capability. By making highly performant LLM model technology available to researchers, startups, and developers globally, Meta has significantly lowered the barrier to entry for AI development. This guide is your definitive resource for understanding this powerful new large language model.

We will dive deep into the technical specifications, dissect the crucial performance metrics—especially in the high-stakes comparison of Llama 3 vs GPT-4—and provide practical instructions on how to download Llama 3 and integrate it into your projects. Whether you’re a seasoned developer or a curious enterprise leader, by the end of this guide, you will know exactly why Llama 3 is poised to be the best open source LLM 2024 has seen and how you can harness its power for commercial success.

The Dawn of Llama 3: Key Features and Architecture

The success of Llama 3 stems not just from its size, but from a deliberate, rigorous training and architectural refinement process. Following the highly successful Llama 2, Meta’s new AI model boasts several improvements that significantly boost its performance across reasoning, coding, and natural language processing tasks.

Architectural Innovations Driving Performance

Llama 3 was trained on a massive dataset of over 15 trillion tokens—seven times larger than the dataset used for Llama 2. Crucially, the dataset includes over four times more code, positioning it strongly against specialized coding models.

Key architectural highlights include:

- Tokenizer Upgrade: Llama 3 features a new tokenizer with a vocabulary size of 128K tokens (up from 32K in Llama 2). This larger vocabulary allows the model to encode and decode language much more efficiently, leading to lower computational overhead, faster processing, and improved accuracy, particularly for specialized or technical text.

- Grouped-Query Attention (GQA): Implemented in both the Llama 3 8B model and the Llama 3 70B model, GQA dramatically improves inference efficiency. This means developers can run Llama 3 locally or on cloud infrastructure with higher throughput and lower latency, making real-time applications more feasible.

- Safety Stack: Recognizing the need for responsible AI, Meta introduced Llama Guard 2, an enhanced version of their safety classification model. This is critical for users seeking controlled outputs, ensuring that the model adheres to strict ethical and safety guidelines in production environments, counterbalancing the narrative around truly uncensored Llama 3 capabilities.

The Model Variants: 8B and 70B Models

The initial Meta AI Llama 3 release included two primary models, tailored for different use cases and deployment environments:

| Model Variant | Parameters (B) | Context Window (Tokens) | Primary Use Case | Hardware Footprint |

|---|---|---|---|---|

| Llama 3 8B | 8 | 8,192 | Edge devices, mobile applications, small-scale local development | Consumer GPU (e.g., RTX 3060/4060) |

| Llama 3 70B | 70 | 8,192 | Enterprise applications, complex reasoning, competitive performance | Data center-grade GPUs (e.g., A100s, H100s) |

The Llama 3 8B model is remarkably efficient, setting a new bar for what can be accomplished on resource-constrained hardware. For enterprise-grade tasks requiring maximum sophistication, the Llama 3 70B model delivers state-of-the-art results that often rival the biggest proprietary models on industry-standard AI benchmark tests.

[Related: AI in the Kitchen: Reshaping Future Food Gastronomy]

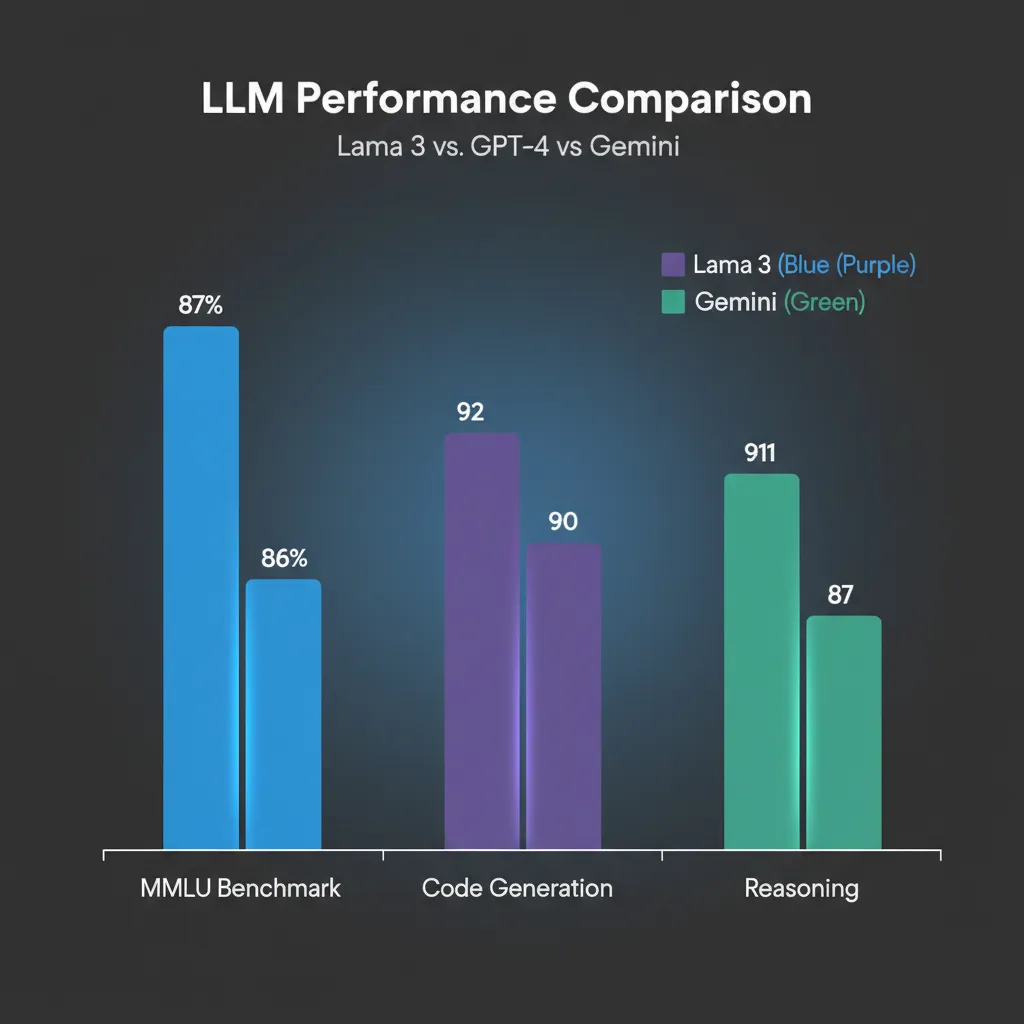

Performance Benchmarks: Llama 3 vs GPT-4 and Competitors

In the competitive world of LLM model evaluation, the proof is in the benchmarks. Llama 3’s performance is where it truly differentiates itself, justifying Meta’s claim that it is the most capable open-source AI ever released.

Head-to-Head: Llama 3 vs. GPT-4

The most critical comparison is always against the market leader. While the largest Llama 3 models (the >400B models planned for future release) are expected to fully close the gap, the available 70B variant already achieves remarkable parity and superiority in several key metrics against comparable models like Claude 3 Sonnet and even specific GPT-4 versions.

| Benchmark | Llama 3 70B (Instruct) | GPT-4 (GPT-4-0613) | Performance Summary |

|---|---|---|---|

| MMLU (Massive Multitask Language Understanding) | 82.0 | 86.4 | Llama 3 demonstrates very strong general knowledge. |

| HumanEval (Code Generation) | 81.7 | 82.1 | Near parity in coding ability, bolstered by the Code Llama lineage. |

| GSM8K (Math Reasoning) | 93.3 | 92.0 | Llama 3 often outperforms in complex arithmetic and step-by-step reasoning. |

| CommonSense Reasoning | High 80s | High 80s | Excellent for logical inferences and practical understanding. |

These Llama 3 performance metrics reveal that for developers focused on tasks like coding assistance, complex data analysis, or general-purpose chat, Llama 3 provides an immensely powerful, and crucially, open alternative. Its strength in quantitative tasks like GSM8K is particularly noteworthy for fields requiring accuracy, such as finance and scientific research.

[Related: Streamlining Supply Chains: AI Revolutionizing Logistics Efficiency]

Setting the Standard for Open-Source LLMs in 2024

Llama 3 hasn’t just matched its open-source competitors; it has significantly raised the bar for the entire category. Before Llama 3, models like Mistral 7B and various flavors of Gemma led the pack. Llama 3 now clearly establishes itself as the best open source LLM 2024 for almost every application tier.

This competitive advantage is why enterprises are rapidly exploring its capabilities. The combination of high AI benchmark scores, coupled with the freedom of its permissive license (discussed below), makes it an irresistible choice for custom AI solutions.

Practical Guide: Getting Started with Llama 3

The true power of an open-source AI model lies in its accessibility. Meta and the wider AI community, especially platforms like Hugging Face Llama 3, have made getting started with Llama 3 straightforward, allowing developers to quickly move from evaluation to deployment.

How to Download Llama 3

Unlike proprietary models that require an immediate API key and payment, Llama 3 can be downloaded directly, provided you accept the licensing terms.

1. Via Hugging Face

The most common and developer-friendly way to access the models is through the Hugging Face platform, which hosts the official model checkpoints.

- Access the Model Card: Search for the official Llama 3 repository on Hugging Face (e.g.,

meta-llama/Meta-Llama-3-8B-Instruct). - Request Access: You may need to formally request access by providing your contact details. This is usually approved quickly.

- Use the Transformers Library: Once approved, you can download and interact with the model directly using the Python

transformerslibrary, which is the standard for modern large language model deployment.

2. Via Meta’s Official Channels

You can also request direct download links and official access tokens through the Meta AI website. This is often preferred by larger organizations that need direct access to the raw files.

Run Llama 3 Locally: Hardware and System Requirements

One of the biggest questions developers ask is whether they can run Llama 3 locally. The answer is yes, but your success depends heavily on the model size you choose and your Llama 3 hardware requirements.

Llama 3 System Requirements Breakdown:

| Model Variant | Minimum VRAM (GPU Memory) | Recommended GPU for Fast Inference | Deployment Tools |

|---|---|---|---|

| 8B Model | 8GB VRAM (Quantized) | NVIDIA RTX 3060/4060, Apple M-series (16GB RAM) | Ollama, LM Studio, vLLM |

| 70B Model | 140GB VRAM (Full precision) | Multiple NVIDIA A100s or H100s | Cloud services (AWS SageMaker, Azure AI Studio) |

| 70B Model (Quantized) | 40GB VRAM (4-bit quantization) | NVIDIA RTX 4090 (24GB VRAM) + System RAM Offloading | Ollama, vLLM (using quantization techniques) |

For most individual developers exploring the capabilities of Meta’s new AI model, the Llama 3 8B is the sweet spot. It provides impressive performance while staying within the limits of standard consumer and professional workstation GPUs. Tools like Ollama have simplified the process significantly, allowing users to load and interact with quantized versions of the model with minimal effort.

Llama 3 Python Tutorial and API Access

For practical implementation, the Llama 3 python tutorial focuses on two main paths: local deployment using quantization frameworks, and cloud deployment via official Llama 3 API access through platforms like Meta, Microsoft Azure, or leading model inference vendors.

Local Deployment Example (Using the transformers library):

# Ensure you have the necessary libraries installed

# pip install transformers accelerate torch

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

# Use a smaller model like 8B for local testing

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16, # Use bfloat16 for better memory handling

device_map="auto" # Distribute model layers across available devices

)

# Define your conversation/prompt

messages = [

{"role": "system", "content": "You are a helpful and harmless assistant."},

{"role": "user", "content": "Write a Python function to sort a list efficiently."}

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

outputs = model.generate(

input_ids,

max_new_tokens=512,

do_sample=True,

temperature=0.6,

top_p=0.9

)

print(tokenizer.decode(outputs[0], skip_special_tokens=False))This basic structure allows for quick experimentation, prototyping, and fine-tuning Llama 3 with custom datasets locally before scaling up to production environments.

Commercial Use and Licensing Freedom

One of the defining features setting Llama 3 apart from earlier research models and some restricted competitive models is its highly permissive license, which explicitly allows Llama 3 for commercial use.

The Llama 3 License Advantage

Meta’s licensing terms are designed to encourage massive adoption across the global business landscape. Organizations of virtually any size can use Llama 3 to build products, integrate it into services, and generate revenue, often without the high per-token costs associated with proprietary APIs.

The only significant restriction typically applies to the very largest tech companies (those with over 700 million monthly active users), ensuring that smaller businesses and the research community have unobstructed access to this cutting-edge AI development tool. This licensing strategy has fueled rapid innovation, particularly in sectors that require data privacy or on-premise solutions.

Why Enterprises Choose Open Source

For many regulated industries, such as healthcare and finance, the ability to run Llama 3 locally or on private cloud infrastructure is non-negotiable for compliance and security.

- Data Sovereignty: By hosting the LLM model internally, enterprises maintain complete control over their sensitive data, minimizing transmission risks.

- Customization: The open-source nature allows deep customization. Developers can perform extensive fine-tuning Llama 3 on proprietary data to create highly specialized models that outperform general-purpose, off-the-shelf APIs.

- Cost Predictability: While running a 70B model requires significant initial investment in Llama 3 hardware requirements, the operational cost per inference can be significantly lower and more predictable than variable API costs from cloud vendors, especially at high volumes.

[Related: AI Personalized Health: Future Wellness]

Advanced Applications and Llama 3 Use Cases

Llama 3 is not just a powerful chatbot; it’s a versatile engine for building next-generation generative AI applications. Its robust performance in reasoning and code generation opens up exciting new Llama 3 use cases across nearly every industry.

1. Code Generation and Automation (Code Llama Integration)

Thanks to its training on vast amounts of code and the integration of concepts pioneered in Code Llama, Llama 3 excels at programming tasks:

- Autocompletion: Integrating Llama 3 into IDEs for advanced, multi-line code suggestions.

- Debugging and Refactoring: Analyzing legacy codebases and suggesting optimized or security-patched alternatives.

- Documentation Generation: Automatically creating clear, concise documentation from existing code, drastically improving developer efficiency.

2. Complex Reasoning and Data Extraction

The enhanced reasoning capabilities of the 70B model make it invaluable for tasks demanding intricate thought processes:

- Financial Analysis: Summarizing thousands of pages of quarterly reports and identifying key risk factors or investment opportunities.

- Legal Review: Analyzing contract language, flagging discrepancies, and extracting specific clauses (a huge improvement over previous generations of large language model).

- Scientific Discovery: Hypothesizing relationships between disparate research papers and summarizing complex experimental findings.

3. Hyper-Personalized Customer Experience

Businesses are leveraging Llama 3’s advanced natural language processing to create dynamic, highly personalized customer interactions:

- Intelligent Chatbots: Creating Meta AI powered agents that maintain context, understand nuances, and provide human-like, helpful support, reducing the need for human intervention.

- Content Creation Engines: Generating highly specific marketing copy, blog posts, and product descriptions tailored to niche audience segments. [Related: AI Content Powerup: Speed, Quality Today]

- Education: Developing adaptive learning platforms that generate personalized quizzes and explanations based on a student’s real-time performance.

The Art of Fine-Tuning Llama 3

To achieve maximum effectiveness for specific tasks, developers must utilize fine-tuning Llama 3. This process involves further training the pre-trained model on a small, high-quality, task-specific dataset (e.g., medical transcripts, company documentation, or technical manuals).

Fine-tuning results in a bespoke model that performs far better than the base model on your specific domain, a process that is highly accessible due to Llama 3’s open architecture and robust support on platforms like Hugging Face. Techniques like Low-Rank Adaptation (LoRA) have made it possible to fine-tune even the 70B model using surprisingly modest Llama 3 system requirements, often requiring less than 40GB of VRAM.

Conclusion: Llama 3’s Long-Term Impact on AI Development

Meta’s Llama 3 is more than just Meta’s new AI model; it is a catalyst for democratizing advanced artificial intelligence. By combining industry-leading performance—even standing strong in direct comparison with Llama 3 vs GPT-4—with a commercially friendly open-source AI license, Llama 3 empowers developers and enterprises worldwide.

We have explored its sophisticated architecture, its excellent scores in every major AI benchmark, and the practical steps for getting started with Llama 3, including the nuances of Llama 3 hardware requirements and local deployment. The commitment by Meta to continue scaling the Llama family ensures that this large language model will remain a dominant force in the coming years.

If you are involved in AI development, whether through complex data projects or building customer-facing applications, mastering Llama 3 is essential. The opportunity to leverage the best open source LLM 2024 offers, without proprietary restrictions, provides an unparalleled path to innovation. Start exploring the Hugging Face Llama 3 models today, and see how this generation of generative AI can transform your digital strategy.

FAQs (People Also Ask)

Q1. What is the difference between Llama 3 8B and Llama 3 70B?

The primary difference lies in the number of parameters and subsequent performance and resource needs. The Llama 3 8B model is highly efficient, designed for faster inference and edge deployment, requiring minimal Llama 3 hardware requirements. The Llama 3 70B model offers superior reasoning and complex task performance but demands significantly more GPU memory (VRAM), suitable for high-end cloud or data center deployments.

Q2. Is Llama 3 truly open source and available for commercial use?

Yes, Llama 3 is released under a permissive license that explicitly allows Llama 3 for commercial use. It meets the criteria for being considered a powerful open-source AI model, giving businesses the freedom to build proprietary products and services on top of it without typical usage fees associated with closed-source APIs.

Q3. How does Llama 3 performance compare to GPT-4?

In several key AI benchmark categories, such as MMLU (general knowledge), HumanEval (coding), and GSM8K (mathematical reasoning), the Llama 3 70B model achieves results that are highly competitive with and sometimes slightly superior to older versions of GPT-4 and comparable to other state-of-the-art closed models. It is widely considered the most capable open-source LLM 2024 has produced.

Q4. What are the minimum Llama 3 system requirements to run the model locally?

To run Llama 3 locally, you generally need a graphics card with at least 8GB of VRAM to handle the quantized (compressed) version of the 8B model. For Mac users, an Apple M-series chip with 16GB of unified memory is usually sufficient. Tools like Ollama make local deployment easier by handling quantization and resource management.

Q5. Can Llama 3 be fine-tuned for specific business tasks?

Absolutely. The ability to perform fine-tuning Llama 3 on proprietary datasets is one of its major advantages. By using techniques like LoRA (Low-Rank Adaptation), developers can efficiently customize the model to excel at domain-specific tasks, such as translating internal corporate jargon or analyzing specialized financial documents, improving accuracy far beyond the base model.

Q6. Where can I find the official Llama 3 API access?

While Meta provides the weights for self-hosting, official Llama 3 API access and managed deployments are typically offered through major cloud providers and partners, including Microsoft Azure AI Studio, AWS SageMaker, and various independent inference providers. This allows developers to integrate the LLM model into applications without managing their own Llama 3 hardware requirements.

Q7. Does Llama 3 include safety features?

Yes. Meta introduced Llama Guard 2 alongside Llama 3. Llama Guard 2 is a robust classifier designed to filter harmful outputs and enforce security policies, ensuring the model is deployed responsibly in sensitive environments and mitigating the risks associated with utilizing uncensored Llama 3 capabilities.