Llama 3.1 Unveiled: Meta’s New Open-Source AI Challenger Explained

The relentless pace of AI innovation just kicked into a higher gear. Just when the world was getting acquainted with the impressive capabilities of Llama 3, Meta has once again redefined the landscape with the launch of Llama 3.1. This isn’t just an incremental update; it’s a significant leap forward that firmly establishes Meta’s position as a dominant force in the AI arms race, championing the cause of open-source development against proprietary giants.

Llama 3.1 arrives as a direct challenger to models like OpenAI’s GPT-4o and Google’s Gemini family, bringing state-of-the-art performance into the hands of developers, researchers, and enterprises worldwide. With a colossal new 405 billion parameter model, enhanced coding abilities, and a massive context window, this release is more than just a new tool—it’s a catalyst for the next wave of generative AI applications.

In this comprehensive guide, we’ll dive deep into everything you need to know about Meta’s latest model. We’ll unpack its groundbreaking features, analyze its performance benchmarks against top competitors, and explore the practical applications that will shape industries. Whether you’re a developer eager to build, a business leader looking to innovate, or simply an AI enthusiast, this is your definitive look at the future of AI with Llama 3.1.

What is Llama 3.1? The Next Chapter in Open-Source AI

At its core, Llama 3.1 is the latest iteration of Meta’s family of large language models (LLMs). It builds upon the powerful foundation of Llama 3, which was already celebrated for its performance and efficiency. However, Llama 3.1 introduces a new flagship model and several key enhancements that elevate its capabilities to a whole new level.

Meta’s strategy continues to revolve around openly released models. By making the model weights publicly available, Meta is not just releasing a product; it’s fostering a global ecosystem of innovation. This approach allows anyone, from individual hobbyists to large corporations, to access, customize, and build upon state-of-the-art AI technology, accelerating both research and the development of novel applications.

The Llama 3.1 family includes three distinct sizes, each tailored for different use cases:

- Llama 3.1 8B: A highly efficient model perfect for on-device applications, quick tasks, and scenarios where resource constraints are a factor.

- Llama 3.1 70B: The versatile workhorse, offering a fantastic balance of high performance and manageable deployment costs, ideal for a wide range of enterprise applications.

- Llama 3.1 405B: The new titan. This massive model is designed for maximum performance, capable of tackling the most complex reasoning, comprehension, and generation tasks with unprecedented nuance and accuracy.

This release solidifies Meta’s commitment to providing a powerful, freely available alternative that directly challenges the closed, API-only models that have dominated the market.
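To make the trade-off between the three sizes more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It assumes the nominal parameter counts (8, 70, and 405 billion) and typical storage widths per parameter; it ignores the KV cache, activations, and framework overhead, so real deployments need more. The function and dictionary names are illustrative only.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: only model weights are counted; KV cache, activations,
# and framework overhead are ignored, so real usage will be higher.

# Assumed storage widths for common precisions (bytes per parameter).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts taken from the model names.
MODEL_PARAMS = {
    "Llama 3.1 8B": 8e9,
    "Llama 3.1 70B": 70e9,
    "Llama 3.1 405B": 405e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in MODEL_PARAMS.items():
    row = ", ".join(
        f"{precision}: {weight_memory_gb(params, size):,.0f} GB"
        for precision, size in BYTES_PER_PARAM.items()
    )
    print(f"{name} -> {row}")
```

Under these assumptions the 8B model needs about 16 GB at 16-bit precision, while the 405B model needs roughly 810 GB, which is why the largest model is typically served from multi-GPU servers rather than a single machine.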

Core Features: What Makes Llama 3.1 a Game-Changer?

Llama 3.1 isn’t just bigger; it’s smarter, more capable, and more versatile. Let’s break down the key features that set it apart from its predecessor.

The 405 Billion Parameter Powerhouse

The star of the show is undoubtedly the 405B model. With 405 billion parameters, it’s one of the largest and most powerful open-source models ever released. Parameters are the learned weights of the network, loosely akin to the strength of connections between neurons in a brain; more parameters generally allow a model to capture more nuanced patterns in data.

This massive scale enables the 405B model to:

- Grasp complex, multi-layered reasoning: It can follow intricate instructions and solve problems that require deep contextual understanding.

- Generate highly coherent and creative text: From writing professional reports to crafting compelling stories, its output reads remarkably naturally.

- Reduce hallucinations: Larger models with better training are less likely to invent facts, making them more reliable for enterprise and research use cases.

Expanded Context Window: 128K Tokens

Llama 3.1 now supports a 128,000-token context window. A “token” is a piece of a word, roughly equivalent to 4 characters of English text, or about three-quarters of a word. This means the model can process and “remember” approximately 90,000-100,000 words of information in a single prompt.

This is a monumental upgrade over the 8K window of Llama 3. It allows users to input entire books, lengthy research papers, extensive codebases, or detailed financial reports for analysis, summarization, or querying. The benefits are immense for professionals who work with large volumes of text.
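The arithmetic behind those numbers can be sketched in a few lines. This is only a rough estimate: it uses the 4-characters-per-token heuristic mentioned above plus an assumed rule of thumb of about 0.75 English words per token. An exact count requires running the model’s actual tokenizer, and the `reserved_for_output` budget is a hypothetical parameter added for illustration.

```python
import math

CONTEXT_WINDOW_TOKENS = 128_000
CHARS_PER_TOKEN = 4      # heuristic from the text above
WORDS_PER_TOKEN = 0.75   # assumption: common rule of thumb for English


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (heuristic only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 2_000) -> bool:
    """Check whether the text plus an output budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


# Approximate word capacity of the full window.
approx_words = int(CONTEXT_WINDOW_TOKENS * WORDS_PER_TOKEN)
print(approx_words)  # 96000

# A 600,000-character document is estimated at 150,000 tokens: too large.
print(fits_in_context("a" * 600_000))  # False
```

A check like this is useful as a cheap pre-filter before sending a long document to the model; anything near the limit should be measured with the real tokenizer.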

Specialized Excellence: Stronger Code Generation

Beyond general language tasks, Llama 3.1 is markedly stronger at programming. Meta has not shipped a separate Code Llama variant for this generation (the earlier Code Llama 70B is built on Llama 2); instead, the general-purpose Llama 3.1 models themselves show strong performance on coding benchmarks.

For developers, this means a more capable AI assistant that can:

- Write complex functions and algorithms from natural language prompts.

- Debug existing code with high accuracy.

- Translate code between different programming languages.

- Provide detailed explanations for code snippets.

This makes Llama 3.1 an indispensable tool for developers, streamlining workflows and boosting productivity.

Enhanced Steerability and Safety

A powerful AI is only useful if it’s controllable and safe. Meta has invested heavily in improving the “steerability” of Llama 3.1. This means developers have finer-grained control over the model’s output style, tone, and format, making it easier to integrate into specific applications. Furthermore, significant improvements have been made to reduce false refusals, where the model unnecessarily declines to answer a harmless prompt.
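In practice, steering usually happens through a system prompt. The sketch below builds a chat in the common role/content message format, with the tone and output format stated up front. The function name, parameters, and prompt wording are hypothetical, and how closely the model follows such instructions is not guaranteed.

```python
def build_messages(user_prompt: str,
                   tone: str = "neutral",
                   output_format: str = "plain text") -> list[dict]:
    """Build a chat message list whose system prompt steers style and format.

    Hypothetical helper: the instruction wording is an illustrative choice,
    not an official template.
    """
    system_prompt = (
        f"You are a helpful assistant. Respond in a {tone} tone. "
        f"Format every answer as {output_format}."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "Summarize the benefits of a 128K context window.",
    tone="formal",
    output_format="a bulleted list with at most three items",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping style instructions in the system message, separate from the user’s request, makes it easy to reuse the same steering across many prompts.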

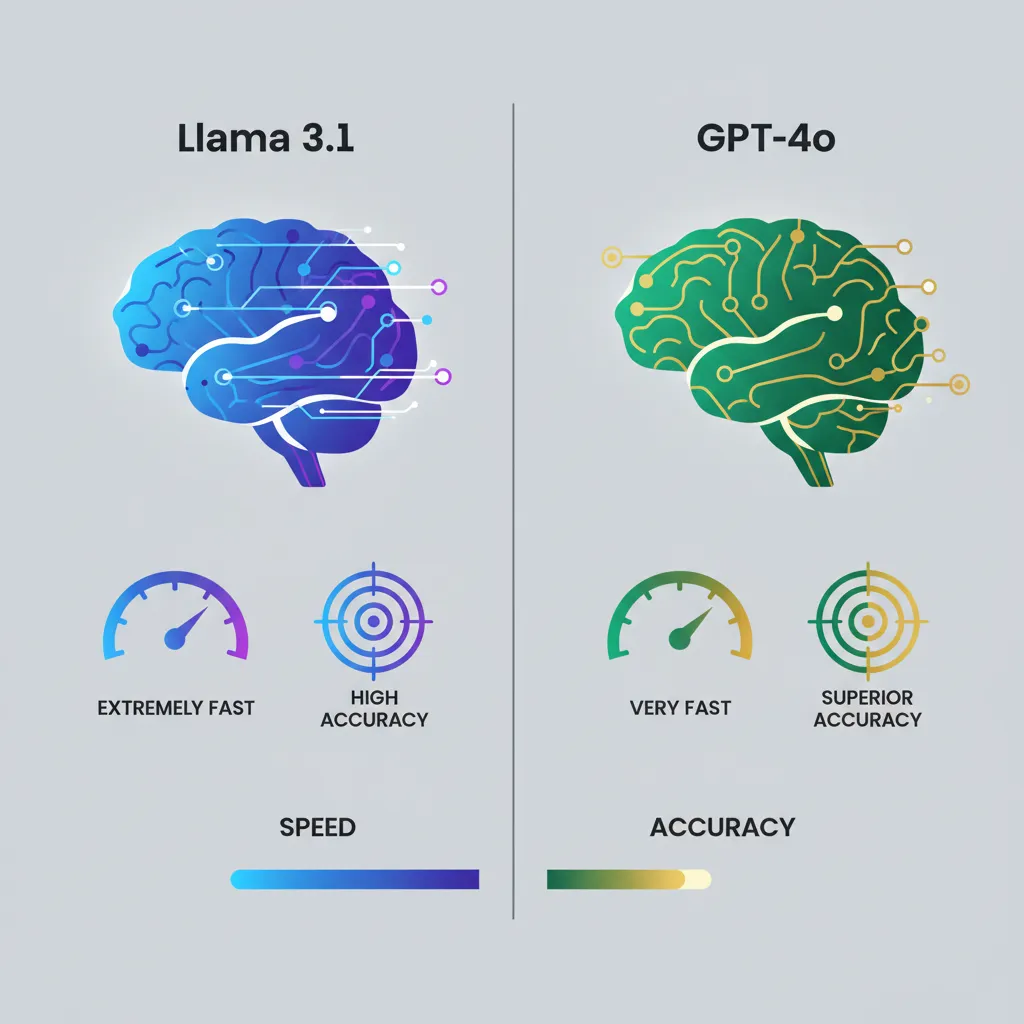

The Ultimate Showdown: Llama 3.1 vs. GPT-4o and Claude 3.5 Sonnet

The inevitable question is: how does Meta Llama 3.1 stack up against the competition? The field in 2024 is fiercely competitive, and Llama 3.1 enters as a top contender.

While direct, real-world comparisons are still emerging, benchmark data provides a clear picture of its capabilities.

Head-to-Head Benchmark Analysis

Llama 3.1 benchmarks show that the 405B model is highly competitive with, and in some cases surpasses, leading proprietary models like GPT-4o and Claude 3.5 Sonnet.

| Model | Key Strength | MMLU Score (Knowledge) | GPQA Score (Reasoning) | HumanEval (Coding) | Access Model |
|---|---|---|---|---|---|
| Llama 3.1 405B | Top-tier reasoning, Open Source | ~88.7 | ~57.8 | ~89.0 | Open Source |
| OpenAI GPT-4o | Multimodality, Speed, Integration | ~88.7 | ~52.8 | ~90.2 | Proprietary |
| Claude 3.5 Sonnet | Speed, Cost-Efficiency, Vision | ~88.7 | ~57.7 | ~92.0 | Proprietary |
| Llama 3.1 70B | Performance/Cost Balance, Open Source | ~85.3 | ~51.9 | ~80.5 | Open Source |

(Note: Scores are based on publicly reported data and can vary slightly based on evaluation methods.)

What do these benchmarks mean?

- MMLU (Massive Multitask Language Understanding): This tests general knowledge across 57 subjects. Llama 3.1 405B performs at the absolute top tier, proving its vast knowledge base.

- GPQA (Graduate-Level Google-Proof Q&A): This is a difficult benchmark for reasoning ability. Llama 3.1 405B’s exceptional score highlights its advanced reasoning capabilities, a key area of improvement.

- HumanEval: This tests the model’s ability to write functional code. Llama 3.1 405B scores within a few points of the best proprietary models.
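HumanEval results are usually reported as pass@k: the probability that at least one of k sampled solutions passes a problem’s unit tests. The sketch below implements the unbiased estimator introduced alongside the benchmark, where n samples are generated per problem and c of them pass. Whether the scores quoted above used exactly this setup is an assumption; the function name and the sample numbers are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of samples generated, c: number that passed the tests,
    k: number of attempts allowed. Computes 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Illustrative numbers: 10 samples for one problem, 3 of them correct.
print(round(pass_at_k(n=10, c=3, k=1), 4))  # 0.3
print(round(pass_at_k(n=10, c=3, k=5), 4))  # 0.9167
```

A benchmark score is then the average of this value across all problems, so a pass@1 near 90 means roughly nine in ten problems are solved on the first attempt.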

The takeaway from the performance data is clear: the open-source community now has a free, accessible model that performs on par with the best closed-source options available. This is a massive win for open challengers to proprietary models.

The Unbeatable Open-Source Advantage

Beyond raw numbers, the most significant differentiator in the Llama 3.1 vs GPT-4o debate is its open nature. Developers and businesses are not locked into a single provider’s API, pricing structure, or terms of service.

The benefits of Llama 3.1’s open release include:

- Customization: Businesses can fine-tune the model on their private data to create highly specialized applications.

- Cost Control: Running the model on your own infrastructure or a choice of cloud providers can be significantly more cost-effective at scale.

- Privacy and Security: Sensitive data can be processed on-premise without ever leaving the company’s secure environment.

- Transparency: Researchers can study the model’s architecture and behavior, fostering trust and accelerating innovation.


Practical Applications: Putting Llama 3.1 to Work

The true impact of Llama 3.1 will be seen in its real-world applications. Its enhanced capabilities unlock a wide range of sophisticated use cases.

For Developers: A New Frontier for Building

For the developer community, Llama 3.1 is a treasure trove. The combination of the powerful 405B model and its strong code generation provides an unparalleled toolkit.

- Complex Application Logic: Developers can build more intelligent agents and bots that can handle multi-step tasks and complex user queries.

- Advanced Data Analysis: Use the 128K context window to feed large datasets and generate insightful code for analysis and visualization.

- Hyper-Personalization: Building with Llama 3.1 allows for the creation of deeply personalized user experiences by fine-tuning the model on user data.

- Rapid Prototyping: Because Llama 3.1 is easy to integrate, ideas can be turned into working prototypes faster than ever.

The availability of robust developer tooling and integration with platforms like Hugging Face and major cloud providers further lowers the barrier to entry.

For Enterprises: Driving Business Transformation

Enterprise AI solutions built on Llama 3.1 are poised to deliver significant value. Businesses can now deploy state-of-the-art AI without being beholden to a single tech giant.

- Superior Customer Support: Power AI chatbots that can understand customer history and resolve complex issues with less human intervention.

- In-depth Market Research: Analyze thousands of customer reviews, reports, and social media comments to extract deep market insights.

- Automated Content Creation: Generate high-quality marketing copy, technical documentation, and internal communications at scale.

- R&D and Scientific Discovery: Process vast amounts of research literature to identify trends, connections, and new avenues for exploration.

This overview is just the beginning. The ability to privately host and customize the model makes it a secure and powerful option for any organization.

How to Use Llama 3.1: Your Getting Started Guide

Ready to dive in? Here’s how to use Llama 3.1 today. Meta has made the models accessible through various channels to cater to different needs.

- Meta AI: The easiest way to experience Llama 3.1 is through Meta’s own platforms, including Meta AI on Facebook, Instagram, WhatsApp, and the web.

- Hugging Face: The developer’s hub for AI, Hugging Face, hosts the Llama 3.1 models, allowing for easy download and integration via their popular `transformers` library.

- Cloud Providers: Llama 3.1 is available on major cloud platforms like AWS, Google Cloud, and Microsoft Azure. These platforms provide scalable infrastructure and managed endpoints for easy deployment.

- Local Deployment: The smaller 8B and 70B models can be run on powerful local machines, giving developers complete control for experimentation and development.

The open ecosystem ensures that whether you’re a beginner or an expert, there’s a clear path for Llama 3.1 implementation.
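For the Hugging Face route, a minimal sketch might look like the following. It assumes a recent `transformers` release with chat support in the text-generation pipeline, plus `torch` and `accelerate` installed, and that you have accepted the license for the gated `meta-llama` repository and logged in with a Hugging Face token. The helper name `generate_reply` and the example messages are hypothetical; the model is only downloaded when the function is actually called.

```python
def generate_reply(messages: list[dict],
                   model_id: str = "meta-llama/Llama-3.1-8B-Instruct",
                   max_new_tokens: int = 256) -> str:
    """Generate one assistant reply with the Hugging Face pipeline API.

    Imports are done lazily so this file can be loaded without the
    heavy dependencies; calling the function downloads the weights.
    """
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        model_kwargs={"torch_dtype": torch.bfloat16},  # halves memory vs fp32
        device_map="auto",  # requires the accelerate package
    )
    outputs = pipe(messages, max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text is the full conversation;
    # the last entry is the newly generated assistant message.
    return outputs[0]["generated_text"][-1]["content"]


EXAMPLE_MESSAGES = [
    {"role": "system", "content": "You are a concise technical assistant."},
    {"role": "user", "content": "Explain what a context window is."},
]

# Uncomment to run (needs a GPU or a lot of RAM, and repository access):
# print(generate_reply(EXAMPLE_MESSAGES))
```

The 8B instruct model is the practical starting point for local experiments; the same call pattern applies to the larger models once suitable hardware or a managed endpoint is available.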

The Broader Impact: The Future of AI is Open

The release of Llama 3.1 is more than just a technological milestone; it’s a statement about the future of AI. By pushing the performance of Meta’s open models to match and, in some cases, exceed proprietary ones, Meta is ensuring that the power of this transformative technology remains accessible to all.

This democratization fosters competition, drives down costs, and sparks creativity in a way that a closed ecosystem cannot. We are entering an era where the most advanced AI models are not locked away in corporate vaults but are shared resources for global progress. Llama 3.1 is a powerful catalyst for that future, empowering a new generation of builders to create a more intelligent and connected world.

Conclusion: A New Titan Has Arrived

Meta Llama 3.1 has firmly planted its flag at the summit of the AI landscape. With its colossal 405B model, it delivers performance that rivals the very best proprietary systems, while its open-source nature provides unparalleled freedom and flexibility. The enhanced coding capabilities, massive context window, and improved steerability make it a profoundly practical and powerful tool for developers, enterprises, and researchers alike.

This release is a testament to the power of open collaboration and a bold challenge to the status quo. It proves that state-of-the-art AI can be both powerful and accessible. As the community begins to build with, fine-tune, and innovate upon Llama 3.1, we are certain to see an explosion of new applications that were previously unimaginable. The next era of AI is here, and it’s open for everyone.

What incredible solutions will you build with the power of Llama 3.1? The possibilities are endless.

Frequently Asked Questions (FAQs)

Q1. What is Meta Llama 3.1?

Llama 3.1 is the latest family of open-source large language models from Meta AI. It includes three sizes (8B, 70B, and a new state-of-the-art 405B parameter model) and features significant improvements in reasoning, code generation, and a larger 128K context window.

Q2. How does Llama 3.1 differ from Llama 3?

Llama 3.1 introduces the much larger 405B model, which offers superior performance on complex tasks. It also expands the context window to 128K tokens (from 8K in Llama 3), delivers stronger code generation, and enhances model steerability and safety.

Q3. Is Llama 3.1 better than GPT-4o?

On many industry-standard benchmarks, the Llama 3.1 405B model performs at a level comparable or even superior to GPT-4o, especially in areas like graduate-level reasoning (GPQA). The key difference is that Llama 3.1 is open-source, offering greater flexibility, customization, and cost control, whereas GPT-4o is a proprietary model accessed via API.

Q4. What are the main new features of Llama 3.1?

The main new features are the introduction of the powerful 405B parameter model, an expanded 128,000-token context window for processing large documents, stronger code generation, and overall better performance and model control.

Q5. Is Llama 3.1 free for commercial use?

Yes, for most users. Llama 3.1 is free to download and is released under the Llama 3.1 Community License, which permits both research and commercial use without licensing fees. The main exception is that companies whose products exceed 700 million monthly active users must request a separate license from Meta.

Q6. What are the different sizes of the Llama 3.1 model?

The Llama 3.1 family comes in three sizes to suit different needs:

- 8B (Billion parameters): For on-device and efficient applications.

- 70B (Billion parameters): A balanced model for general-purpose, high-performance tasks.

- 405B (Billion parameters): The largest model, designed for maximum capability on the most complex reasoning and generation tasks.

Q7. How can developers access and use Llama 3.1?

Developers can access Llama 3.1 through several channels. It’s available for download on platforms like Hugging Face, can be deployed via major cloud providers such as AWS, Google Cloud, and Azure, and can be integrated into projects using popular AI development libraries.