Llama 3.1 Guide: Specs, Features & Access

Introduction: The New Apex of Open-Source AI

The landscape of large language models 2024 is being continuously reshaped by rapid releases, but few releases generate the sheer seismic activity of a new Meta model. The unveiling of Llama 3.1 marks a pivotal moment, not just for Meta, but for the entire ecosystem of advancements in open-source AI.

This isn’t merely an incremental update; Meta Llama 3.1 represents a calculated leap forward, challenging the perceived dominance of closed-source giants like OpenAI and Anthropic. Meta has successfully positioned Llama 3.1 as the undisputed state-of-the-art LLM in the open-source community, driving intense innovation and forcing a competitive repricing of capabilities across the industry.

For developers, researchers, and tech enthusiasts, understanding what is Llama 3.1—its core specifications, transformative features, and immediate accessibility—is crucial for navigating the AI industry trends of the coming year.

In this definitive guide, we will unpack the technical might of the flagship Llama 3.1 405B model, provide a thorough AI model comparison against rivals like GPT-4o, detail the Llama 3.1 features, and, most importantly, show you exactly how to access Llama 3.1 today, whether you’re a consumer chatting with Meta AI or a developer building the next generation of applications.

I. The Technical Revolution: Understanding Llama 3.1 Model Specs

The power of any modern language model lies in its underlying architecture and scale. With Llama 3.1, Meta has pushed the boundaries of what is achievable in a publicly available model, signaling a strong commitment to fostering an open-source AI model community that can truly compete.

The Powerhouse: Unpacking the Llama 3.1 405B Model

The star of this release is unquestionably the Llama 3.1 405B variant. While Llama 3 introduced groundbreaking performance across smaller model sizes, the 405 billion parameter model of Llama 3.1 represents Meta’s foray into the high-end, extremely powerful LLM space.

The “405B” variant is not just larger; it is a significantly more refined model, trained on an even larger, more diverse, and meticulously curated dataset. Its size allows for superior nuance, complex reasoning, and world knowledge, making it an unprecedented tool for tackling high-stakes tasks in enterprise and research settings.

Key Llama 3.1 model specs across the model family include:

| Model Variant | Parameters (approx.) | Target Use Case | Key Differentiator |

|---|---|---|---|

| Llama 3.1 8B | 8 Billion | Edge/Mobile Deployment, Quick Prototyping | Highest performance-to-size ratio |

| Llama 3.1 70B | 70 Billion | Production-Ready Applications, Standard Enterprise | Balanced power and deployability |

| Llama 3.1 405B | 405 Billion | State-of-the-Art Research, Complex Reasoning | Unrivaled performance in open-source |

This tiered structure ensures that the power of Llama 3.1 is available to almost every segment of the industry, from low-latency mobile apps to high-throughput cloud services.

Massive Context: The Llama 3.1 Context Window Explained

One of the most significant upgrades in the Llama 3.1 model specs is the vastly expanded context window. The context window defines how much information—or how many tokens—the model can process and remember in a single interaction.

Where Llama 3 offered a 8K context window, Llama 3.1 dramatically scales this up to 100K tokens.

This shift is transformative for tasks requiring extensive memory and understanding of long documents, complex codebases, or extended conversational history. For AI for developers, this means the model can review, debug, and improve massive files of code without losing track of dependencies or architecture. For enterprise users, it means summarizing entire manuals, legal contracts, or years of transcribed meetings in a single prompt.

This 100K token capacity puts Llama 3.1 at the forefront of memory-intensive tasks, an area where models often struggle.

II. Llama 3.1 Features: Beyond Simple Chat

Llama 3.1 features are built around two core concepts: superior native code reasoning and advanced steps toward true multimodality. Meta engineered the model not just to generate human-like text, but to become a highly competent professional assistant.

Llama 3.1 as a Next-Gen Coding Assistant AI

The open-source community, particularly developers, had high expectations for Llama 3.1’s coding prowess, and Meta delivered. The fine-tuning phase included an unprecedented amount of high-quality, diverse code, resulting in a coding assistant AI that exhibits exceptional proficiency in:

- Code Generation: Producing highly optimized, idiomatic code across dozens of languages (Python, JavaScript, Rust, C++).

- Code Translation: Effortlessly converting legacy codebases from one language to another, maintaining logic and structure.

- Debugging and Explaining: Identifying subtle bugs within complex files and providing detailed, contextual explanations for proposed fixes—a capability significantly enhanced by the 100K Llama 3.1 context window.

The performance of Llama 3.1 on benchmarks like HumanEval and MBPP has solidified its position as the top performer among readily available, downloadable LLMs, making it a critical tool for developers worldwide.

The Path to True Multimodal AI Capabilities

While the initial release of Llama 3.1 is primarily focused on text and code, Meta has confirmed significant strides toward robust multimodal AI capabilities. Llama 3.1 is internally structured to better integrate vision and audio processing in future iterations, or even through immediate, high-quality multimodal instruction tuning.

This means that the core model architecture is primed to handle inputs like images, video, and audio seamlessly, promising a future where a developer can hand the model a screenshot of an error message or a diagram of a database schema and receive instant, contextualized text responses. This expansion is essential for keeping pace with rivals and moving the entire generative AI news cycle forward.

[Related: ultimate-guide-generative-ai-content-creation/]

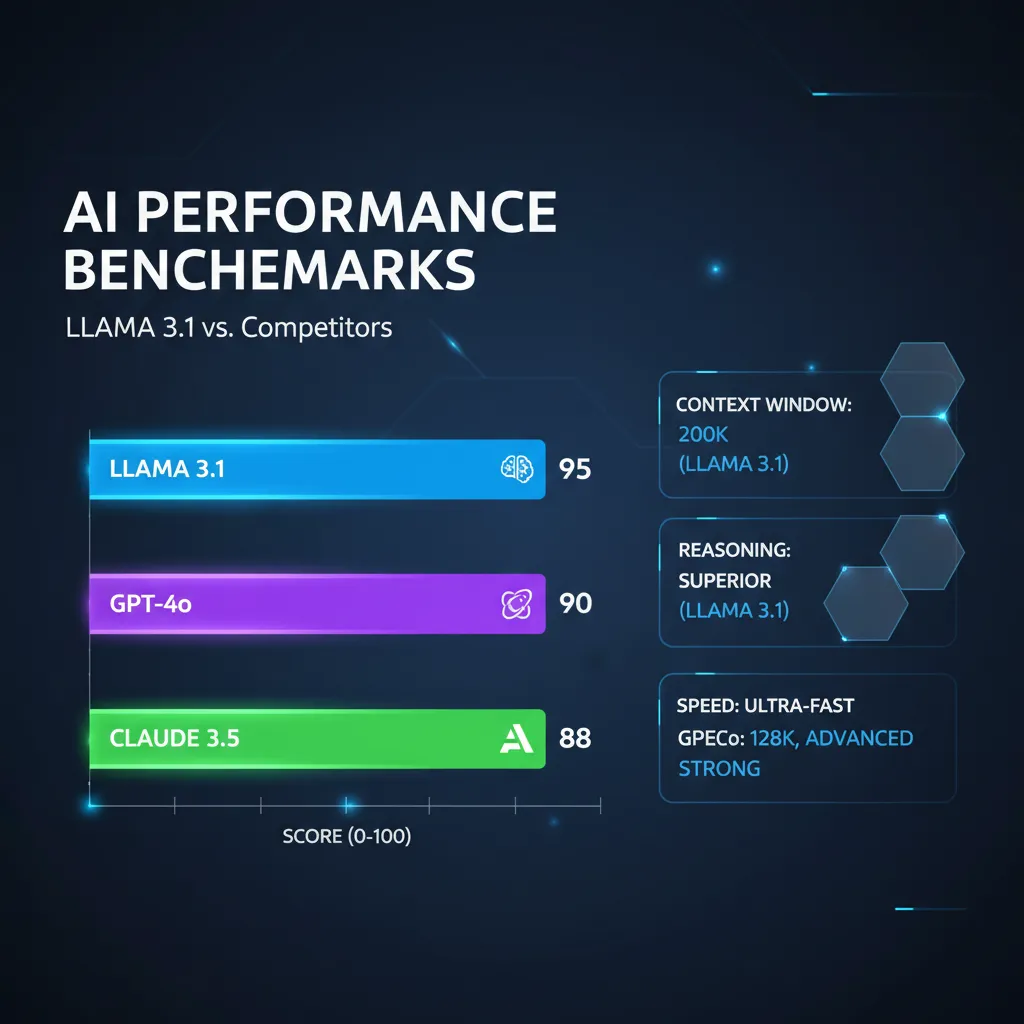

III. The Competitive Edge: Llama 3.1 vs GPT-4o Benchmarks

In the rapidly evolving world of next-gen LLM competition, performance metrics are everything. The central question for most users and enterprises is: How does Llama 3.1 vs GPT-4o stack up?

The answer is complex, but the data is compelling: Llama 3.1 (specifically the 405B variant) has effectively closed the gap and, in several key areas, surpassed the closed-source leaders, particularly within the specific tasks Meta optimized for.

State-of-the-Art Performance Metrics

When analyzing Llama 3.1 benchmarks, researchers look at several standardized tests that measure core model capabilities:

- MMLU (Massive Multitask Language Understanding): Measures general world knowledge and reasoning across 57 subjects. Llama 3.1 shows a noticeable lift in accuracy over its predecessor and often ties or slightly exceeds GPT-4o’s performance on the 405B scale.

- HumanEval: Measures code generation capabilities. Llama 3.1 shows dominant performance here, confirming its effectiveness as a coding assistant AI.

- GSM8K: Measures complex mathematical and logical reasoning. Llama 3.1 shows marked improvement in step-by-step thinking and accurate calculation.

The overarching takeaway from the Llama 3.1 performance data is that the model provides “frontier” performance—the kind that was previously exclusive to models accessible only via paid APIs.

Direct AI Model Comparison

When performing a direct AI model comparison between the top commercially available closed models and the flagship open-source AI model from Meta, the lines are blurring faster than ever.

| Feature / Model | Llama 3.1 (405B) | GPT-4o (OpenAI) | Claude 3.5 Sonnet (Anthropic) |

|---|---|---|---|

| Model Access | Open-Source (Community/Commercial License) | Closed API/Service | Closed API/Service |

| Max Context Window | 100K Tokens | 128K Tokens | 200K Tokens |

| Core Strength | Reasoning, Code Generation, Openness | Multimodal Excellence, Speed | Long Context, Safety, Detailed Writing |

| Cost | Free to Deploy (Hardware required) | Pay-per-Token | Pay-per-Token |

| Llama 3.1 Benchmarks | Leading in open-source, competitive with closed models in core logic. | Excellent across all domains. | Strong performance in long-form text analysis. |

The choice between Llama 3.1 vs GPT-4o often comes down to control and customization. If a company requires total data sovereignty, model Llama 3.1 fine-tuning, and the ability to run the model on proprietary hardware without paying per token, Llama 3.1 is the clear, compelling choice. Its massive Llama 3.1 context window also makes it extremely versatile for enterprise applications that manage large documents.

[Related: googles-ai-overviews-future-of-search-is-here/]

IV. How to Access Llama 3.1: Pathways for Consumers and Developers

One of the greatest differentiators of Meta’s strategy is its dedication to making its next-gen LLM accessible across both consumer and professional platforms. Whether you want to try it out on your phone or build it into a massive cloud application, there is a clear path forward.

Consumer Access via Meta AI

For the average user looking for immediate and effortless interaction, the primary pathway to experience Llama 3.1 is through Meta AI.

Meta AI is Meta’s native intelligent assistant integrated across Facebook, Instagram, WhatsApp, and the dedicated Meta AI website. This is where the public experiences the instantaneous responsiveness and enhanced reasoning of the Llama 3.1 model, likely the smaller, more optimized 8B or 70B versions, tuned for chat performance.

- In-App Chat: Users can prompt Meta AI within their existing social media platforms for quick searches, brainstorming, or image generation (leveraging Llama 3.1’s underlying architecture and supplementary models).

- Real-Time Grounding: Meta AI offers real-time information access, a crucial capability that ensures the model provides accurate and up-to-date answers, mitigating the “knowledge cutoff” problem common in older models.

Using Meta AI is the fastest way to get hands-on experience with the model’s chat-optimized capabilities and understand its tone and responsiveness.

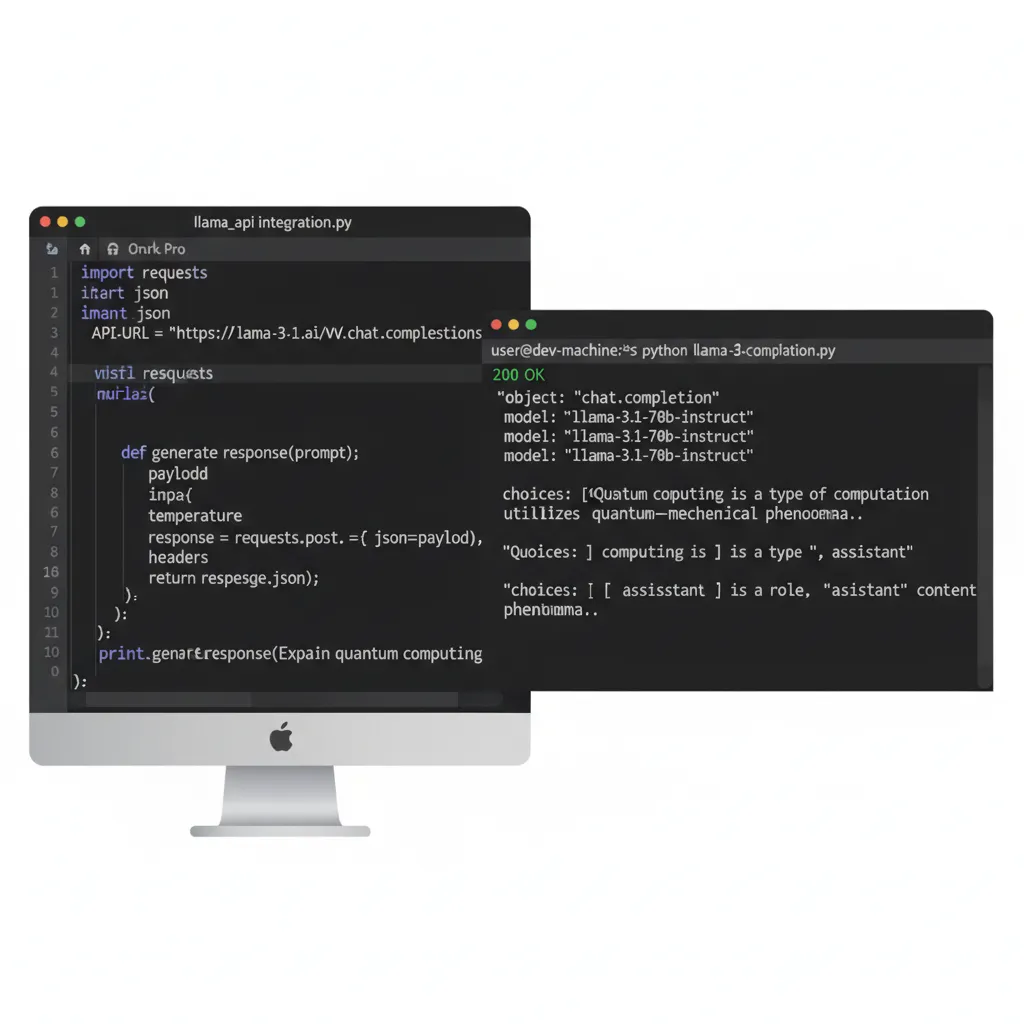

Developer Access: The Llama 3.1 API and Direct Download

For professionals, the true value of Llama 3.1 lies in its deployment flexibility. Meta’s commitment to an open-source AI model approach means they provide multiple avenues for developers to integrate this powerful tool.

The Llama 3.1 API

For developers who prefer managed services without the operational overhead of running massive models like the 405B, the Llama 3.1 API offers a high-performance, scalable solution.

Access through the API is typically offered by cloud providers (like AWS, Microsoft Azure, Google Cloud) or through Meta’s preferred hosting partners. This method is ideal for startups and large enterprises needing guaranteed uptime and straightforward integration into existing cloud architectures.

Direct Download and Deployment

For the purest form of open-source utilization, all major Llama 3.1 models (8B, 70B, and 405B) are available for direct download through platforms like Hugging Face.

This is the preferred route for AI for developers who need to:

- Run the model locally or on proprietary hardware for enhanced security and data privacy.

- Perform advanced Llama 3.1 fine-tuning on highly specific proprietary datasets.

- Experiment with different quantization and deployment techniques.

The open licensing structure (allowing commercial use, subject to specific usage thresholds) dramatically lowers the barrier to entry for innovators seeking to deploy state-of-the-art technology without restrictive vendor lock-in.

V. Strategic Impact: Meta’s New AI Model and the Open-Source Ecosystem

The launch of Llama 3.1 is more than a technical achievement; it is a profound strategic move by Meta that redefines the competitive landscape of large language models 2024. By releasing the most powerful open-source model to date, Meta is accelerating the entire Meta AI ecosystem and forcing proprietary models to continually justify their closed nature and pricing.

Responsible AI Development and Licensing

Meta has approached the release of Llama 3.1 with an emphasis on responsible AI development. The licensing agreement, while generally permissive for commercial use, includes stringent guidelines against using the model for harmful or deceptive practices.

Crucially, the open availability allows for “red-teaming” by the global AI community. Thousands of researchers and developers can rigorously test the model for biases, failure modes, and security vulnerabilities much faster than any single corporate team could, leading to rapid iteration and safer subsequent versions. This collaborative vetting process is one of the strongest arguments in favor of the advancements in open-source AI.

Llama 3.1 Fine-Tuning and Customization Potential

The true revolution brought by Llama is customization. Once developers can download, inspect, and run the model’s weights, the possibilities for specialized application are endless. Llama 3.1 fine-tuning is expected to become a cornerstone of enterprise AI deployment.

Imagine:

- A financial institution fine-tunes the 70B model on decades of proprietary trading documents to create an expert advisor.

- A legal firm customizes the 405B model on jurisdictional case law to create an unparalleled paralegal chatbot technology updates.

- A gaming studio fine-tunes the 8B model on specific character dialogue for hyper-realistic in-game NPCs.

This ability to mold and specialize the base model is why Llama 3.1 is considered a fundamental building block for future technological progress, democratizing the power of AI far beyond what was possible just two years ago.

[Related: ai-personal-growth-master-habits-unlock-potential/]

Impact on AI Industry Trends

The Llama 3.1 release solidifies several major AI industry trends:

- Performance Parity: The expectation that open-source models must lag behind proprietary models is over. Llama 3.1 proves that high-performance, frontier AI can be publicly accessible.

- Hardware Optimization: The success of the smaller 8B and 70B models, optimized for speed and efficiency, will accelerate the development of specialized AI hardware and frameworks tailored for Llama architecture.

- Competition in Context: The push to 100K context is a direct challenge, leading all major competitors to prioritize expanding their context windows, benefiting all users who need to process large amounts of data.

Meta’s relentless pursuit of open excellence is not just altruistic; it ensures that the Meta AI ecosystem remains a key driver in defining the standards and adoption patterns for future generative AI news.

Conclusion: The New Frontier of Open Intelligence

The arrival of Llama 3.1 is a landmark event that signals the maturity and undeniable power of the open-source movement in artificial intelligence. With unparalleled Llama 3.1 model specs, including the behemoth Llama 3.1 405B variant and its game-changing Llama 3.1 context window, Meta has provided the global community with a tool capable of tackling virtually any challenge that a proprietary model can handle, often with greater transparency and customization.

Whether you are a developer looking for the ultimate coding assistant AI, a researcher seeking a platform for Llama 3.1 fine-tuning, or a consumer engaging with the increasingly capable Meta AI assistant, Llama 3.1 represents a massive leap forward. The rigorous Llama 3.1 benchmarks confirm its status as a state-of-the-art LLM, guaranteeing that the competition between Llama 3.1 vs GPT-4o will continue to drive innovation benefiting everyone.

The future of intelligence is increasingly becoming open, and Llama 3.1 is the latest, and most powerful, testament to that vision. Start experimenting today, build something transformative, and join the rapidly growing community accelerating the advancements in open-source AI.

FAQs: Grounded Insights on Llama 3.1

Q1. What is Llama 3.1 and why is it important?

Llama 3.1 is the latest, most powerful family of large language models (LLMs) released by Meta, known for being an open-source AI model. It is important because its flagship 405B version achieves state-of-the-art performance, directly competing with closed-source models while offering the flexibility and transparency of open licensing to developers globally.

Q2. What is the Llama 3.1 release date?

The Llama 3.1 release date was July 2024, marking a significant strategic refresh from its predecessor, Llama 3. The release included the optimized 8B, 70B, and the massive 405B model weights, making them immediately available for download and deployment.

Q3. How does the Llama 3.1 405B model compare to GPT-4o?

The Llama 3.1 405B model is engineered to be highly competitive with GPT-4o. While GPT-4o maintains a strong edge in multimodal integration, Llama 3.1 often shows superior performance in specific areas like complex code generation (as an advanced coding assistant AI) and logical reasoning, according to independent Llama 3.1 benchmarks. The choice often comes down to the user’s need for an open-source model versus a managed API service.

Q4. What is the significance of the 100K Llama 3.1 context window?

The Llama 3.1 context window of 100,000 tokens allows the model to process significantly longer inputs, such as entire legal documents, long technical manuals, or vast code repositories, in a single interaction. This massive capacity improves coherence, reasoning over long distances, and overall Llama 3.1 performance in complex enterprise tasks.

Q5. Is Llama 3.1 fully open-source and can I use it commercially?

Yes, Meta Llama 3.1 is considered an open-source AI model under a permissive license (similar to Llama 3). This license permits both research and commercial use, though enterprises deploying the model at a massive scale (above certain monthly active users) must still notify Meta, ensuring compliance with responsible AI development guidelines.

Q6. How can I start building applications with the Llama 3.1 API?

How to access Llama 3.1 for development involves using the Llama 3.1 API through major cloud providers (like AWS, Azure) or platforms that host the model. You can also download the model weights directly and deploy them on your own infrastructure, which is ideal for advanced Llama 3.1 fine-tuning and complete model customization.

Q7. Does Llama 3.1 support multimodal AI capabilities?

While the initial public release of Meta’s new AI model is primarily a text-based LLM, the architecture is designed to support future multimodal AI capabilities. Meta has demonstrated strong visual and audio processing capabilities internally, and these are expected to be integrated, either through future models or fine-tuned versions of Llama 3.1.

Q8. What does Llama 3.1 mean for the future of the Meta AI ecosystem?

Llama 3.1 is the backbone of the entire Meta AI ecosystem. It powers the Meta AI consumer assistant across all their platforms (WhatsApp, Instagram, etc.). By making the core engine powerful and open, Meta encourages global developers to build tools and services that implicitly rely on and extend the Llama architecture, cementing its dominance in the generative AI news and development community.