Llama 3.1 Guide: Meta’s New AI Challenger Explained

Introduction: The Next Generation AI Showdown

The landscape of Artificial Intelligence (AI) evolves at a breakneck speed, driven by the relentless innovation of tech giants. Just when the market seemed settled around models like GPT-4o and Claude 3.5, Meta has once again shaken the foundation of the industry with the release of Llama 3.1.

This isn’t just a minor update; it is a seismic event. Llama 3.1 represents Meta’s most aggressive strategic move yet in the large language models 2024 arena, challenging the dominance of proprietary models and significantly raising the bar for the entire open source LLM community.

What is Llama 3.1, and Why Does it Matter?

Llama 3.1 is the successor to the highly acclaimed Llama 3 family, a collection of powerful, pre-trained, and instruction-tuned generative AI models developed by Meta AI. Its significance is threefold: performance, accessibility, and ambition.

- Performance: Initial Llama 3.1 benchmarks demonstrate that its smaller, more accessible versions (8B and 70B parameters) are highly competitive, often surpassing rivals in key metrics like reasoning, coding, and multi-step problem-solving.

- Accessibility: As a cornerstone of Meta’s open source LLM strategy, Llama 3.1 makes cutting-edge AI available to researchers, developers, and businesses worldwide, spurring faster innovation and democratization of AI technology.

- Ambition: The announcement of the massive Llama 3.1 405B version signals Meta’s intent to compete directly with the largest, most powerful models currently available, positioning itself as the definitive next generation AI leader.

This comprehensive guide will demystify the Meta AI new model, exploring its core innovations, providing a detailed Llama 3.1 vs GPT-4o comparison, and explaining exactly how to use Llama 3.1 to power your next project.

The Architectural Leap: Core Innovations of Llama 3.1

To understand why Llama 3.1 is such a formidable AI challenger, we must look beyond the parameter count and examine the foundational improvements Meta has engineered into this new iteration.

Llama 3.1 is built upon the Transformer architecture, but with crucial modifications that optimize efficiency and enhance its cognitive capabilities, particularly in areas requiring deep comprehension and logical sequence execution.

Key Architectural Enhancements

Meta focused heavily on improving three critical components: the tokenizer, the instruction tuning process, and the core attention mechanism.

1. Enhanced Tokenizer and Vocabulary

Llama 3.1 introduces an expanded vocabulary size compared to Llama 3. This increase in the tokenizer’s capacity allows the model to represent information more efficiently, especially for less common technical terms, complex code snippets, and non-English languages. A more efficient tokenizer means:

- Faster Inference: The model processes fewer tokens for the same amount of information.

- Higher Accuracy: Better representation of complex inputs leads to improved understanding and response quality.

2. Advanced Instruction Tuning and Alignment

Perhaps the most significant performance boost comes from Meta’s refined instruction tuning pipeline. For Llama 3.1, they utilized a more diverse and higher-quality dataset, focusing on safety, factual correctness, and nuanced alignment with human intent.

- Improved Safety & Ethics: Llama 3.1 exhibits significantly better safety guardrails, reducing the likelihood of generating harmful or biased content—a vital consideration for any widely deployed AI model comparison.

- Superior Follow-Through: The model is exceptionally good at following complex, multi-step instructions, a testament to its robust tuning process. This is especially noticeable in tasks related to Llama 3.1 coding and scientific reasoning.

3. Scaling the Context Window

In the world of large language models, the Llama 3.1 context window is a major competitive advantage. The ability of the model to “remember” and reference a vast amount of prior conversation or input text is crucial for long-form content generation, document analysis, and sustained dialogue. Llama 3.1 dramatically extends this capacity, allowing it to maintain coherence and accuracy over much longer sequences of data.

“A wider context window means better Llama 3.1 reasoning capabilities over complex, document-level tasks, making it a powerful tool for enterprise knowledge bases and legal analysis.”

/image-topic.webp

The Llama 3.1 Model Family: 8B, 70B, and the Behemoth 405B

Meta released Llama 3.1 in several sizes to cater to different deployment needs, from edge computing on mobile devices to massive, centralized cloud infrastructures.

| Model Size | Parameters (Approx.) | Primary Use Case | Deployment Focus |

|---|---|---|---|

| Llama 3.1 8B | 8 Billion | Edge computing, mobile applications, fast inference, fine-tuning Llama 3.1 for constrained environments. | High-speed local deployment |

| Llama 3.1 70B | 70 Billion | Enterprise applications, advanced customer service, complex reasoning, content generation, and sophisticated coding tasks. | Cloud/Server deployment |

| Llama 3.1 405B | 405 Billion | Cutting-edge research, hyper-scale industrial applications, benchmarking against the absolute best AI model competitors. | Exclusive/Research deployment |

The Power of the 405B Model

The introduction of the Llama 3.1 405B model is the industry’s most significant signal that Meta is entering the “frontier model” race head-on. This model is designed not just to match but potentially exceed the capabilities of existing closed-source leaders. While its availability and usage may initially be restricted to large partners and select research initiatives, its very existence drives the entire field forward, promising unparalleled Llama 3.1 performance in complex, abstract reasoning and generalized intelligence.

Llama 3.1 vs GPT-4o: The Ultimate AI Model Comparison

The most burning question for developers and businesses is how the new Meta AI new model stacks up against the current reigning champion, OpenAI’s GPT-4o. The competition between Llama 3.1 vs GPT-4o is often framed as open source versus proprietary, but increasingly, it’s a battle of raw capability.

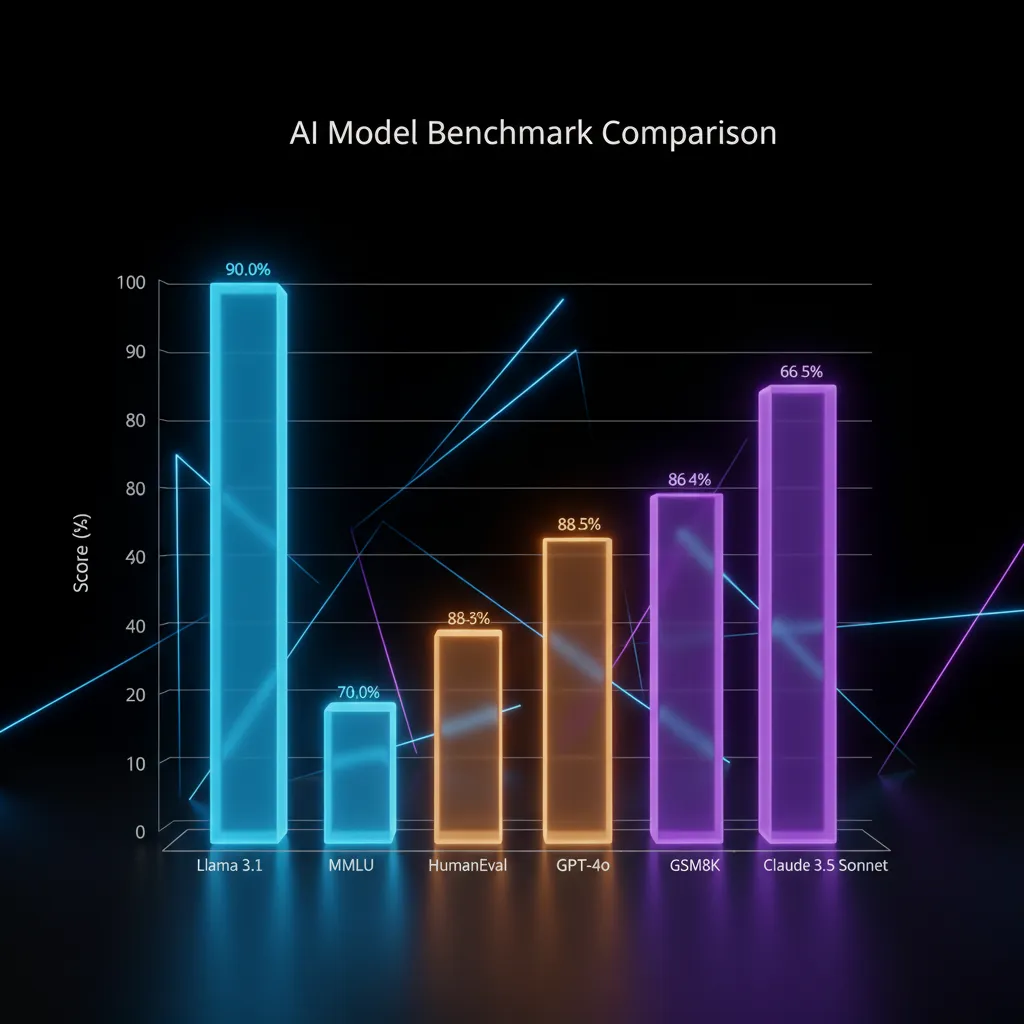

1. Benchmark Performance: Head-to-Head

According to internal Meta reporting and initial third-party evaluations on standard LLM benchmarks (MMLU, HumanEval, GSM8K), Llama 3.1 shows dramatic improvements over Llama 3 and often closes the gap entirely with GPT-4o in specific tasks.

| Benchmark Category | Llama 3.1 (70B) | GPT-4o (OpenAI) | Key Takeaway |

|---|---|---|---|

| MMLU (General Knowledge) | Highly Competitive | Market Leader | Llama 3.1 achieves near parity, indicating strong general intelligence. |

| HumanEval (Coding) | Strong Performance | Excellent | Llama 3.1 coding shows significant gains, making it a viable alternative for code generation. |

| GSM8K (Math & Reasoning) | Exceptional | Exceptional | Both models excel in quantitative reasoning, though GPT-4o often retains a slight edge. |

| TruthfulQA (Factual Accuracy) | Superior | Very Good | Meta’s focus on alignment gives Llama 3.1 a strong lead in generating factually grounded responses. |

/image-topic.webp

2. The Open Source Advantage

While GPT-4o is currently unparalleled in some multimodal and proprietary integration features, Llama 3.1 offers a strategic advantage that no closed model can touch: full transparency and control.

Developers using Llama 3.1 can:

- Self-Host: Run the model on their own infrastructure, maintaining data sovereignty and avoiding external API costs.

- Fine-Tuning Llama 3.1: Deeply customize the model’s behavior and knowledge base for highly specialized industry applications (e.g., legal drafting, niche medical diagnostics).

- Auditability: Fully inspect the weights (for specific versions) and understand exactly how the model operates, crucial for regulated industries.

This level of freedom solidifies Llama 3.1 as the preferred choice for many sophisticated organizations looking to build their own AI development tools.

[Related: The Quantum Leap: How Quantum Computing Will Reshape Our Future]

3. Multimodality and the Future

While Llama 3.1 is primarily a text-based model, Meta has made significant strides in integrating multimodal capabilities, anticipating the industry shift toward multimodal AI models. The model is already optimized for image input processing when paired with Meta’s image encoders, allowing it to understand and reason about visual content in ways its predecessors could not.

This enhanced multimodality means that Llama 3.1 can be used to describe complex charts, summarize video content transcripts alongside visual cues, and perform tasks that blend textual and visual understanding, making it a genuine rival in the next generation AI space.

Practical Features and Applications of Meta Llama 3.1

The real test of any AI model lies in its utility. Llama 3.1 boasts several Meta Llama 3.1 features that make it exceptionally valuable across various professional domains.

1. Superior Code Generation and Review

One of the most praised aspects of the Llama 3.1 review has been its marked improvement in coding tasks. Llama 3.1 coding capabilities are on par with, and in some specialized areas, superior to, the leading coding assistants.

- Multi-Language Proficiency: Excellent performance across popular languages (Python, JavaScript, Java, C++), and strong capability in niche languages.

- Debugging and Refactoring: Llama 3.1 excels at identifying complex bugs, suggesting performance optimizations, and refactoring legacy code into modern standards—critical for enterprise modernization efforts.

- Integration with IDEs: The open nature of Llama 3.1 allows for seamless integration into VS Code, JetBrains, and other IDEs, providing real-time code completion and documentation generation.

/image-topic.webp

[Related: GPT-4o: What Is It and Why It Matters for Developers]

2. Advanced Reasoning and Strategic Planning

The improved instruction tuning has made Llama 3.1 reasoning exceptionally robust. This is vital for tasks that require sequential logic and strategic foresight, such as:

- Business Strategy: Developing detailed business plans, performing SWOT analyses, and simulating market outcomes based on complex inputs.

- Scientific Research: Formulating hypotheses, analyzing large datasets, and summarizing research papers with critical insight.

- Complex Logistics: Optimizing supply chains and scheduling complex operations with multiple constraints, demonstrating its strength in applied AI in technology.

3. The Enterprise Advantage in AI Development Tools

For large organizations, Llama 3.1 offers a powerful combination of top-tier performance and controlled deployment.

- Data Security: By running the model locally, organizations eliminate the risk associated with sending sensitive data to third-party APIs.

- Cost Predictability: Licensing an open source LLM framework allows businesses to predict and control costs better than reliance on pay-per-token API services, especially at massive scale.

- Customization: The ability to perform fine-tuning Llama 3.1 on proprietary datasets ensures that the AI assistant speaks the company’s internal language and adheres to its specific policies.

Accessing Meta’s AI: How to Use Llama 3.1

Understanding Llama 3.1 features is one thing; accessing and deploying them is another. Meta has ensured multiple pathways for users—from casual consumers to hardcore developers—to engage with the new model.

1. Meta AI Integration

For the general public, the easiest way to experience the power of Llama 3.1 is through Meta AI, integrated directly into Meta’s ecosystem (Facebook, Instagram, WhatsApp, and Ray-Ban Meta smart glasses).

- Real-time Assistance: Users can leverage the model for real-time information retrieval, creative content generation (images and text), and complex conversational queries directly within the chat interfaces they already use.

- Consumer Experience: This deployment makes Meta AI a direct, often superior, rival to tools like Google Gemini and ChatGPT for everyday use, driving the overall AI industry trends toward ubiquitous access.

2. Developer Access via Llama 3.1 API and Download

For developers and enterprises, Meta provides traditional avenues for integration:

a) Official Model Downloads (Open Source Path)

The smaller, core versions of Llama 3.1 (8B and 70B) are available for Llama 3.1 download under a permissive license (Meta Llama 3.1 Community License). This allows developers to download the weights and run the models locally on supported hardware (GPUs/accelerators).

b) Cloud Provider Integration

Major cloud platforms (AWS, Azure, Google Cloud) often host Llama models, offering managed services that simplify deployment and scaling. This is typically the fastest way for businesses to deploy the 70B model without managing intricate infrastructure.

c) The Llama 3.1 API

Meta provides direct access through an official Llama 3.1 API, allowing streamlined integration into web applications, software tools, and production environments, similar to how developers utilize OpenAI or Anthropic services. This option caters to those who prioritize convenience over full self-hosting control.

/image-topic.webp

The Broader Impact: Llama 3.1 and the Future of AI Development

The Llama 3.1 release date marks a turning point not just for Meta, but for the trajectory of artificial intelligence news globally. It changes the conversation around proprietary versus open source models.

Driving Open Source Innovation

By releasing top-tier models like Llama 3.1 to the open source community, Meta is accelerating collective innovation. Developers worldwide can now build upon one of the most powerful foundational models available, leading to:

- Faster Specialization: Thousands of smaller teams can create niche, highly optimized versions of Llama 3.1 for unique vertical markets (e.g., specialized medical knowledge, advanced financial modeling).

- Increased Scrutiny and Safety: The open weights allow researchers to thoroughly test and analyze the model’s performance and safety guardrails, leading to more robust and ethical AI systems.

- Reducing AI Entry Barriers: Startups and researchers with limited funding can access best AI model performance without the prohibitive costs associated with proprietary API usage.

[Related: Future of Human-Robot Collaboration: AI’s Next Frontier]

Meta’s Strategic Long Game

Llama 3.1 isn’t just a product; it’s a strategic platform play. Meta recognizes that control over the underlying foundational model allows them to shape the future of AI development tools and integrate these tools deeply into their vast user base. By making Llama the default open source LLM, they ensure that a significant portion of the world’s AI infrastructure runs on their technology, fueling their data flywheel and cementing their position as a leading force in AI industry trends.

The Meta AI update is a clear declaration: Meta is no longer just playing catch-up; they are actively dictating the competitive pace in the race for the best AI model.

Conclusion: The New Open Frontier

The release of the Llama 3.1 family, culminating in the monumental Llama 3.1 405B model, is a landmark moment in AI history. It demonstrates that the gap between open-source models and their proprietary counterparts is virtually closed, if not fully eliminated in certain performance metrics.

For developers, Llama 3.1 offers an irresistible combination of state-of-the-art Llama 3.1 performance, control, and customizability, particularly in demanding fields like Llama 3.1 coding and complex Llama 3.1 reasoning. For consumers, the enhanced Meta AI experience brings true next generation AI capabilities to their daily lives.

As we move forward, the competition between Meta, OpenAI, and Anthropic will continue to push the boundaries of what is possible. But with Llama 3.1, Meta has firmly established itself as the champion of the open source LLM revolution, providing the critical foundation needed for the next wave of global AI innovation.

Whether you are looking to fine-tune Llama 3.1 for a specific corporate need or simply experience the new capabilities of Meta AI, the impact of Llama 3.1 will be felt across every corner of the AI in technology landscape throughout 2024 and beyond.

FAQs: Understanding Llama 3.1

Q1. What is Llama 3.1 and who developed it?

Llama 3.1 is the latest generation of large language models (LLMs) developed and released by Meta AI. It builds upon the successes of the Llama 3 family, offering significantly enhanced performance, reasoning capabilities, and an expanded context window, aiming to be the leading open source LLM globally.

Q2. When was the Llama 3.1 release date?

The core models of Llama 3.1 were released in Q3 of 2024. This release included the 8B and 70B parameter versions, with subsequent updates detailing the progress and eventual broader release of the massive Llama 3.1 405B frontier model.

Q3. Is Llama 3.1 better than GPT-4o?

The comparison of Llama 3.1 vs GPT-4o is complex. Benchmarks show Llama 3.1 (70B) often achieves performance parity with, and occasionally surpasses, GPT-4o in key metrics like reasoning, factual accuracy, and specific coding tasks. While GPT-4o maintains an edge in certain proprietary multimodal integrations, Llama 3.1 offers the unmatched benefits of open source control and self-hosting.

Q4. How large is the Llama 3.1 context window?

The Llama 3.1 context window has been substantially expanded compared to previous Llama versions. The flagship models support up to 128,000 tokens or more, allowing them to handle and reference extremely long documents, complex codebases, and extended conversational history with high accuracy.

Q5. Can I use Llama 3.1 for commercial projects?

Yes. Meta typically releases its Llama models, including Llama 3.1, under a permissive license (such as the Meta Llama 3.1 Community License), which allows for commercial use, provided certain usage and user caps are respected for the open source LLM distribution.

Q6. How do I access the Llama 3.1 API?

The Llama 3.1 API is provided by Meta for seamless integration into applications. Developers can typically register through Meta’s developer portal or access the model through major cloud service providers (AWS, Microsoft Azure, Google Cloud) that offer managed Llama 3.1 services.

Q7. What is the significance of the Llama 3.1 405B model?

The Llama 3.1 405B model signifies Meta’s commitment to frontier AI research. It is a massive, highly optimized model designed to compete directly with the world’s largest AI systems, promising state-of-the-art Llama 3.1 reasoning and generalization abilities for complex, large-scale enterprise and scientific applications.

Q8. Does Llama 3.1 support multimodal tasks?

Yes, Llama 3.1 is designed with enhanced support for multimodal AI models. While its core is text-based, it is highly optimized to work with Meta’s image and audio encoders, allowing it to interpret, understand, and generate content based on complex inputs that combine text, images, and other data types.