Llama 3.1 for Business: Powering Next-Gen Enterprise AI Solutions

The generative AI landscape is evolving at a breakneck pace, and the release of Meta’s Llama 3.1 marks a pivotal moment for businesses. No longer just a tool for developers and researchers, this latest iteration is a powerful, open-source platform poised to become the engine for next-gen enterprise AI. For organizations looking to gain a competitive edge, understanding and adopting Llama 3.1 isn’t just an option—it’s a strategic imperative.

From small businesses seeking to automate customer service to large enterprises aiming to build proprietary data moats, Llama 3.1 offers an unprecedented blend of performance, flexibility, and control. This guide dives deep into Meta Llama 3.1 for companies, exploring its key features, practical business use cases, and a strategic roadmap for implementation. We’ll cover everything from secure LLM deployment to cost-benefit analysis, giving you the insights needed to harness this transformative technology for tangible business innovation.

What is Llama 3.1 and Why Should Your Business Care?

Llama 3.1 is the latest family of large language models (LLMs) from Meta AI. Unlike proprietary systems such as OpenAI’s GPT-4o or Google’s Gemini, it is released with open weights under Meta’s Llama 3.1 Community License. It is often called open source, although the license carries some usage conditions. This openness is its superpower for businesses: companies can download, modify, and run the model on their own infrastructure, whether in the cloud or on-premise. That gives them far greater control over data, customization, and costs.

But it’s not just about being open. Llama 3.1 brings significant architectural improvements that directly translate to business value.

Beyond the Hype: Key Features That Matter for Enterprise

To appreciate the benefits Llama 3.1 offers organizations, we need to look under the hood at the features that matter most for demanding enterprise workloads.

- Massive 405B Parameter Model: According to Meta’s published evaluations, the new flagship model, Llama 3.1 405B, is competitive with leading closed-source models on many industry benchmarks. This means businesses no longer have to compromise as heavily on capability to gain the benefits of open source.

- Expansive 128K Context Window: Imagine feeding an entire business plan, a complex legal contract, or a large batch of customer feedback into the AI at once. The 128K-token context window lets Llama 3.1 process and reason over roughly 96,000 words of English text in a single prompt, using the common rule of thumb of about 0.75 words per token. This enables sophisticated document analysis, RAG (Retrieval-Augmented Generation), and complex multi-step reasoning that was previously impractical.

- Enhanced Reasoning and Coding Prowess: Llama 3.1 demonstrates a significant leap in logical reasoning, planning, and code generation. For businesses, this translates into more reliable automated workflows, powerful data analysis tools, and accelerated software development cycles.

- Efficiency Across Model Sizes: Alongside the flagship, the smaller 8B and 70B models offer a strong balance of performance and resource consumption. The 8B model fits on a single GPU and, with quantization, can serve some on-device and edge scenarios. The 70B model sits between the 8B and 405B in both capability and hardware cost. This range of sizes is central to any cost-benefit analysis of Llama 3.1.
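The word estimate for the context window is simple arithmetic. Here is a minimal sketch of that calculation, plus a check of whether a document is likely to fit. The 0.75 words-per-token ratio and the 4,000-token reply reserve are assumptions for English prose, not properties of the Llama 3.1 tokenizer. For exact counts, use the model’s actual tokenizer.

```python
# Rough check of whether a document fits in Llama 3.1's 128K-token context window.
# ASSUMPTION: ~0.75 English words per token is a rule of thumb, not a property of
# the Llama 3.1 tokenizer; use the real tokenizer for exact counts.

CONTEXT_TOKENS = 128_000      # nominal context size quoted in the text
WORDS_PER_TOKEN = 0.75        # hypothetical average for English prose

def approx_word_capacity(context_tokens: int = CONTEXT_TOKENS,
                         words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Approximate number of words that fit in the context window."""
    return int(context_tokens * words_per_token)

def fits_in_context(word_count: int, reserved_for_output: int = 4_000,
                    context_tokens: int = CONTEXT_TOKENS,
                    words_per_token: float = WORDS_PER_TOKEN) -> bool:
    """True if a document of `word_count` words likely fits, leaving room for the reply."""
    estimated_tokens = word_count / words_per_token
    return estimated_tokens + reserved_for_output <= context_tokens

print(approx_word_capacity())     # 96000
print(fits_in_context(60_000))    # True  (~80,000 tokens + 4,000 reserved)
print(fits_in_context(95_000))    # False (~126,667 tokens + 4,000 reserved)
```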

This combination of raw power, a vast context window, and operational efficiency makes Llama 3.1 a formidable enterprise-grade LLM capable of tackling real-world business challenges.

Unlocking Business Value: Top Llama 3.1 Enterprise Applications

Theory is one thing; practical application is another. The true potential of Llama 3.1 for generative AI in business is realized when it’s applied to specific, high-impact problems. Here are some of the most compelling Llama 3.1 business use cases across various departments.

Hyper-Personalized Customer Experiences

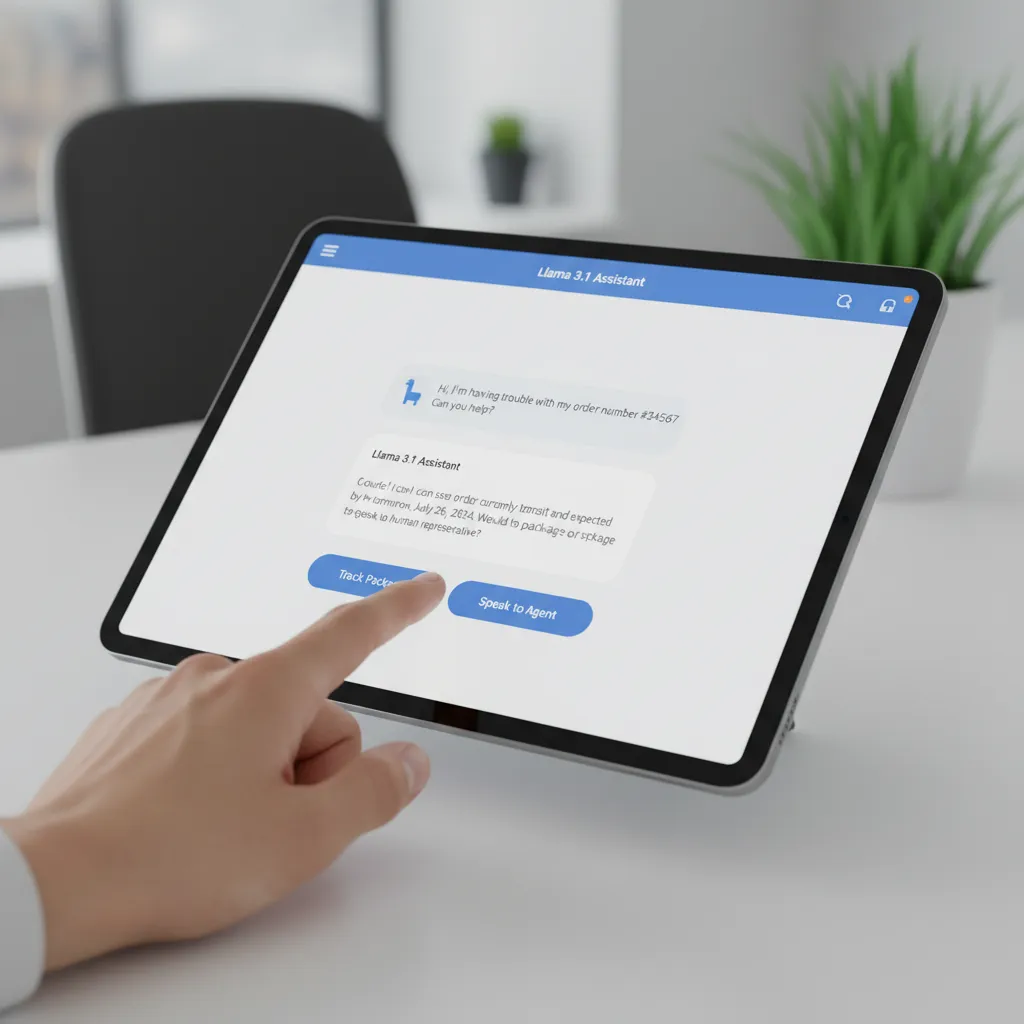

Generic, robotic customer service is a relic of the past. Llama 3.1 enables a new era of dynamic, context-aware customer interactions.

- AI-Powered Support Agents: Deploy chatbots and virtual assistants that understand complex customer queries, draw on your knowledge base through retrieval (the large context window leaves room for long retrieved passages), and provide nuanced, empathetic responses. They can handle everything from simple order tracking to complex technical troubleshooting.

- Proactive Customer Outreach: Analyze customer communication logs to identify patterns and sentiment. Llama 3.1 can help draft personalized follow-up emails, special offers, or support check-ins, turning your customer service from a reactive cost center into a proactive revenue driver.
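A support agent built on an instruct model needs each conversation rendered in the model’s chat format. The sketch below shows the raw Llama 3 / 3.1 instruct layout: header tokens around each role, with `<|eot_id|>` closing each turn. In practice, prefer your serving stack’s built-in chat template, such as Hugging Face `tokenizer.apply_chat_template`. That template may add extra lines to the system message. The company name and system prompt are hypothetical.

```python
# Minimal sketch: render a support-agent conversation in the Llama 3 / 3.1 instruct
# prompt format. In practice, prefer your serving stack's chat template
# (e.g. Hugging Face `tokenizer.apply_chat_template`), which may add extra system text.

def render_llama31_prompt(system: str, turns: list[tuple[str, str]]) -> str:
    """turns is a list of (role, content) pairs with role in {"user", "assistant"}."""
    parts = ["<|begin_of_text|>"]
    parts.append(f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>")
    for role, content in turns:
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>")
    # Open an assistant header so the model generates the next reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)

# "ExampleCo" is a hypothetical company name.
prompt = render_llama31_prompt(
    "You are a friendly support agent for ExampleCo. Answer only from the provided policy text.",
    [("user", "Where is my order #1234?")],
)
print(prompt)
```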

Streamlined Operations and Intelligent Automation

Efficiency is the lifeblood of any successful enterprise. Scaling AI with Llama 3.1 can unlock new levels of operational excellence.

- Internal Knowledge Management: Transform your messy internal wikis and document repositories into an intelligent search engine. Employees can ask natural language questions (“What was our Q3 revenue in the EMEA region last year?”) and get precise answers with sources, saving countless hours of searching.

- Automated Report Generation: Connect Llama 3.1 to your business intelligence tools to automatically generate daily, weekly, or monthly reports. It can summarize key metrics, identify anomalies, and even draft the executive summary, freeing up your analytics team for more strategic work.

- Supply Chain and Logistics Optimization: Process unstructured data from shipping manifests, weather reports, and supplier communications to predict potential delays and suggest alternative routes or suppliers, enhancing resilience and efficiency.
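“Answers with sources” usually means a retrieval step runs before the model is called. Below is a deliberately tiny sketch of that step. It scores documents by word overlap with the question and then builds a prompt that tags each passage with its source ID. The document IDs and text are hypothetical, and real deployments typically use embedding-based vector search instead of keyword overlap.

```python
import re
from collections import Counter

# Toy retrieval step for an internal knowledge assistant (RAG).
# ASSUMPTIONS: documents are small in-memory strings with hypothetical IDs, and
# relevance is plain word overlap; real systems typically use embedding search.

STOPWORDS = {"the", "a", "an", "in", "of", "to", "was", "our", "what", "is"}

def tokenize(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]

def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return IDs of up to k documents sharing the most words with the question."""
    q = Counter(tokenize(question))
    scores = {
        doc_id: sum(min(q[w], c) for w, c in Counter(tokenize(text)).items())
        for doc_id, text in docs.items()
    }
    ranked = sorted(scores, key=lambda d: scores[d], reverse=True)
    return [d for d in ranked[:k] if scores[d] > 0]

def build_prompt(question: str, docs: dict[str, str], ids: list[str]) -> str:
    """Put retrieved passages, tagged with their source IDs, ahead of the question."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ids)
    return ("Answer using only the sources below and cite their IDs.\n\n"
            f"{context}\n\nQuestion: {question}")

docs = {
    "finance/q3-emea.md": "Q3 revenue in the EMEA region was reported in the quarterly finance review.",
    "hr/leave-policy.md": "Employees accrue paid leave monthly.",
    "it/vpn.md": "Connect to the VPN before accessing internal tools.",
}
question = "What was our Q3 revenue in the EMEA region?"
ids = retrieve(question, docs)
print(ids)  # ['finance/q3-emea.md']
print(build_prompt(question, docs, ids))
```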

Data-Driven Decision Making and Advanced Analytics

Llama 3.1 is a powerful tool for extracting insights from unstructured data such as text, emails, and reports, which is often estimated to make up around 80% of enterprise data.

- Financial Modeling and Risk Analysis: Accelerate financial modeling by having Llama 3.1 generate boilerplate code, analyze financial reports for key risk factors, and summarize market news to inform investment strategies. Its reasoning abilities may help surface subtle trends that traditional rule-based analyses miss.

- Legal and Compliance: Drastically reduce the time spent on contract review. Fine-tune Llama 3.1 on your legal standards to automatically scan documents for non-compliant clauses, summarize key obligations, and compare versions, empowering legal teams to focus on high-level strategy.
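For the “compare versions” task, one practical pattern is to compute the textual diff deterministically and then ask the model to explain it. This avoids asking the model to spot every change on its own. The sketch uses Python’s standard `difflib`. The clause text, file labels, and review instruction are hypothetical.

```python
import difflib

# Sketch: diff two versions of a contract before asking the model to summarize
# what changed. The clause text and file labels are hypothetical examples.

def contract_diff(old: str, new: str) -> list[str]:
    """Return unified-diff lines (no trailing newlines) between two contract versions."""
    return list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile="contract_v1", tofile="contract_v2", lineterm="",
    ))

def review_prompt(diff_lines: list[str]) -> str:
    """Wrap the diff in an instruction for a legal-review model."""
    return ("Summarize each change below and flag any clause that conflicts with "
            "our standard terms.\n\n" + "\n".join(diff_lines))

v1 = "1. Payment due within 30 days.\n2. Liability capped at fees paid."
v2 = "1. Payment due within 60 days.\n2. Liability capped at fees paid."
changes = contract_diff(v1, v2)
print(review_prompt(changes))
```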

Accelerated Content Creation and Marketing

Content is king, but creation is a bottleneck. Llama 3.1 can act as a tireless creative partner for your marketing teams.

- Personalized Marketing at Scale: Move beyond `[First_Name]` tokens. Use Llama 3.1 to generate highly personalized email campaigns, ad copy, and landing pages based on individual customer data, purchase history, and browsing behavior.

- Content Strategy and Ideation: Use the model as a brainstorming partner. Feed it market research and competitor analysis to generate blog post ideas, social media calendars, and video scripts that resonate with your target audience. This use case is especially valuable for small businesses with limited marketing staff.
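Going beyond a name placeholder mostly means giving the model structured customer context. This minimal sketch turns a customer record into a generation prompt. The `Customer` fields, the offer, and the prompt wording are hypothetical, so adapt them to your own CRM schema and brand guidelines.

```python
from dataclasses import dataclass

# Sketch: turn a customer record into a generation prompt for personalized copy.
# The Customer fields and prompt wording are hypothetical; adapt to your CRM schema.

@dataclass
class Customer:
    first_name: str
    recent_purchases: list[str]
    browsed_categories: list[str]

def marketing_prompt(c: Customer, offer: str) -> str:
    purchases = ", ".join(c.recent_purchases) or "none on record"
    browsing = ", ".join(c.browsed_categories) or "none on record"
    return (
        f"Write a short, friendly email to {c.first_name} promoting: {offer}.\n"
        f"Recent purchases: {purchases}.\n"
        f"Recently browsed: {browsing}.\n"
        "Reference at most one past purchase and do not invent details."
    )

print(marketing_prompt(Customer("Dana", ["trail running shoes"], ["hydration packs"]),
                       "15% off hydration gear"))
```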

Strategic Implementation: Your Guide to Adopting Llama 3.1

Successfully integrating Llama 3.1 requires more than just technical expertise; it demands a clear strategy. This Llama 3.1 implementation guide breaks the process down into manageable steps.

Step 1: Define Your AI Strategy and Use Case

Before writing a single line of code, define the business problem you want to solve.

- Start with “Why”: Identify a specific pain point or opportunity. Is it reducing customer support tickets? Speeding up market research? Automating compliance checks?

- Assess ROI: A good starting point is a use case that is high-impact but low-complexity. Calculate the potential time savings, cost reduction, or revenue increase to build a strong business case. A well-defined Llama 3.1 AI strategy is crucial for securing buy-in and measuring success.
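A first-pass ROI estimate can be a few lines of arithmetic. The sketch below compares labor savings against build and running costs over a fixed horizon. Every number in it is a hypothetical placeholder, and it ignores factors such as adoption rate and quality risk.

```python
# Back-of-the-envelope ROI for a candidate use case. Every input below is a
# hypothetical placeholder; substitute your own measured figures.

def simple_roi(hours_saved_per_month: float, loaded_hourly_cost: float,
               monthly_run_cost: float, one_time_cost: float, months: int = 12) -> float:
    """ROI over `months`: (benefit - total cost) / total cost."""
    benefit = hours_saved_per_month * loaded_hourly_cost * months
    total_cost = one_time_cost + monthly_run_cost * months
    return (benefit - total_cost) / total_cost

# e.g. 400 support hours saved/month at $50/h, $6,000/month to run, $40,000 to build
roi = simple_roi(400, 50, 6_000, 40_000)
print(f"{roi:.0%}")  # 114%
```

With these placeholder inputs, the twelve-month benefit is $240,000 against $112,000 in total cost.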

Step 2: Choose Your Deployment Model: Cloud vs. On-Premise

One of Llama 3.1’s biggest competitive advantages is deployment flexibility.

- Managed Cloud Services: Platforms like AWS, Google Cloud, and Microsoft Azure offer Llama 3.1 as a managed service. This is the fastest way to get started, with less operational overhead.

- Private Cloud / On-Premise: For businesses with strict data privacy requirements or those operating in highly regulated industries (like finance or healthcare), a private Llama 3.1 deployment is the gold standard. It gives you complete control over your data and infrastructure, so sensitive data never has to leave your secure environment.
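One way to keep the deployment choice reversible is to write client code against a common interface. Many self-hosted serving stacks, vLLM among them, expose an OpenAI-compatible chat completions endpoint. The sketch below builds such a request using only the Python standard library. The base URL, model ID, and API key are assumptions, so check what your provider or server actually expects. Calling `ask()` requires a running server.

```python
import json
import urllib.request

# Sketch: client code for an OpenAI-compatible chat completions endpoint, as exposed
# by some self-hosted stacks (e.g. vLLM). URL, model ID, and key are ASSUMPTIONS.

BASE_URL = "http://localhost:8000/v1"            # hypothetical self-hosted endpoint
MODEL_ID = "meta-llama/Llama-3.1-8B-Instruct"    # assumed Hugging Face model ID

def build_chat_request(question: str, api_key: str = "not-needed-locally") -> urllib.request.Request:
    payload = {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You are a concise internal assistant."},
            {"role": "user", "content": question},
        ],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def ask(question: str) -> str:
    """Send the request and return the reply text (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(question), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

req = build_chat_request("Summarize our VPN policy.")
print(req.full_url, req.get_method())
```

Switching between a self-hosted server and a managed endpoint then mostly means changing `BASE_URL`, `MODEL_ID`, and the key.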

Step 3: Customization and Fine-Tuning

This is where the magic happens. A generic Llama 3.1 is powerful, but a fine-tuned version is a game-changer.

- What is Fine-Tuning? It’s the process of further training the base Llama 3.1 model on your company’s specific data. This could be your customer support tickets, internal documentation, or marketing copy.

- The Result: The model learns your unique language, tone, and context. A fine-tuned customer support model will sound like your brand, not a generic AI. This process is at the core of custom LLM development with Llama 3.1.
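Most of the practical work in fine-tuning is preparing the data. Below is a minimal sketch that converts resolved support tickets into chat-style JSONL records with a `messages` list. Many supervised fine-tuning tools accept this shape, but confirm the exact schema your trainer expects. The ticket fields and brand-voice instruction are hypothetical.

```python
import json

# Sketch: convert resolved support tickets into chat-style JSONL records, a format
# many supervised fine-tuning tools accept (check your trainer's expected schema).
# The ticket fields and brand voice instruction are hypothetical.

SYSTEM = "You are ExampleCo's support assistant. Be warm, brief, and precise."

def ticket_to_record(ticket: dict) -> dict:
    return {"messages": [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": ticket["customer_message"]},
        {"role": "assistant", "content": ticket["agent_reply"]},
    ]}

def to_jsonl(tickets: list[dict]) -> str:
    """One JSON object per line; tickets without an agent reply are skipped."""
    lines = [json.dumps(ticket_to_record(t), ensure_ascii=False)
             for t in tickets if t.get("agent_reply")]
    return "\n".join(lines) + ("\n" if lines else "")

tickets = [
    {"customer_message": "My invoice shows the wrong address.",
     "agent_reply": "Sorry about that! I've updated it; a corrected invoice is on its way."},
    {"customer_message": "Do you ship to Canada?", "agent_reply": ""},
]
jsonl = to_jsonl(tickets)
print(jsonl)
```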

Step 4: Integration and MLOps

An AI model is only useful if it’s integrated into the workflows where your employees actually work.

- API Integration: Expose the model through an API, either your cloud provider’s endpoint or your own serving stack, and connect it to your existing CRM, ERP, or internal chat applications. This brings the power of AI directly to your team’s fingertips.

- MLOps Pipeline: Implementing a robust Llama 3.1 MLOps (Machine Learning Operations) pipeline is essential for long-term success. This includes processes for monitoring the model’s performance, retraining it with new data, and managing different versions to ensure reliability and continuous improvement.
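Monitoring can start small. The sketch below shows one MLOps check: it tracks a quality score per model version and flags a version whose recent average falls below a baseline. The scores could come from human ratings or automated evals. The version names, baseline, tolerance, and scores are hypothetical. A real pipeline would also log inputs, latency, and cost.

```python
from dataclasses import dataclass, field
from statistics import mean

# Sketch of one MLOps check: track a quality score per model version and flag a
# version whose recent average drops below a baseline. Names, thresholds, and
# scores are hypothetical.

@dataclass
class VersionMonitor:
    baseline: float                # expected average score, e.g. from offline evals
    tolerance: float = 0.05        # allowed drop before flagging
    window: int = 50               # number of most recent scores to average
    scores: dict[str, list[float]] = field(default_factory=dict)

    def record(self, version: str, score: float) -> None:
        self.scores.setdefault(version, []).append(score)

    def needs_attention(self, version: str) -> bool:
        recent = self.scores.get(version, [])[-self.window:]
        return bool(recent) and mean(recent) < self.baseline - self.tolerance

monitor = VersionMonitor(baseline=0.85)
for s in (0.90, 0.88, 0.86):
    monitor.record("support-ft-v1", s)
for s in (0.78, 0.74, 0.80):
    monitor.record("support-ft-v2", s)
print(monitor.needs_attention("support-ft-v1"))  # False
print(monitor.needs_attention("support-ft-v2"))  # True
```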

The Competitive Landscape: Llama 3.1 vs. GPT-4o for Business

The inevitable question for any business leader is: “How does this compare to what I’m already using, like GPT-4o?” The Llama 3.1 vs GPT-4o for business debate isn’t about which is “better” overall, but which is right for a specific job.

| Feature | Meta Llama 3.1 | OpenAI GPT-4o |
|---|---|---|
| Model Access | Open weights. Full access to model weights under Meta’s community license. Can be self-hosted. | Proprietary. Accessed via a paid API. The underlying model is a black box. |
| Data Privacy | Maximum Control. With self-hosting, no data ever needs to leave your servers. | Good, but requires trust. Data is sent to OpenAI’s servers for processing. |
| Customization | Deep. Can be extensively fine-tuned on proprietary data for specific tasks. | Limited. Some fine-tuning is possible, but with less control over the process. |
| Cost Structure | Compute Costs. When self-hosted, you pay for the hardware (rented or owned) to run the model, with no per-token fees. Managed cloud offerings often bill per token instead. | Usage-Based. You pay per input and output token. Can become expensive at scale. |
| Ease of Use | Higher Initial Hurdle. Requires technical expertise to set up and manage. | Very Easy. Simple API makes it fast to get started for general tasks. |
| Best For… | Building proprietary AI solutions, applications with high data sensitivity, and cost control at scale. | Rapid prototyping, general-purpose applications, and leveraging cutting-edge multimodality. |

The key takeaway is control. With Llama 3.1, you are building an asset. With proprietary models, you are renting a service.

Navigating the Challenges: Security, Ethics, and Governance

Harnessing the power of Llama 3.1 comes with responsibilities. A proactive approach to security and ethics is non-negotiable.

Fortifying Your Fortress: Llama 3.1 Security for Business

Secure LLM deployment is paramount, especially when handling sensitive corporate or customer data.

- Infrastructure Security: Whether on-premise or in the cloud, ensure your underlying infrastructure is hardened, with strict access controls and network segmentation.

- Input and Output Sanitization: Implement filters to prevent prompt injection attacks (where malicious users try to manipulate the model’s behavior) and to scan outputs for sensitive data leaks before they are displayed.

- Data Anonymization: When fine-tuning, use anonymized data whenever possible to minimize the risk of the model memorizing and regurgitating personally identifiable information (PII). This is a cornerstone of security best practice for any business deploying Llama 3.1.
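Output scanning and data anonymization can share the same basic building block: a redaction pass. This naive sketch replaces email addresses and simple phone numbers with placeholders. The regex patterns are illustrative assumptions and will miss many PII formats, such as names, addresses, and international number layouts. Production systems usually add a dedicated PII-detection tool.

```python
import re

# Naive PII redaction for prompts, outputs, or fine-tuning data. These patterns
# catch only obvious emails and simple phone numbers; they are illustrative, not
# a complete PII detector.

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"(?:\+\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")

def redact(text: str) -> str:
    """Replace matched emails and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

print(redact("Contact jane.doe@example.com or 555-123-4567 about the refund."))
# Contact [EMAIL] or [PHONE] about the refund.
```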

Building Trust: Ethical AI and Responsible Implementation

An effective ethical AI strategy for Llama 3.1 in business is built on transparency and fairness.

- Bias Detection and Mitigation: All LLMs can inherit biases from their training data. Regularly audit your model’s outputs for demographic, cultural, or other biases, especially in applications like hiring or customer service.

- Transparency and Explainability: Be transparent with users and employees about when they are interacting with an AI. While true “explainability” is a challenge, document the model’s intended use, its limitations, and the data used to fine-tune it.

- Human-in-the-Loop: For high-stakes decisions (e.g., medical diagnoses, large financial transactions), use Llama 3.1 as a co-pilot to assist a human expert, not as a fully autonomous decision-maker.
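A bias audit can begin with a simple comparison of outcome rates across groups. The sketch below computes how often the model produces a favorable outcome for each group. It then flags any group whose rate falls below 0.8 times the highest group’s rate, echoing the “four-fifths” rule of thumb from US hiring analysis. This is a screening heuristic, not a legal or statistical test, and the records are hypothetical.

```python
from collections import defaultdict

# Sketch of a simple output audit: compare how often the model produces a favorable
# outcome for each group. The 0.8 threshold echoes the "four-fifths" rule of thumb;
# it is a screening heuristic, not a legal or statistical test. Records are hypothetical.

def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    totals, positives = defaultdict(int), defaultdict(int)
    for group, favorable in records:
        totals[group] += 1
        positives[group] += favorable   # bool counts as 0 or 1
    return {g: positives[g] / totals[g] for g in totals}

def flag_disparity(rates: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose rate is below `threshold` times the highest group's rate."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best > 0 and r / best < threshold)

records = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
rates = selection_rates(records)
print(rates)                  # {'A': 0.6, 'B': 0.3}
print(flag_disparity(rates))  # ['B']
```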

The Future of Enterprise AI is Open

The launch of Llama 3.1 isn’t just an iteration; it’s an invitation. It invites businesses of all sizes to move from being passive consumers of AI to active builders of intelligent systems. The future of enterprise AI that Llama 3.1 points toward is one where customized, secure, and cost-effective models are the norm, not the exception. By providing the foundational blocks, Meta is empowering companies to create unique AI capabilities that are deeply integrated with their data and workflows, creating a durable competitive advantage.

Conclusion: From Potential to Performance

Meta’s Llama 3.1 is more than just a powerful new language model; it’s a strategic asset. For businesses ready to move beyond off-the-shelf AI, it offers a clear path toward building proprietary, high-performance solutions. By enabling deep customization, ensuring data privacy through self-hosting, and providing a cost-effective alternative to proprietary APIs, Llama 3.1 democratizes access to state-of-the-art AI.

The journey to integrate Llama 3.1 solutions requires careful planning, from defining a clear AI strategy to establishing robust security and MLOps pipelines. But for organizations that make the investment, the payoff is immense: streamlined operations, hyper-personalized customer experiences, and a powerful engine for sustained business innovation. The era of open, enterprise-grade AI is here. Are you ready to build?

FAQs

Q1. Is Llama 3.1 free for commercial use?

Yes. Llama 3.1 is released under the Llama 3.1 Community License, a custom license that permits both research and commercial use. Businesses can use, modify, and distribute the models and fine-tuned versions, subject to the license terms and Meta’s acceptable use policy. However, companies whose products or services had more than 700 million monthly active users as of the release date must request a separate license from Meta.

Q2. What are the main differences between Llama 3.1 and Llama 3?

Llama 3.1 introduces several key improvements over Llama 3. The most significant is the new 405B parameter model, which is much larger and more capable than the 8B and 70B models that shipped with Llama 3. It also extends the context window from 8K to 128K tokens, improves reasoning and coding abilities, and adds official multilingual and tool-use support.

Q3. How does Llama 3.1 ensure data privacy for businesses?

Llama 3.1’s primary data privacy advantage comes from its open-source nature. Because businesses can perform a private Llama 3.1 deployment on their own servers (on-premise or in a private cloud), sensitive data never has to be sent to a third-party vendor for processing, providing maximum control and confidentiality.

Q4. What technical skills are needed to implement Llama 3.1?

Implementing Llama 3.1 from scratch requires expertise in machine learning, Python programming, and cloud infrastructure (like AWS, GCP, or Azure). Skills in MLOps are also crucial for managing the model in production. However, many cloud providers and third-party platforms are making it easier to deploy and fine-tune Llama 3.1 with less specialized knowledge.

Q5. Can Llama 3.1 run on-premise?

Absolutely. This is one of its core strengths for enterprises. The ability to download and run the model on your own hardware is a key differentiator from closed-source models and is essential for organizations with strict data sovereignty or security requirements.

Q6. How does the cost of using Llama 3.1 compare to proprietary models like GPT-4o?

The cost advantage of Llama 3.1 can be significant, especially at scale. When you self-host, you pay for the computing infrastructure that runs the model instead of paying a per-token fee to an API provider. This requires an upfront investment in hardware or a standing commitment to cloud instances. However, it can be substantially cheaper for high-volume applications, because your costs scale with infrastructure rather than with each token processed.
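Whether self-hosting pays off comes down to a break-even volume. This sketch finds the monthly token count at which a fixed infrastructure bill equals per-token API spend. The prices are hypothetical placeholders, not quotes from any provider. The calculation also assumes your hardware can actually serve that volume, and it leaves out engineering and operations time.

```python
# Break-even sketch: monthly token volume at which a fixed self-hosting bill equals
# per-token API spend. All prices are hypothetical placeholders, not quotes from
# any provider; real comparisons should also include engineering and ops time.

def api_monthly_cost(tokens: float, price_per_million: float) -> float:
    """Per-token API spend for a month's traffic."""
    return tokens / 1_000_000 * price_per_million

def break_even_tokens(self_host_monthly: float, price_per_million: float) -> float:
    """Tokens/month above which self-hosting is cheaper (ignoring capacity limits)."""
    return self_host_monthly / price_per_million * 1_000_000

# e.g. $8,000/month for GPU instances vs. a blended $10 per million tokens
tokens = break_even_tokens(8_000, 10.0)
print(f"{tokens:,.0f} tokens/month")           # 800,000,000 tokens/month
print(api_monthly_cost(2_000_000_000, 10.0))   # 20000.0
```

Under these placeholder prices, traffic of 2 billion tokens a month would cost $20,000 through the API versus a flat $8,000 self-hosted.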

Q7. What is fine-tuning and why is it important for Llama 3.1?

Fine-tuning is the process of taking the pre-trained Llama 3.1 model and continuing its training on a smaller, specific dataset. This is critically important for business because it adapts the model to your company’s unique vocabulary, style, and data, dramatically improving its performance on tasks like branded content creation or specialized customer support.