GPT-4o: What Is It & Why It Matters

Introduction: The ChatGPT Update That Changed Everything

In the rapidly evolving world of artificial intelligence, advancements often feel incremental: a little faster, a bit smarter. Then there are moments of true discontinuity. The unveiling of GPT-4o, OpenAI’s new model, was one of those moments.

GPT-4o has been dubbed the “omni-model”: the “o” stands for omni, signifying its ability to natively process and generate content across text, audio, and vision. This isn’t just another ChatGPT update; it’s a foundational shift in how humans interact with AI, pushing us toward the age of true conversational AI.

For anyone following AI technology news or concerned with the future of AI, understanding what GPT-4o is and why it matters is critical. This model doesn’t just improve on its predecessor, GPT-4; it fundamentally changes the user experience, particularly through its higher speed, lower latency, and seamless multimodal AI capabilities.

This guide dissects the key GPT-4o features, provides a detailed GPT-4o vs GPT-4 comparison, explains how to use GPT-4o, and answers the most pressing question: is GPT-4o free? By the end, you’ll grasp why this next generation AI is set to redefine productivity and creativity across every industry.

The Dawn of Omni-Modal AI: Defining GPT-4o

The core revolution of OpenAI GPT-4o lies in its architecture. Previous versions of ChatGPT handled voice through a pipeline of three separate models: one transcribed the voice input to text, GPT-4 processed that text, and a third converted the response back into audio. Each handoff added latency, and because GPT-4 only ever saw text, the pipeline stripped away non-verbal cues such as tone of voice.

GPT-4 omni changes this entirely.

The “O” in GPT-4o: What Omni Truly Means

When we talk about multimodal AI, we mean an AI that can understand and generate multiple types of data. GPT-4o is the first model from OpenAI to be natively trained end-to-end across text, audio, and vision. This unified approach means the model doesn’t just pass data between specialized systems; it perceives, reasons, and responds to all modalities simultaneously, in real-time.

This unified architecture unlocks stunning GPT-4o capabilities:

- Seamless Interruption: Users can interrupt the AI mid-sentence, just like a natural human conversation.

- Emotional Nuance: The model can listen for tone, detect emotional states (like excitement or frustration), and adjust its own vocal delivery accordingly.

- Cross-Modal Reasoning: It can analyze a live video feed, discuss a chart, and generate supporting text, all within the same interaction loop.

Speed, Latency, and Performance Metrics

Perhaps the most immediately impactful change is the GPT-4o performance boost. Speed is crucial for creating a truly natural real-time AI conversation.

According to OpenAI announcements, GPT-4o boasts:

- Speed: It processes text and vision up to 2x faster than GPT-4 Turbo.

- Audio Latency: Response time for audio inputs dropped dramatically. While the earlier Voice Mode with GPT-4 averaged 5.4 seconds, GPT-4o can respond to voice prompts in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response time in conversation.

- Cost Efficiency: For developers using the GPT-4o API, the model is half the price of GPT-4 Turbo and comes with rate limits five times higher.

This combination of speed, cost reduction, and superior intelligence makes GPT-4o not just a better model, but a truly scalable one for vast industrial applications.
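To put those numbers in perspective, here is a minimal Python sketch that works through the arithmetic. The latency and price figures are the ones quoted above; the monthly bill is a made-up placeholder, and the function names are just for illustration.

```python
# Figures quoted in this article (attributed to OpenAI's announcement).
GPT4_VOICE_LATENCY_S = 5.4     # average Voice Mode latency with GPT-4
GPT4O_VOICE_LATENCY_S = 0.320  # average audio response time for GPT-4o
GPT4O_PRICE_RATIO = 0.5        # GPT-4o API price relative to GPT-4 Turbo


def latency_speedup(old_s: float, new_s: float) -> float:
    """Return how many times shorter the new latency is than the old one."""
    return old_s / new_s


def gpt4o_cost(gpt4_turbo_cost: float) -> float:
    """Estimate GPT-4o spend for a workload that cost `gpt4_turbo_cost` on GPT-4 Turbo."""
    return gpt4_turbo_cost * GPT4O_PRICE_RATIO


if __name__ == "__main__":
    speedup = latency_speedup(GPT4_VOICE_LATENCY_S, GPT4O_VOICE_LATENCY_S)
    print(f"Voice latency is roughly {speedup:.1f}x lower")
    # Hypothetical monthly bill of 1000 (any currency) on GPT-4 Turbo.
    print(f"Same workload on GPT-4o: {gpt4o_cost(1000.0):.2f}")
```

The estimate assumes the same token volume on both models, so treat it as a rough comparison rather than a billing forecast.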


A Deep Dive into GPT-4o Features and Capabilities

The array of GPT-4o features goes beyond mere performance metrics. They unlock entirely new forms of human-computer interaction, essentially turning the AI from a helpful tool into an almost indistinguishable digital colleague or intelligent assistant.

1. Real-Time Conversational AI: The Voice Revolution

The demonstration of GPT-4o’s voice capabilities felt like a scene from a sci-fi movie. When using the model as an AI voice assistant, the experience is fundamentally different from older models like Siri or Alexa.

- Emotional Responsiveness: The AI can detect a user’s hurried or stressed tone and respond with empathy or an immediate, concise answer.

- Dynamic Vocal Styles: During the GPT-4o demo, the AI was shown shifting between various speaking styles—singing a response, speaking dramatically, or adopting a monotone, demonstrating unprecedented control over vocal output.

- Coaching and Tutoring: Imagine practicing a speech or preparing for a presentation. GPT-4o can listen to your vocal delivery, analyze your pacing and tone, and provide real-time feedback, making it an invaluable tool for personalized learning.

This is the pinnacle of conversational AI to date, eliminating the frustrating delays that plagued previous systems and enabling true, natural dialogue.

2. Enhanced Vision and Image Analysis

The visual comprehension of ChatGPT-4o is significantly enhanced. The model can process images, charts, and even live video streams with greater speed and accuracy.

- Real-Time Environment Interaction: Users can point their camera at something—a complex math equation on a whiteboard, a difficult cooking technique, or a foreign menu—and the AI can offer instantaneous guidance, solving the problem or translating the text on the fly.

- Complex Data Interpretation: It excels at analyzing visual data like graphs, generating summaries of dense scientific papers based solely on embedded visual data, or comparing complex financial charts.
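For developers, image analysis like this usually means sending a picture alongside a question. The sketch below builds a single user message that pairs text with an inline image. To the best of our knowledge it follows the content-part layout used by OpenAI’s Chat Completions API, but treat the exact field names as an assumption and check the current API reference. The helper name `build_image_message` is our own.

```python
import base64


def build_image_message(image_bytes: bytes, question: str,
                        mime_type: str = "image/png") -> dict:
    """Build one user message that pairs a text question with an inline image.

    The image is embedded as a base64 data URL, so no hosting is needed.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice, read a real chart or photo from disk.
    message = build_image_message(b"abc", "What trend does this chart show?")
    print(message["content"][1]["image_url"]["url"])
```

The resulting dictionary would go into the `messages` list of a chat request with the model set to `gpt-4o`.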

3. Multilingual Mastery

GPT-4o significantly improves language quality and speed in non-English languages. OpenAI reports quality and speed gains across some 50 languages, providing faster translation and more nuanced understanding across global communication barriers. This expansion drastically increases accessibility and utility for users outside the English-speaking world.

4. Availability and Accessibility: Is GPT-4o Free?

One of the most disruptive aspects of OpenAI’s GPT-4o announcement was the democratization of access.

The short answer is yes, GPT-4o is free—to a certain extent.

- Free ChatGPT-4o Users: All free users of ChatGPT gain access to GPT-4o. This is a massive leap, as previous top-tier models like GPT-4 were strictly reserved for paid subscribers (ChatGPT Plus, Team, Enterprise). Free users benefit from the superior speed and intelligence, though with usage limits that replenish daily.

- Paid Users (Plus/Team): Subscribers receive up to 5x the usage capacity of free users, which makes GPT-4o practical as the default model for heavy use.

- Developers (GPT-4o API): Developers benefit from the lower cost and higher rate limits, enabling them to build faster, more complex applications leveraging its multimodal features.

This strategic decision ensures that the latest AI technology is immediately available to the widest possible audience, rapidly accelerating adoption and application development.


GPT-4o vs. GPT-4: The Crucial Comparison

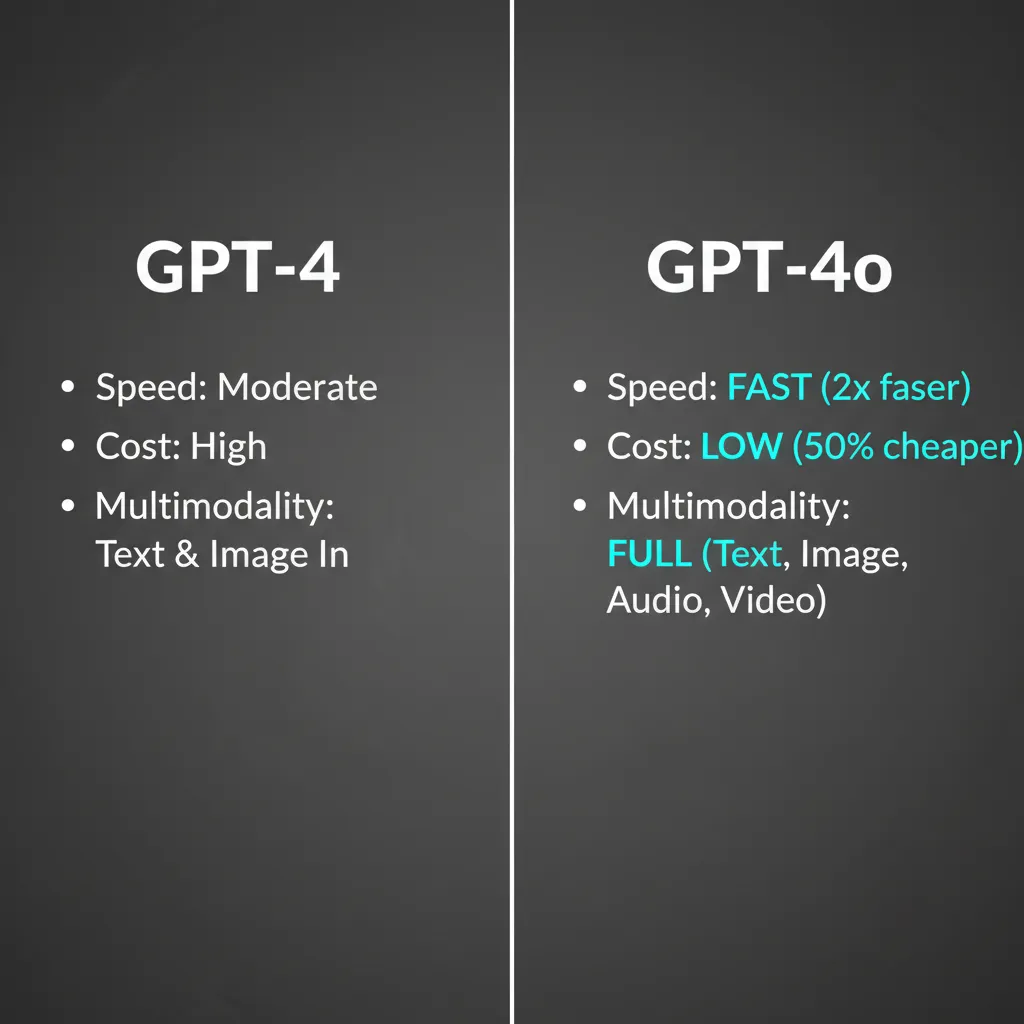

Understanding the significance of GPT-4o requires a clear AI model comparison against its celebrated predecessor, GPT-4 (including GPT-4 Turbo). This comparison moves beyond marginal improvements and highlights fundamental architectural changes.

| Feature | GPT-4 / GPT-4 Turbo | GPT-4o (GPT-4 omni) | Significance |
|---|---|---|---|
| Multimodality Architecture | Stacked models (Separate text, voice transcription, and voice generation models). | Native Omni-Model (Trained end-to-end across text, audio, and vision). | Eliminates processing handoffs, ensuring seamless, real-time interaction. |
| Response Speed (Text) | Good, but often noticeable latency. | Up to 2x faster than GPT-4 Turbo. | Immediate conversational flow and improved productivity. |
| Audio Latency | Averages ~5.4 seconds (due to pipeline delays). | Averages ~320 milliseconds (closer to human reaction time). | Enables true, interruptible, real-time AI conversation and emotion recognition. |
| API Pricing | Standard high-tier pricing. | 50% cheaper than GPT-4 Turbo. | Significantly reduces development costs for the most advanced model. |
| Vision Performance | Competent, but slower processing of visual data. | State-of-the-art vision processing. Better at recognizing complex scenes and emotions. | Superior for live video analysis, complex chart interpretation, and teaching assistance. |
| Accessibility (Is it Free?) | Strictly reserved for paying subscribers. | Available to all free users (with limits). | Massive democratization of next generation AI. |

Architecture Shift: Native Multimodality

The key differentiator is the architectural shift. GPT-4 and its variants used separate specialized neural networks to handle different input types. This is akin to hiring three different contractors (one for audio, one for thinking, one for speaking) for a single job—there is inevitable friction and time loss in the handoffs.

GPT-4o functions as a single, unified network. It treats text, audio, and visual data as intrinsically linked inputs from the moment of ingestion. This is why the AI can listen to the tone of your voice while simultaneously reading the words and processing a related image, ensuring a more integrated and empathetic response.

Benchmarks: Intelligence and Emotional Nuance

While traditional benchmarks show slight, consistent improvements in core intelligence metrics (like MMLU and coding tasks) over GPT-4 Turbo, the more compelling improvements are in the soft metrics.

The most exciting aspect of GPT-4o is its ability to understand and participate in emotional dialogue. In the GPT-4o demo, the AI responded to a stressed user by offering encouragement and advice in a calming voice, something previous models struggled to do believably. This level of emotional intelligence is crucial for complex customer service applications, therapeutic use cases, and for building rapport with users.

How to Access and Use the Next Generation AI

Since its release, accessing the model has become remarkably straightforward, although your access tier still determines your usage limits. Learning how to use GPT-4o is now worthwhile for every ChatGPT user.

Using Free ChatGPT-4o vs. Paid Tiers

OpenAI has deliberately made GPT-4o available to its entire user base, including the free tier.

- Free Users: When you log into ChatGPT, GPT-4o is often the default or easily selectable option. You receive a generous allowance of interactions per day. When your limits are reached, the platform will automatically switch you back to the GPT-3.5 model until the limit resets.

- ChatGPT Plus/Team/Enterprise: Paid users receive priority access to new features and higher limits (often 5x the free tier), so they can keep using the full features of the model long after a free account would have been switched back to GPT-3.5.

- API Users: Developers access the model via the GPT-4o API. If you are integrating AI into your own applications, the API is the route to take, and its reduced cost makes it appealing for startups and large enterprises alike.
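For the API route, a request can be as small as the sketch below, which uses only Python’s standard library. The endpoint and payload shape reflect OpenAI’s Chat Completions API as we understand it; the helper name `build_chat_request` and the `OPENAI_API_KEY` environment variable are assumptions of this example, so check the official API reference before relying on the details.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-4o") -> urllib.request.Request:
    """Build (but do not send) a minimal chat request for the given prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:
        # Sends a real, billable request.
        with urllib.request.urlopen(build_chat_request("Say hello.", key)) as resp:
            body = json.load(resp)
        print(body["choices"][0]["message"]["content"])
    else:
        print("Set OPENAI_API_KEY to send the request.")
```

Keeping request construction separate from sending makes it easy to inspect or test the payload without spending any credits. In a real project, OpenAI’s official client library is likely the more convenient choice.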

Step-by-Step Guide to Enabling GPT-4o

If you are a ChatGPT user, enabling the model is simple:

- Log In: Access the official ChatGPT web interface or the mobile app.

- Model Selector: Locate the model selector dropdown menu, usually found at the top center of the screen or in the left-hand sidebar (depending on the interface).

- Select GPT-4o: Choose “GPT-4o” from the list of available models. It is usually clearly labeled.

- Start Interacting: You can now begin a text conversation.

- Voice and Vision: To use the AI voice assistant features or vision capabilities on the mobile app, tap the headphone icon (voice mode) or the image upload button (vision mode) and follow the prompts. Ensure your microphone and camera permissions are granted.

Mastering how to use GPT-4o means experimenting with its multimodal inputs. Don’t just type—upload charts, take pictures of your code, or engage in a real-time AI conversation using the voice features to experience the full potential of this next generation AI.


Real-World GPT-4o Use Cases and Future Impact

The introduction of GPT-4o is more than a benchmark improvement; it’s an inflection point for productivity and user experience. The GPT-4o use cases span nearly every sector, driven by its speed and native multimodality.

Education and Personalized Learning

GPT-4o excels as an on-demand, adaptive tutor.

- Real-Time Math and Science Help: A student can point their phone camera at a complex physics problem. GPT-4o instantly recognizes the problem, hears the student’s confusion in their voice, and offers step-by-step guidance in a measured, encouraging tone.

- Language Fluency: Its improved audio latency and multilingual mastery make it the ideal language exchange partner. Users can practice conversational skills, receiving instant, nuanced feedback on pronunciation and grammar in a manner that feels genuinely natural.


Professional Workflow and Coding

In the professional realm, the increased speed and GPT-4o capabilities translate directly into efficiency gains.

- Rapid Data Analysis: A business analyst can upload a complex Excel sheet or graph (vision input) and then use voice commands to ask intricate questions about trends and projections. The AI provides text and visual summaries instantly.

- Coding Assistance: Coders can verbally describe a complex function they need to write, and GPT-4o can generate the code significantly faster than previous models. Furthermore, they can share screenshots of error messages, and the AI can diagnose and suggest fixes immediately, acting as a true pair programmer.

Customer Service and Intelligent Assistants

This is arguably where the “omni-model” shines brightest. Intelligent assistants powered by GPT-4o can handle customer interactions with unprecedented sophistication.

- Enhanced Empathy: By analyzing vocal tone, the AI can prioritize callers expressing high levels of frustration or distress, offering superior service.

- Multichannel Support: A single AI can manage a live text chat, analyze an uploaded document (vision), and conduct a rapid voice conversation simultaneously, providing integrated, personalized support.
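The prioritization idea can be sketched with a simple priority queue. This is a hypothetical illustration, not how any real contact center or GPT-4o itself works: the frustration scores are assumed to come from some upstream tone analysis and are invented here, as are the names `Caller` and `triage`.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Caller:
    # Stored as negative frustration so the most frustrated caller pops first.
    priority: float
    name: str = field(compare=False)


def triage(callers: dict[str, float]) -> list[str]:
    """Return caller names ordered from most to least frustrated.

    `callers` maps a name to a hypothetical frustration score in [0, 1].
    """
    heap = [Caller(-score, name) for name, score in callers.items()]
    heapq.heapify(heap)
    return [heapq.heappop(heap).name for _ in range(len(heap))]


if __name__ == "__main__":
    # Made-up scores standing in for the output of a tone-analysis step.
    waiting = {"Ana": 0.2, "Ben": 0.9, "Cy": 0.5}
    print(triage(waiting))
```

A heap is used instead of a one-off sort because a live queue keeps receiving new callers, and a heap lets each one be added without re-sorting everyone already waiting.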

Creative and Artistic Applications

Creatives are using the superior input quality and speed to iterate rapidly. A graphic designer can upload a mockup, verbally describe five color palettes and stylistic adjustments, and receive revised descriptions or code snippets instantly. Early reviews from creators highlight the seamless feedback loop as its most valuable asset.

The Significance of GPT-4o: Why It Matters to Everyone

The question isn’t just what GPT-4o is, but what its existence implies for the trajectory of the latest AI technology and society at large. This model is significant because it simultaneously advances three critical pillars of AI adoption: performance, accessibility, and human-computer interaction.

1. Setting a New Benchmark for Interaction

GPT-4o is the first widely available model that makes AI interaction feel truly human-like. The minimal latency means the friction—that annoying lag—is largely eliminated. When you can interrupt an AI voice assistant and have it understand your intent instantly, the line between digital tool and genuine collaborator blurs. This model sets a new gold standard that all future AI systems must strive to meet.

2. Accelerating the Future of AI Development

By making the most powerful model available (in part) for free, OpenAI has essentially handed the keys to the next generation AI to millions of developers and users who previously couldn’t afford the API cost or the subscription. This democratization will exponentially increase the number of experiments, innovations, and niche applications built on the back of GPT-4o, leading to rapid advancements in the future of AI.

The cost reduction in the GPT-4o API solidifies this trend, allowing businesses to integrate highly capable multimodal intelligence without prohibitive expenses.

3. A Critical Step Towards AGI

While GPT-4o is not Artificial General Intelligence (AGI), it is a major step toward it. Its ability to unify text, audio, and vision within a single system mimics the way the human brain processes different sensory inputs to form a coherent understanding of the world. The unified architecture demonstrates a pathway toward creating increasingly holistic and generally capable AI systems.


Conclusion: The Omni-Model Era Is Here

GPT-4o is not merely a faster, cheaper version of GPT-4; it represents a paradigm shift toward genuine, real-time, conversational AI. By unifying text, audio, and vision processing natively, OpenAI’s new model delivers an experience that is faster, more natural, and considerably more useful across a host of applications, from personalized education and complex data analysis to empathetic customer service.

The fact that free GPT-4o access is being rolled out globally is the ultimate statement on the model’s importance. OpenAI is ensuring that this foundational shift in the latest AI technology is accessible to everyone, cementing its place as the current standard-bearer for next generation AI.

If you haven’t yet experimented with GPT-4o’s voice or vision features, now is the time to see how it can change your workflow and your digital life. The age of the GPT-4 omni model is here, and it matters because it is fast, free to try, and natively multimodal.

FAQs (Frequently Asked Questions)

Q1. What is GPT-4o, and what does the “o” stand for?

GPT-4o is OpenAI’s latest flagship generative AI model. The “o” stands for “omni,” signifying its unique capability to natively and seamlessly process and generate content across text, audio, and visual modalities (multimodal AI) within a single neural network, enabling faster and more integrated responses.

Q2. How does GPT-4o performance compare to GPT-4 Turbo?

GPT-4o significantly outperforms GPT-4 Turbo in speed and latency. It processes text and vision up to 2x faster and reduces audio response latency from several seconds down to an average of 320 milliseconds, making real-time AI conversation possible. It also has superior vision and multilingual capabilities.

Q3. Is GPT-4o free to use, and how can I access it?

Yes, GPT-4o is available for free to all ChatGPT users, although with usage limits that refresh daily. Paid users (Plus, Team, Enterprise) receive significantly higher usage limits. You can access it by logging into ChatGPT and selecting “GPT-4o” from the model selector menu.

Q4. When was GPT-4o released?

GPT-4o was announced by OpenAI during its Spring Update event in May 2024. Following the announcement, text and image capabilities began rolling out to API developers and to free and paid ChatGPT users, with the new voice experience following in later phases.

Q5. Can GPT-4o genuinely understand emotions?

Yes, the unified architecture of GPT-4o allows the AI voice assistant features to analyze a user’s vocal tone and pace, enabling the model to detect and respond appropriately to emotions like excitement, confusion, or distress, offering a much more nuanced form of conversational AI.

Q6. What are the primary GPT-4o use cases in professional settings?

The primary GPT-4o use cases include real-time language translation, rapid coding assistance (especially through verbal input and error screenshot analysis), accelerating complex data analysis from charts and graphs (vision input), and powering highly efficient and empathetic intelligent assistants for customer service.

Q7. How does the GPT-4o API affect developers?

The GPT-4o API is significantly more cost-effective, running at half the price of the GPT-4 Turbo API while offering a higher rate limit. This makes the next generation AI model more accessible and scalable for developers building applications.