GPT-4o: The Future of AI is Here (And It’s Free)

Introduction: The Dawn of the “Omni Model AI”

The landscape of advanced artificial intelligence shifted seismically in 2024, the moment OpenAI unveiled GPT-4o. This isn’t just an incremental update; it is a fundamental redesign of how AI perceives and interacts with the world.

For years, we’ve used separate models for different tasks: one for writing, another for image analysis, and yet another for speech. GPT-4o breaks with this siloed approach. The ‘o’ in GPT-4o stands for “omni,” signaling its ability to accept any combination of text, audio, image, and video input and to generate text, audio, and image output natively, all within one unified model.

The core promise of OpenAI GPT-4o is twofold: performance with near-human response times and, crucially, unprecedented accessibility. If you’ve been asking, “Is GPT-4o free?” the answer is yes, within usage limits, which puts the latest AI technology in the hands of the masses. This marks a turning point where genuine, high-caliber conversational AI becomes a standard utility, not a premium luxury.

In this deep dive, we will explore what GPT-4o is, analyze its most important features, compare it directly with its predecessor (GPT-4 vs GPT-4o), and show you how to use GPT-4o right now. This is more than a routine ChatGPT update; it’s a major step toward truly natural human-computer interaction.

What Makes GPT-4o the Definitive “Omni Model AI”?

Before GPT-4o, real-time voice conversations with AI were clunky. When you spoke to ChatGPT in Voice Mode, your voice went through a cascade of separate models: a speech-to-text model transcribed your words, a text-based LLM (like GPT-4) processed the request, and a text-to-speech model read the answer aloud. Each hand-off added delay (OpenAI reported average Voice Mode latencies of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4), and the transcription step threw away tone of voice, background sounds, and the presence of multiple speakers, breaking the illusion of a natural conversation.

GPT-4o eliminates this chain. OpenAI trained it end-to-end across text, vision, and audio, so a single neural network understands and generates all of them instead of handing data between specialized models.

The Key Technical Breakthroughs

- Unified Processing: The ‘omni’ architecture means any input (a picture, a spoken word, or a typed sentence) is processed by a single network. Because nothing is lost in hand-offs between models, GPT-4o can switch fluidly between modalities within a single conversation.

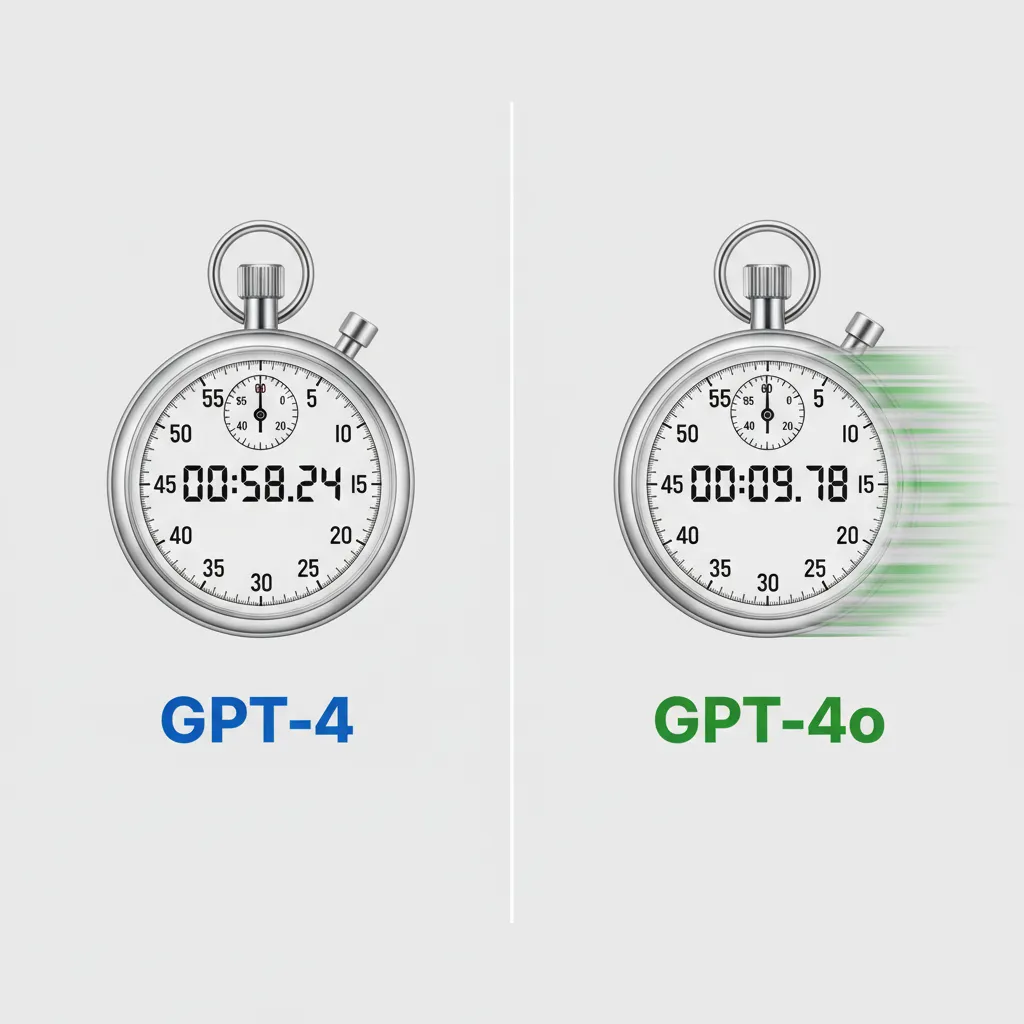

- Dramatic Latency Reduction: The most impressive feat is its speed. GPT-4o can respond to audio inputs in as little as 232 milliseconds (ms), with an average of 320 ms, which OpenAI notes is similar to human response time in a conversation. Compared with the multi-second delays of the earlier pipeline, this turns AI interaction from a turn-taking exercise into a fluid, real-time exchange.

- Enhanced Emotion and Nuance: Because it hears audio directly rather than a transcript, GPT-4o can interpret emotion in a speaker’s voice and express emotion in its own. It can pick up on cues such as frustration, excitement, or confusion and adjust its tone and response accordingly, making real-time conversation feel far more intuitive.
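To put the latency figures in perspective, here is a small worked comparison. It uses the 232 ms and 320 ms GPT-4o figures quoted above, plus OpenAI’s reported average latencies for the earlier three-model Voice Mode (2.8 s with GPT-3.5, 5.4 s with GPT-4). The dictionary keys and the `speedup` helper are illustrative names, not part of any OpenAI tool.

```python
# Voice-response latencies in seconds.
# GPT-4o figures: OpenAI's launch announcement (fastest and average audio response).
# Cascade figures: OpenAI's reported averages for the earlier Voice Mode, which chained
# speech-to-text -> LLM -> text-to-speech models.
LATENCIES_S = {
    "Voice Mode cascade (GPT-3.5)": 2.8,
    "Voice Mode cascade (GPT-4)": 5.4,
    "GPT-4o (average)": 0.320,
    "GPT-4o (fastest)": 0.232,
}


def speedup(old_s: float, new_s: float) -> float:
    """Return how many times shorter new_s is than old_s (illustrative helper)."""
    if new_s <= 0:
        raise ValueError("latency must be positive")
    return old_s / new_s


if __name__ == "__main__":
    baseline = LATENCIES_S["Voice Mode cascade (GPT-4)"]
    for name, seconds in LATENCIES_S.items():
        ms = seconds * 1000
        print(f"{name:<30}{ms:>7.0f} ms {speedup(baseline, seconds):>6.1f}x vs GPT-4 cascade")
```

At the average figure, GPT-4o answers roughly 17 times faster than the GPT-4 cascade, which is the difference between waiting for a reply and simply talking.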

GPT-4o vs GPT-4: Speed, Cost, and Capability

The biggest question for existing users is how the new model stacks up against the established champion, GPT-4. The differences are significant across performance, accessibility, and cost, both for ChatGPT users and for developers using the GPT-4o API.

Performance Metrics: A Clear Step Up

GPT-4 was a remarkable tool, but GPT-4o matches GPT-4 Turbo on English text and coding benchmarks and clearly surpasses it in non-English languages, vision, and audio.

| Feature | GPT-4 Turbo | GPT-4o (New Omni Model) | Significance |
|---|---|---|---|
| Voice Response Speed | Multiple seconds (5.4 s average in the GPT-4 Voice Mode pipeline) | As little as 232 ms; 320 ms on average | Unlocks true real-time AI conversation. |
| Multimodality | Chained models (audio to text, text to voice) | Natively unified processing (Omni Model) | Higher context and nuanced understanding. |
| Vision Capabilities | Good (but slower) | State-of-the-art; faster analysis | Enables complex visual analysis of data and real-world scenes. |
| Language Support | Strong (but slower processing for low-resource languages) | Significantly faster and higher quality for 50+ languages | Improved global accessibility. |
| Cost (API Access) | $10 / $30 per 1M input / output tokens | $5 / $15 per 1M input / output tokens at launch (50% cheaper, 2x faster) | Massive reduction in operational costs for developers. |
| Free Access | Paid ChatGPT plans only | Available to all ChatGPT users, with usage caps on the free tier | Democratizes the future of AI assistants. |

The speed increase is the most palpable user experience improvement. Imagine using an AI assistant that no longer requires you to wait for it to “think.” This reduction in friction is central to the shift toward natural human-computer interaction.

The Game-Changing GPT-4o Features in Detail

The technical prowess of GPT-4o translates into three major practical feature sets that change how we use AI.

1. Real-Time Conversational AI and Voice Mode

The new GPT-4o voice mode goes beyond previous AI assistants. The goal is no longer just to read text aloud but to hold a conversation with human-like pacing and responsiveness.

Seamless Interpretation and Translation

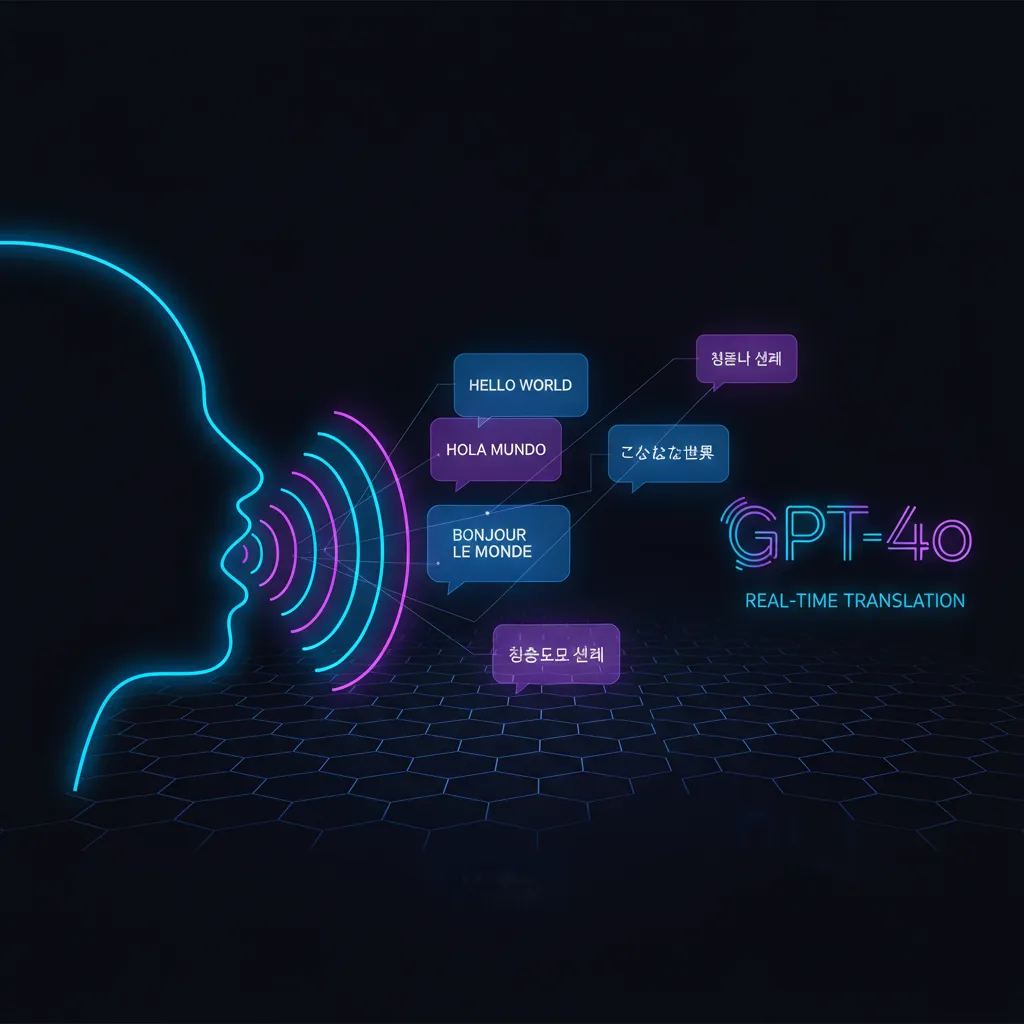

The most exciting aspect shown during the GPT-4o demo was its ability to perform real-time, two-way language translation with almost no delay.

- Scenario: Imagine speaking Spanish into your phone, and the AI instantly translates and speaks your words in Japanese to your counterpart, then instantly translates their Japanese reply back to you in Spanish.

- Practical Use: This feature could transform global business communication, travel, and international collaboration, putting something close to a universal translator inside an everyday AI assistant.

Emotional Resonance and Interruptibility

GPT-4o can be interrupted mid-response, just like a human, and immediately adjust its context. It also offers five distinct voices with varying emotional tones, allowing users to choose an assistant that sounds warm, stoic, or professional.

2. Advanced Vision Capabilities and Data Analysis

GPT-4o is markedly better at vision than OpenAI’s earlier models, processing images faster and with greater understanding. This dramatically enhances its utility as a visual assistant.

Real-World Context and Tutoring

In OpenAI’s demos, GPT-4o used the camera on a phone or computer to interpret visual scenes in real time.

- Live Tutoring: You can point your phone at a complex algebraic equation, and GPT-4o won’t just solve it; it will guide you step-by-step through the solution while recognizing where you’ve drawn a diagram or pointed to a specific part of the problem.

- Data Insight: Point the camera at a printed sales chart or complex diagram. The model reads the image, extracts data points, explains trends, and summarizes the findings faster than you could type the data in by hand.
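Developers can reproduce the chart-reading workflow described above through the API. The sketch below assumes OpenAI’s Chat Completions request format, in which an image is passed as a base64 `data:` URL inside an `image_url` content part. `build_vision_request` is a hypothetical helper name, and it only assembles the request body, so nothing is sent over the network.

```python
import base64
from typing import Any


def build_vision_request(image_bytes: bytes, question: str,
                         mime_type: str = "image/png",
                         model: str = "gpt-4o") -> dict[str, Any]:
    """Build a Chat Completions request body asking one question about one image.

    Hypothetical helper: it returns a plain dict and does not call any API.
    """
    # Inline the image as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # One user turn can mix a text part and an image part.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    placeholder_png = b"\x89PNG\r\n\x1a\n"  # stands in for a real photo of a chart
    request = build_vision_request(placeholder_png, "Extract the data points from this sales chart.")
    print(request["messages"][0]["content"][1]["image_url"]["url"][:40])
```

With the official `openai` Python package installed and an API key configured, the dict could be sent as `OpenAI().chat.completions.create(**request)`; check OpenAI’s current documentation, since request formats and model names change over time.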

Enhanced Accessibility

For people who need visual assistance, GPT-4o’s ability to rapidly describe and interpret the world around them (reading labels, identifying objects, and helping them navigate environments) is a major step forward for assistive technology.

3. Text Generation and Intelligence Benchmarks

While the focus has been on multimodality, GPT-4o also shines in traditional text tasks. On standard industry benchmarks (MMLU, HumanEval, etc.), GPT-4o matches or slightly surpasses GPT-4 Turbo, delivering state-of-the-art quality while being significantly faster and cheaper to run.

Accessibility for All: Is GPT-4o Truly Free?

The democratization of high-level AI is arguably the most impactful outcome of the OpenAI Spring Update 2024. The question “is GPT-4o free?” has a nuanced but overwhelmingly positive answer.

Free Access via ChatGPT

OpenAI has made GPT-4o features accessible to all ChatGPT users, including those on the free tier. This means the vast majority of users can now experience the speed, vision, and multimodal capabilities that were previously restricted to expensive paid tiers.

What Free Users Get:

- GPT-4o Access: Free users can now use GPT-4o’s intelligence and speed for standard text and code requests.

- Vision Capabilities: Free users can upload images and documents for analysis, leveraging the model’s AI vision capabilities.

- Data Analysis: Access to advanced data analysis features, including charting and detailed summaries of uploaded files.

It’s important to note the limitations: free users have a cap on how many GPT-4o messages they can send per time period, and OpenAI says the cap depends on usage and demand. Once the cap is reached, their service temporarily reverts to GPT-3.5. For most casual daily users, the cap should still leave substantial access to the premium model.
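The cap-and-fallback behavior can be pictured as a sliding-window counter. This toy sketch is not OpenAI’s implementation: the class name, the cap, and the window length are all hypothetical (OpenAI does not publish the free-tier numbers), and the model names simply mirror the GPT-4o to GPT-3.5 fallback described above.

```python
from collections import deque


class CappedModelRouter:
    """Toy sliding-window cap: use the premium model until the cap is hit.

    Hypothetical illustration only; OpenAI does not publish its cap or window.
    """

    def __init__(self, cap: int, window_s: float,
                 premium: str = "gpt-4o", fallback: str = "gpt-3.5-turbo") -> None:
        self.cap = cap              # hypothetical: premium messages allowed per window
        self.window_s = window_s    # hypothetical: window length in seconds
        self.premium = premium
        self.fallback = fallback
        self._sent = deque()        # timestamps of premium messages still inside the window

    def pick_model(self, now: float) -> str:
        """Return the model to use for a message sent at time `now` (in seconds)."""
        # Forget premium messages that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_s:
            self._sent.popleft()
        if len(self._sent) < self.cap:
            self._sent.append(now)
            return self.premium
        # Cap reached: fall back until older messages expire.
        return self.fallback


if __name__ == "__main__":
    router = CappedModelRouter(cap=3, window_s=3 * 60 * 60)  # made-up numbers
    for t in (0, 60, 120, 180, 3 * 60 * 60 + 1):
        print(t, router.pick_model(t))
```

Because old timestamps age out of the window, access to the premium model comes back on its own, which is what “reverts temporarily” means in practice.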

Paid Tiers and API Pricing

For power users, businesses, and developers, the paid tiers (Plus, Team, Enterprise) still offer significant advantages:

- Higher Message Caps: Subscribers get substantially higher usage limits for GPT-4o, ensuring continuous access during high-volume workdays.

- Priority Access: During peak load times, paid users maintain priority access.

- Upcoming Features: Paid users will likely be the first to receive updates like the enhanced Voice and Video modes (including the ultra-low latency voice mode) as they roll out.

For developers, GPT-4o in the API costs half as much as GPT-4 Turbo and runs twice as fast. That cost reduction makes it practical to build advanced AI into far more applications and services, making it a critical factor for the future of AI assistants.
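The “half the cost” claim is easy to check with a back-of-the-envelope estimate. The sketch below assumes OpenAI’s launch list prices per million tokens (GPT-4 Turbo: $10 input / $30 output; GPT-4o: $5 input / $15 output). Prices change, so treat these rates as placeholders and confirm them on OpenAI’s pricing page; the workload in the example is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens."""
    input_per_m: float
    output_per_m: float


# Launch list prices (May 2024); verify against OpenAI's current pricing before relying on them.
GPT4_TURBO = Pricing(input_per_m=10.0, output_per_m=30.0)
GPT4O = Pricing(input_per_m=5.0, output_per_m=15.0)


def estimate_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost for a workload with the given token counts."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 50M input tokens, 10M output tokens.
    tokens_in, tokens_out = 50_000_000, 10_000_000
    turbo = estimate_cost(GPT4_TURBO, tokens_in, tokens_out)
    omni = estimate_cost(GPT4O, tokens_in, tokens_out)
    print(f"GPT-4 Turbo: ${turbo:,.2f}  GPT-4o: ${omni:,.2f}  ratio: {omni / turbo:.2f}")
```

Because both the input and the output rate are halved, the ratio comes out to 0.5 for any mix of input and output tokens.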

How to Use GPT-4o Today: The Desktop App and Beyond

Accessing the power of GPT-4o is simpler than ever before, largely thanks to the parallel launch of the new ChatGPT desktop app.

Step 1: Download the ChatGPT Desktop App

OpenAI released a dedicated desktop application for macOS (with Windows coming later) that integrates directly into your operating system.

- Quick Shortcut: Users can use a simple keyboard shortcut (Option + Space) to instantly pull up a ChatGPT overlay, allowing for rapid queries without switching browser tabs.

- Screen Capture: The desktop app lets users take a screenshot and feed it straight to GPT-4o for analysis. This makes its vision capabilities easy to use on the fly, for example when troubleshooting code errors or getting immediate feedback on a design mockup.

Step 2: Utilizing the Multimodal Interface

To fully leverage the “omni” experience, focus on the new input types:

- Voice Mode: Open the mobile or desktop app and tap the headphone icon to start Voice Mode, then begin a natural conversation. Once the new low-latency voice mode reaches your account, test its interruptibility and its capacity for real-time translation; this is the best way to experience the conversational improvements firsthand.

- Vision Mode: Upload an image or use the desktop app’s screen capture feature. Ask it to perform complex analysis, such as:

  - “Analyze this market growth chart and summarize the three biggest risks.”

  - “What is the Latin name for this plant in the photograph, and where does it typically grow?”

- Creative Tasks: Use GPT-4o for traditional text tasks like drafting marketing copy, summarizing lengthy reports, or generating sophisticated code, noting the significant improvement in speed compared to previous models.

Comprehensive GPT-4o Use Cases: Transforming Work and Life

The fusion of speed, vision, and real-time audio means GPT-4o isn’t just a chatbot; it’s a universal assistant ready for professional and personal tasks alike. The use cases below show what the omni model makes possible in practice.

1. Education and Personalized Learning

GPT-4o acts as a patient, always-available tutor.

- Interactive Learning: Students can ask complex questions via voice and receive immediate, personalized feedback.

- Visual Problem Solving: A physics student can draw a free-body diagram, snap a picture, and ask GPT-4o to check their vectors and solve the associated equations, getting near-instant feedback on both the diagram and the math.

- Language Acquisition: Using the real-time translation feature, learners can practice conversational skills with a native-sounding partner in a simulated environment, receiving pronunciation and grammar corrections instantly.

2. Professional and Creative Workflows

The speed and multimodal ability make GPT-4o indispensable for productivity.

- Coding and Debugging: Developers can quickly upload screenshots of error messages or sections of their code and receive immediate explanations and suggested fixes, minimizing downtime.

- Data Visualization and Analysis: Consultants can upload raw data, ask the model to generate specific charts (pie, bar, trendlines), and then ask follow-up questions about the visualized data—all in a single, rapid session.

- Content Generation: Marketing teams can use the model to generate ad copy, adjust tone based on audience input, and brainstorm visual concepts for accompanying images, accelerating the entire content pipeline.

3. Daily Life and Home Assistance

GPT-4o brings sophisticated AI out of the office and into the home.

- Real-Time Troubleshooting: Point your phone at a flickering light switch or a complex instruction manual. The AI reads the visual information and provides immediate, step-by-step vocal instructions on how to proceed.

- Recipe Conversion and Kitchen Help: Need to scale a recipe from 4 servings to 11? Point your camera at the ingredients list, and GPT-4o can read out the new measurements while you cook, so you never have to touch your phone with messy hands.

- Smart Home Integration (Future): GPT-4o is not built into smart home systems yet, but its potential as a hub for sustainable smart homes and personalized automated tasks is significant, since it could draw on visual cues and ambient sound for context.
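The recipe-scaling request in the list above boils down to proportional arithmetic: every quantity is multiplied by 11/4 = 2.75. Here is a minimal sketch of that calculation using exact fractions, so measurements like 1/3 cup are not rounded along the way; the function name and the sample ingredients are made up for illustration.

```python
from fractions import Fraction


def scale_recipe(ingredients: dict[str, Fraction], from_servings: int,
                 to_servings: int) -> dict[str, Fraction]:
    """Multiply every ingredient quantity by to_servings / from_servings."""
    if from_servings <= 0 or to_servings <= 0:
        raise ValueError("serving counts must be positive")
    factor = Fraction(to_servings, from_servings)  # 11/4 for the 4 -> 11 example
    return {name: qty * factor for name, qty in ingredients.items()}


if __name__ == "__main__":
    # Hypothetical 4-serving ingredient list (quantities in the units shown).
    base = {
        "flour (cups)": Fraction(2),
        "milk (cups)": Fraction(1, 3),
        "eggs": Fraction(3),
    }
    for name, qty in scale_recipe(base, 4, 11).items():
        print(f"{name}: {qty} (about {float(qty):.2f})")
```

Note that 3 eggs become 33/4 = 8.25 eggs, which is exactly the kind of result where a person (or GPT-4o) still has to round sensibly.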

The Implication: Intelligence on Par with Humans

The launch of GPT-4o signals a critical shift in how we define and interact with artificial intelligence. The latency barrier that made voice assistants feel stilted has largely fallen, and the fragmented chain of specialized models has given way to a single, unified, highly responsive model.

This new standard for natural human-computer interaction means we are moving beyond simply asking an AI to perform a task. We are entering an era of collaboration where the AI acts as a true cognitive partner: one that can see what we see, hear what we hear, and respond with the speed and nuance that effective communication requires.

For consumers, free access to GPT-4o in ChatGPT gives everyone an early taste of what future AI assistants will feel like. For businesses, the cheaper, faster GPT-4o API means the integration of advanced AI into products and services will accelerate dramatically, likely leading to a new wave of innovation across nearly every sector.

The takeaway is clear: GPT-4o’s release marked a turning point. The omni model is here, and it is setting the stage for AI interactions that feel less like talking to a machine and more like talking to a highly knowledgeable and responsive human colleague.

Conclusion: Embracing the Omnipresent AI

GPT-4o is not merely an improvement on GPT-4; it’s a paradigm shift in the accessibility and capability of sophisticated AI. By unifying text, audio, and vision processing into a single, high-speed omni model, OpenAI has created one of the most fluid and powerful conversational AI tools available to the public.

The commitment to making core GPT-4o features available for free ensures that the benefits of this latest AI technology are not limited to a select few. Whether you are using the new ChatGPT desktop app for quick screen analysis, leveraging the GPT-4o voice mode for real-time translation, or simply enjoying the unprecedented speed in your daily queries, GPT-4o defines a new benchmark.

The future of AI assistants is fast, multimodal, and accessible. Start exploring how to use GPT-4o today and experience a major step toward more human-like interaction with AI.

FAQs: Your Questions About OpenAI GPT-4o Answered

Q1. What is GPT-4o?

GPT-4o is OpenAI’s newest flagship large language model (LLM), where the ‘o’ stands for “omni.” It is a unified multimodal AI model designed to natively process and generate outputs across text, audio, and vision, significantly enhancing speed and conversational fluidity compared to previous models like GPT-4.

Q2. Is GPT-4o truly free, or do I need a ChatGPT Plus subscription?

Core GPT-4o features are available to all users on the free ChatGPT tier, subject to a message cap. This includes GPT-4o’s faster intelligence, its text and vision processing, and the ChatGPT desktop app. Paid users (Plus, Team, Enterprise) receive higher message caps and priority access to new features like the low-latency voice and video modes.

Q3. How does GPT-4o vs GPT-4 compare in terms of speed?

GPT-4o is dramatically faster. The previous Voice Mode, which chained separate models around GPT-3.5 or GPT-4, averaged 2.8 to 5.4 seconds per response, while GPT-4o responds to audio in as little as 232 milliseconds (320 ms on average), making real-time AI conversation feel natural. In the API, GPT-4o is also twice as fast as GPT-4 Turbo at half the cost.

Q4. What are the key new GPT-4o features announced in the OpenAI Spring Update 2024?

The key features include native multimodal capabilities (seamless integration of text, audio, and vision in one model), an ultra-low-latency GPT-4o voice mode suited to real-time translation, and advanced vision capabilities that let it analyze complex data charts or real-world scenes almost instantly.

Q5. When was the official GPT-4o release date?

GPT-4o was officially announced during the OpenAI Spring Update 2024 event on May 13, 2024. Its text and image capabilities began rolling out in ChatGPT to both free and paid users that day, while the new voice mode was scheduled to follow later, starting with Plus subscribers.

Q6. Can GPT-4o analyze live video or only still images?

While the initial public rollout focuses on text and image inputs, the underlying omni model is capable of processing video. OpenAI’s GPT-4o demos included live video analysis, such as coaching a user through a presentation or explaining a live scene, which suggests these advanced visual interaction features will roll out progressively, starting with paid tiers.

Q7. Is GPT-4o considered “intelligence on par with humans”?

GPT-4o moves closer than any preceding widely available model to human-level interaction, especially in the speed and nuance of conversation. Its low latency and its ability to maintain context across modalities (text, voice, vision) create a more natural human-computer interaction experience. AGI (Artificial General Intelligence) remains a goal rather than a reality, but GPT-4o represented the state of the art in AI at its 2024 release.

Q8. What does “omni model” mean in the context of GPT-4o?

The term “omni model” refers to the architecture of OpenAI GPT-4o, meaning it was trained end-to-end as a single neural network that processes all input modalities (text, audio, vision) simultaneously, rather than relying on a chain of separate, specialized models. This unified approach is what allows for the dramatic speed and capability improvements.