GPT-4o: The Multimodal AI Revolution is Here

Introduction: The Dawn of Truly Conversational AI

The world of artificial intelligence moves fast. Just when users were getting comfortable with the groundbreaking power of GPT-4, OpenAI dropped an announcement that fundamentally changes the landscape: GPT-4o.

Dubbed the “omnimodel” (where “o” stands for omni), GPT-4o represents a seismic shift toward truly multimodal AI. It’s not just an incremental update; it’s a complete reimagining of how humans interact with intelligent systems. Where previous models handled text, audio, and vision through separate pipelines, GPT-4o integrates all modalities natively, delivering unprecedented speed, emotional understanding, and seamless real-time interaction.

As OpenAI’s newest model, GPT-4o has accelerated the AI technology trends of 2024, pushing artificial intelligence toward sophisticated conversational agents that feel less like software and more like a helpful colleague or friend. If you’re wondering what GPT-4o is and how it affects everything from daily tasks to professional development, you are in the right place.

In this comprehensive guide, we will dive deep into ChatGPT-4o’s features, compare GPT-4 and GPT-4o head to head, explore the model’s impact on developers and consumers alike, and explain how to use GPT-4o right now, including through ChatGPT’s free tier. Prepare to meet the next-generation AI model that is already redefining human-computer interaction.

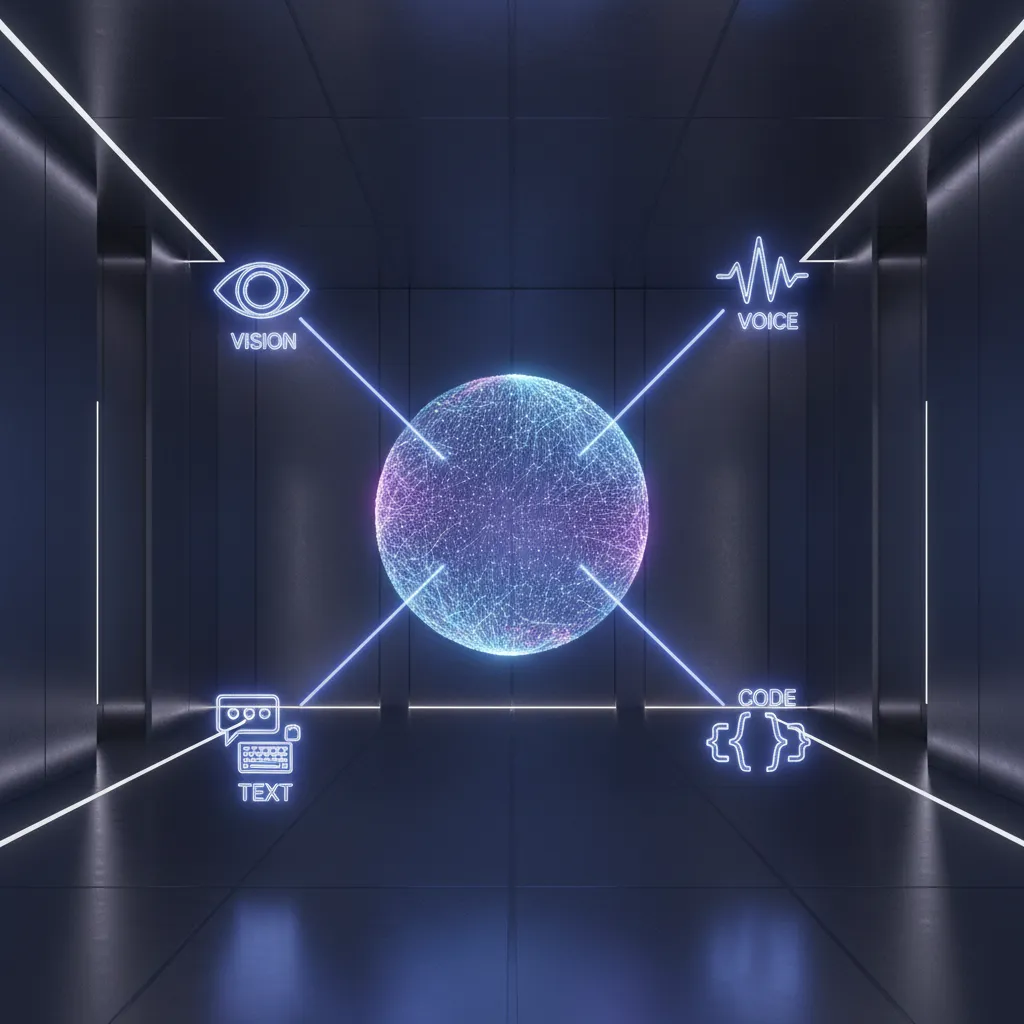

Understanding the “O” in GPT-4o: Multimodal Mastery

To grasp the revolution, we must first understand the core concept: multimodal AI.

The Shift from Pipeline to Native Multimodality

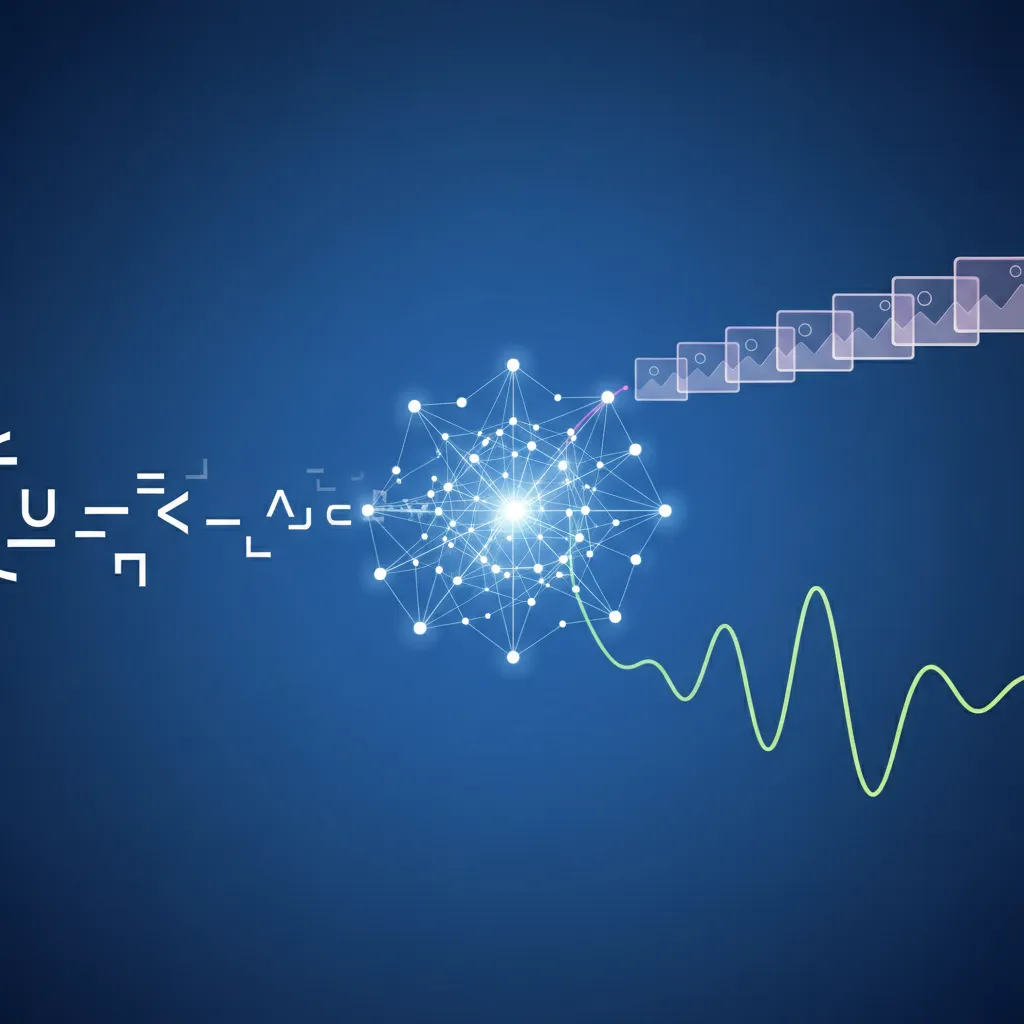

In the previous generation of models, including standard GPT-4, different modalities (like voice or vision) were often processed sequentially.

- Voice Input: Audio was transcribed into text by a smaller model.

- Text Processing: GPT-4 processed the text query.

- Voice Output: The resulting text was synthesized back into speech using a separate text-to-speech model.

This process introduced latency, leading to unnatural, delayed interactions. The model couldn’t genuinely listen to tone or interpret visual cues mid-conversation.
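The tone problem is easy to see in a toy model. The sketch below is purely illustrative and is not OpenAI’s implementation. `AudioClip`, `transcribe`, `text_model`, `synthesize`, and `native_model` are hypothetical stand-ins. The only point is that each pipeline stage hands plain text to the next one, so anything the words do not capture gets lost.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical audio: the spoken words plus a label for the speaker's tone."""
    words: str
    tone: str  # e.g. "frustrated", "excited", "neutral"


def transcribe(clip: AudioClip) -> str:
    # Speech-to-text stage: only the words survive; the tone is discarded here.
    return clip.words


def text_model(prompt: str) -> str:
    # Text-only stage: it can react only to what is in the string it receives.
    return f"Here is an answer to: {prompt}"


def synthesize(text: str) -> AudioClip:
    # Text-to-speech stage: always speaks in one default tone.
    return AudioClip(words=text, tone="neutral")


def cascaded_pipeline(clip: AudioClip) -> AudioClip:
    """Three separate stages chained together, as in the older Voice Mode."""
    return synthesize(text_model(transcribe(clip)))


def native_model(clip: AudioClip) -> AudioClip:
    """Toy stand-in for one model that receives the audio (words and tone) directly."""
    if clip.tone == "frustrated":
        return AudioClip(words=f"Sorry, that sounds frustrating. Let's look at: {clip.words}", tone="calm")
    return AudioClip(words=f"Here is an answer to: {clip.words}", tone=clip.tone)


question = AudioClip(words="Why won't my code compile?", tone="frustrated")
print(cascaded_pipeline(question).tone)  # neutral: the tone never reached the text model
print(native_model(question).tone)       # calm: the reply adapts to the speaker's tone
```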

GPT-4o obliterates this pipeline bottleneck. It is a single neural network trained across text, audio, and vision simultaneously.

“With GPT-4o, we trained a single new model end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network.” (OpenAI)

This integration allows the model to:

- Hear and respond to vocal tone (e.g., detecting frustration or excitement).

- Process video or images in real-time, understanding the context of the visual input as the user speaks.

- Respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, similar to human response time in conversation.

This foundational change makes GPT-4o a new benchmark for AI assistants and for competitors, especially given the ongoing rivalry between OpenAI’s announcements and Google’s at Google I/O.

Key Performance Upgrades: Speed, Cost, and Intelligence

While speed is the most immediate user benefit, the performance gains are comprehensive:

| Feature | GPT-4 Turbo | GPT-4o | Improvement |
|---|---|---|---|
| Voice Response Time (avg.) | ~5.4 seconds (GPT-4 Voice Mode pipeline) | ~0.32 seconds | Near Real-Time |
| API Price (per 1M tokens, at launch) | $10 input / $30 output | $5 input / $15 output | 50% Cheaper |
| Context Window | 128K tokens | 128K tokens | Equal (Massive) |
| Native Modality | Text-focused, separate APIs | Text, Vision, Audio Integrated | Truly Multimodal |
| Availability | Paid (Plus, API) | Free (Tiered Access) | Widely Accessible |

The combination of dramatically faster responses and 50% lower GPT-4o API prices signals a major democratization of advanced AI capabilities, making the model highly attractive to developers building large-scale applications.
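To see what “50% cheaper” means for a real budget, here is a small cost estimate. It uses the launch list prices from the table above. Prices change over time, so check OpenAI’s pricing page before relying on them. The monthly token volumes are a hypothetical workload, not data from any real deployment.

```python
# Launch list prices in US dollars per 1 million tokens (assumption: may have changed since).
PRICES_PER_MILLION = {
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the API cost in dollars for the given monthly token volumes."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 200M input tokens and 50M output tokens per month.
turbo = monthly_cost("gpt-4-turbo", 200_000_000, 50_000_000)
omni = monthly_cost("gpt-4o", 200_000_000, 50_000_000)
print(f"GPT-4 Turbo: ${turbo:,.2f}")       # GPT-4 Turbo: $3,500.00
print(f"GPT-4o:      ${omni:,.2f}")        # GPT-4o:      $1,750.00
print(f"Savings:     {1 - omni / turbo:.0%}")  # Savings:     50%
```

Both input and output prices are halved, so the savings stay at 50% whatever the input/output mix.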

ChatGPT-4o Features: What Can This New Model Really Do?

The leap in capability isn’t just about faster text generation; it’s about the entirely new categories of interaction unlocked by seamless, real-time voice AI and advanced AI vision capabilities.

1. Real-Time Conversational Mastery

GPT-4o’s voice mode is the star of the show. It transcends mere voice command, offering the experience of talking to an incredibly articulate and emotionally aware human.

- Emotional Range: The AI can detect and respond to subtle shifts in your voice—if you sound excited, it can mirror that enthusiasm. If you sound distressed, it can ask how to help. It can even generate responses in different synthesized voices and tones, like singing or speaking dramatically.

- Interruption Tolerance: Unlike older assistants that forced you to wait, GPT-4o handles interruptions gracefully, making for fluid, natural dialogue—a critical component of high-quality conversational AI.

- Live Translation: It can act as a real-time interpreter, listening to two people speaking different languages and translating back and forth as they talk. This feature alone has massive implications for travel, business, and education.

2. The Power of AI Vision Capabilities

The model’s ability to process visual input in real-time opens doors to hyper-practical applications that blur the line between the digital and physical worlds.

Imagine pointing your phone camera at a complex technical diagram, asking the AI to explain it, and having it walk you through the steps verbally while visually annotating the image on your screen.


Practical AI Vision Applications:

- Real-time Assistance: Point your camera at a complicated math problem, and the personal AI assistant guides you through the solution step-by-step.

- Object Identification and Context: Show it a plant, and it instantly identifies it, discusses its history, and advises on care.

- Data Interpretation: Show it a physical chart or graph, and it performs rapid AI data analysis, summarizing trends or predicting outcomes.

- Creative Inspiration: Show it a photograph and ask it to write a poem or song lyrics inspired by the visual elements, which makes it a standout among creative AI tools.

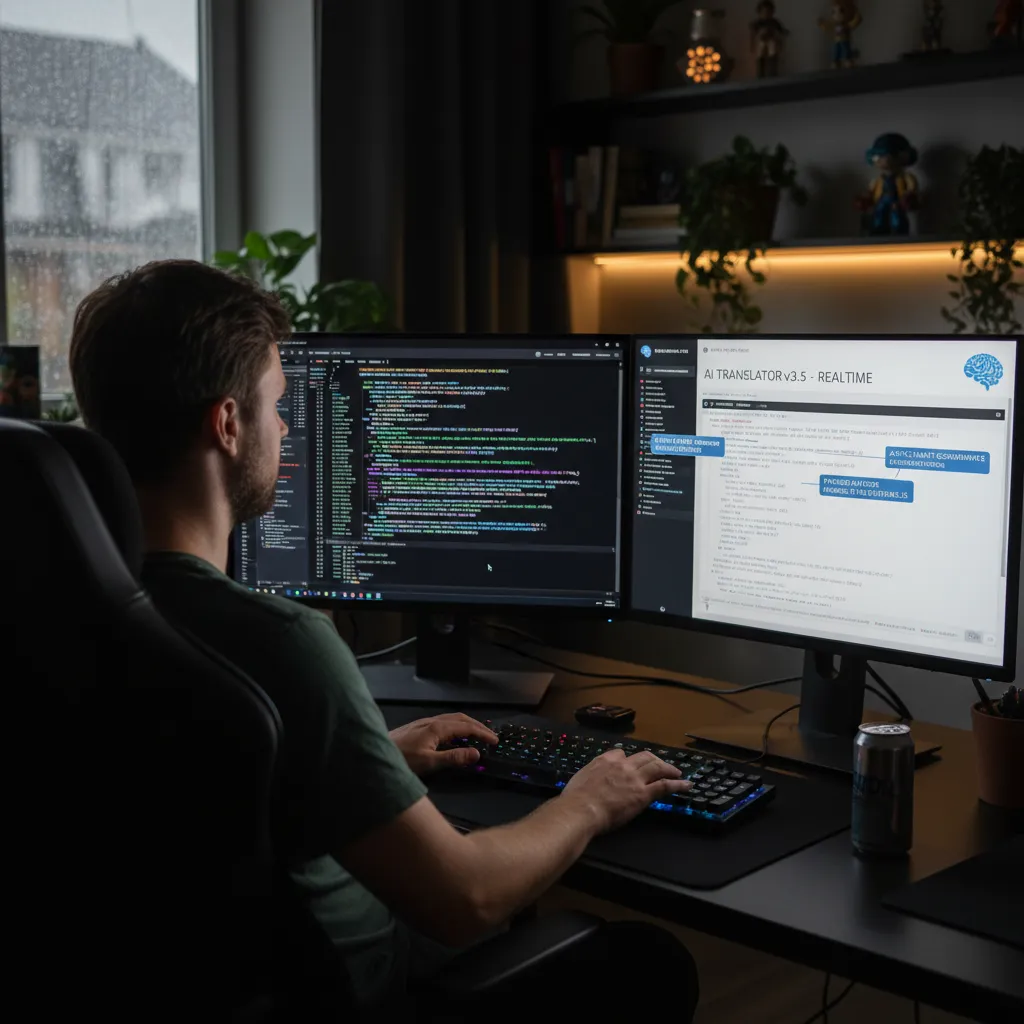

3. Enhanced Text and Coding Prowess

In pure text tasks, GPT-4o matches GPT-4 Turbo on English text and code and improves significantly on non-English languages. Its new tokenizer represents those languages with fewer tokens, so they are processed faster and more cheaply.

For Developers: The GPT-4o API makes the model a valuable tool for coders. It handles complex coding tasks, debugging, and translation between programming languages well, often serving as a highly effective pair programmer.
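For a sense of what a pair-programming request looks like, here is a sketch that builds, but does not send, an HTTP request to OpenAI’s Chat Completions endpoint using only the Python standard library. The system-prompt wording, the `build_review_request` helper, and the placeholder key are illustrative assumptions.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_review_request(code: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request asking GPT-4o to review code."""
    body = {
        "model": "gpt-4o",
        "messages": [
            # Illustrative system prompt; tune it for your own use case.
            {"role": "system", "content": "You are a careful pair programmer. Point out bugs and suggest fixes."},
            {"role": "user", "content": f"Please review this function:\n\n{code}"},
        ],
        "temperature": 0.2,  # a lower temperature keeps answers more focused
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


snippet = "def mean(xs):\n    return sum(xs) / len(xs)"
req = build_review_request(snippet, api_key="sk-placeholder")
payload = json.loads(req.data)
print(payload["model"], len(payload["messages"]))  # gpt-4o 2
# To send it for real: pass a valid key, call urllib.request.urlopen(req),
# parse the JSON response, and read choices[0]["message"]["content"].
```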

[Related: Guardians of the Digital Frontier: AI Revolutionizing Cybersecurity]

For Content Creators: Its ability to generate highly human-like text, combined with its creative vision capabilities, makes it a dominant force in content generation and conceptualization.

GPT-4 vs GPT-4o: The Comprehensive Comparison

Understanding the differences between the predecessor and the successor is crucial for anyone relying on these models for professional work or development.

The Architectural Divide

The central difference is architectural. GPT-4 was a sophisticated transformer model, but its voice capabilities relied on separate speech models layered around it. GPT-4o is a single, cohesive model trained end-to-end that processes the different input types together.

| Aspect | GPT-4 / GPT-4 Turbo | GPT-4o (Omnimodel) | Significance |
|---|---|---|---|
| Multimodal Processing | Pipelined (separate steps) | Native, Single Network | Drastically Reduced Latency |
| Speed | Excellent, but slow in voice | Extremely Fast (Human-level) | Essential for real-time voice AI |
| Emotional Intelligence | Limited (text-only interpretation) | High (interprets vocal tone and visual cues) | Enables true intelligent assistants |
| Cost | Premium pricing | 50% cheaper API usage | Democratizes access |
| Availability | Mostly paid access | Widely available as a free AI tool | Faster adoption and iteration |

The User Experience Shift

The difference between using GPT-4 and GPT-4o is akin to the jump from dial-up internet to fiber optics—the underlying technology enables a fundamentally different user experience.

With GPT-4, tasks like analyzing an image and generating text based on it felt transactional: upload image, receive text. With GPT-4o, the interaction is continuous and immersive: show image, talk about image, receive real-time updates and guidance. This fluency is the engine behind the multimodal AI revolution.
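For developers building this kind of continuous experience, one detail matters. The Chat Completions API is stateless, so the app keeps the conversation history and resends it with every request. That is how a follow-up question can still refer to an image shared earlier. Below is a minimal sketch assuming the Chat Completions message format with `image_url` content parts. The `Conversation` class and its turn cap are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Conversation:
    """Keeps the running message list that a stateless chat API expects on every call."""
    system_prompt: str
    max_turns: int = 10  # hypothetical cap to keep requests small
    messages: list = field(default_factory=list)

    def add_user(self, text: str, image_url: Optional[str] = None) -> None:
        # A user turn can mix text and an image in one content list.
        content = [{"type": "text", "text": text}]
        if image_url:
            content.append({"type": "image_url", "image_url": {"url": image_url}})
        self.messages.append({"role": "user", "content": content})

    def add_assistant(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def request_messages(self) -> list:
        """System prompt plus the most recent turns (two messages per turn)."""
        recent = self.messages[-2 * self.max_turns:]
        return [{"role": "system", "content": self.system_prompt}] + recent


chat = Conversation(system_prompt="You are a helpful visual assistant.", max_turns=2)
chat.add_user("What plant is this?", image_url="https://example.com/plant.jpg")
chat.add_assistant("It looks like a monstera.")
chat.add_user("How often should I water it?")  # "it" only makes sense with the history
msgs = chat.request_messages()
print(len(msgs))  # 4: the system prompt plus three conversation messages
```

Trimming by turn count is the simplest policy. Production apps often trim by estimated token count instead.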


The Impact: AI Applications Across Industries

The capabilities of GPT-4o extend beyond novelty; they fundamentally change operational efficiencies and learning methods across numerous sectors, proving that this is more than just another OpenAI announcement.

1. Revolutionizing Education and Personal Learning

For students and lifelong learners, GPT-4o acts as the ultimate, infinitely patient tutor.

- Personalized Pace: The AI can observe a student solving a problem via the camera, pinpoint where they are struggling, and offer customized hints and explanations rather than just providing the answer.

- Accessibility: Its real-time translation and multimodal input make complex subjects accessible to individuals with diverse learning styles or language barriers. This advances the use of AI in education dramatically.

[Related: AI Classroom Revolution: Personalized Learning and Future Skills]


2. Enhancing Business and Data Analysis

The model’s efficiency and low API cost make it ideal for integration into enterprise tools, driving new machine learning innovations.

- Customer Service: The conversational AI features allow for hyper-realistic chatbots that handle complex customer service issues with emotional sensitivity, reducing call volume and increasing satisfaction.

- Market Analysis: Companies can feed massive datasets, including images of competitor products or transcripts of focus groups, into the GPT-4o API for rapid, comprehensive AI data analysis.
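Even large datasets still have to fit GPT-4o’s 128K-token context window, so long inputs such as focus-group transcripts are usually split into batches. The sketch below does greedy batching. It assumes the common rough estimate of about four characters per English token. A real application would count tokens with a tokenizer instead. The function names and the reserved-token figure are hypothetical.

```python
from typing import List

CONTEXT_TOKENS = 128_000   # GPT-4o context window
RESERVED_TOKENS = 8_000    # hypothetical headroom for instructions and the model's reply
CHARS_PER_TOKEN = 4        # rough approximation for English text


def estimate_tokens(text: str) -> int:
    """Crude token estimate from character count (not an exact tokenizer count)."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def chunk_paragraphs(paragraphs: List[str],
                     max_tokens: int = CONTEXT_TOKENS - RESERVED_TOKENS) -> List[List[str]]:
    """Greedily pack paragraphs into batches whose estimated size stays within max_tokens.

    A single paragraph larger than max_tokens still ends up alone in its own batch.
    """
    chunks: List[List[str]] = []
    current: List[str] = []
    current_tokens = 0
    for para in paragraphs:
        cost = estimate_tokens(para)
        if current and current_tokens + cost > max_tokens:
            chunks.append(current)  # close the full batch and start a new one
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += cost
    if current:
        chunks.append(current)
    return chunks


transcript = ["a" * 400, "b" * 400, "c" * 400]  # three paragraphs of ~100 tokens each
print([len(batch) for batch in chunk_paragraphs(transcript, max_tokens=250)])  # [2, 1]
```

Each batch can then be summarized in its own request, and the partial summaries can be combined in a final call.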

3. Creative and Design Fields

Creative AI tools are moving from static image generation to dynamic, concept-to-execution assistance.

- Design Iteration: A designer can show the AI a sketch and ask, “What if the logo was warmer and used an Art Deco style?” The AI can instantly provide verbal feedback and suggest visual changes.

- Multilingual Content: Generating content that is nuanced and culturally appropriate for dozens of languages simultaneously is now faster and more accessible, leveraging its superior natural language processing capabilities.

[Related: Ethical AI Content Creation: Navigating Bias and Trust]

Getting Started: How to Use GPT-4o Today

One of the most appealing aspects of the OpenAI Spring Update was the commitment to making this powerful technology widely accessible.

1. Accessing ChatGPT-4o as a User

GPT-4o’s capabilities are being rolled out across both free and paid tiers of ChatGPT:

- Free Tier: Free-tier users now get GPT-4o, with its higher speed and improved intelligence, plus advanced features such as data analysis and image uploads (subject to usage limits). This makes GPT-4o one of the most powerful free AI tools on the market.

- ChatGPT Plus/Team/Enterprise: Paid subscribers receive higher usage limits, priority access to new features, and the full extent of the multimodal capabilities, including the full real-time voice and vision modes as they become widely available.

How to Start: Simply log into ChatGPT. If your account has been updated, you will see “GPT-4o” listed as a model option in the dropdown menu.

2. Utilizing the GPT-4o API for Developers

For businesses and developers, the GPT-4o API is the gateway to integrating this next generation AI into custom products and services.

- Cost Efficiency: The halved price relative to GPT-4 Turbo makes large-scale deployments, such as powering high-traffic customer service bots or complex data processing pipelines, significantly more economical.

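- Integrated Endpoints: A single model now handles text and image inputs through the same Chat Completions endpoint, simplifying development and deployment architecture. At launch, OpenAI said GPT-4o’s new audio and video capabilities would come to the API first for a small group of trusted partners.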
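In practice, "one endpoint" means a text-only turn and an image turn go to the same model in the same request format. The sketch below assumes the Chat Completions `image_url` content part with an inline base64 data URL and the optional `detail` setting. The helper names are hypothetical, and the bytes stand in for a real image file.

```python
import base64
import json


def image_message(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """One user message mixing text and an inline image (base64 data URL)."""
    data_url = f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url, "detail": "auto"}},
        ],
    }


def chat_body(messages: list) -> str:
    """JSON body for POST /v1/chat/completions; text-only and image turns share one model."""
    return json.dumps({"model": "gpt-4o", "messages": messages})


fake_png = b"\x89PNG\r\n\x1a\n"  # placeholder bytes standing in for a real chart image
body = json.loads(chat_body([
    {"role": "user", "content": "Summarize last quarter's sales trends."},
    image_message("What trend does this chart show?", fake_png),
]))
print(body["messages"][1]["content"][1]["image_url"]["url"][:22])  # data:image/png;base64,
```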


Key Use Cases for Developers:

- Cross-Platform Integration: Building native mobile apps that use the phone’s camera and microphone to provide real-time, context-aware assistance.

- Automated UX Testing: Feeding video clips of users interacting with a product to the AI and having it analyze, in real-time, the emotional state (via tone and facial expressions) and highlight friction points.

- Advanced Translation Services: Creating enterprise tools that translate complex technical discussions or legal documents in real-time.
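Use cases such as UX testing involve video, but at launch the API accepted images rather than video files. A common workaround is to sample frames from the clip and send them as a sequence of images. The sketch below covers only the sampling arithmetic. Decoding and encoding the frames would need a video library, which is not shown. The function name and the default rates and cap are hypothetical.

```python
from typing import List


def sample_frame_indices(total_frames: int, video_fps: float,
                         samples_per_second: float = 1.0, max_frames: int = 50) -> List[int]:
    """Pick evenly spaced frame indices (e.g. one per second), capped at max_frames."""
    if total_frames <= 0 or video_fps <= 0 or samples_per_second <= 0:
        return []
    step = max(1, round(video_fps / samples_per_second))  # frames between samples
    indices = list(range(0, total_frames, step))
    if len(indices) > max_frames:
        # Too many samples: thin them out evenly so the whole clip is still covered.
        stride = len(indices) / max_frames
        indices = [indices[int(i * stride)] for i in range(max_frames)]
    return indices


# A 10-second clip at 30 fps has 300 frames; one sample per second gives 10 frames.
print(sample_frame_indices(300, 30.0))  # [0, 30, 60, 90, 120, 150, 180, 210, 240, 270]
```

Each selected frame would then be encoded (for example as JPEG) and sent as its own `image_url` part in a single user message, in timeline order.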

The Broader Landscape: GPT-4o in AI Technology Trends 2024

The release of GPT-4o didn’t happen in a vacuum. It solidified several critical AI technology trends 2024 and raised the bar in the competitive landscape.

A. The Race for Real-Time and Efficiency

The biggest trend is the emphasis on near-zero latency. AI is moving out of the realm of asynchronous query-and-wait and into dynamic, synchronous interaction.

- Competitive Pressure: The speed of GPT-4o places intense pressure on competitors like Google and Anthropic to match or exceed this real-time performance, particularly in voice and vision processing. The performance differences often become a main topic in any AI model comparison.

- The Utility Shift: When an AI assistant can provide instantaneous, accurate help, its utility skyrockets. Users stop reserving it for complex, one-off tasks and start relying on it as a persistent, indispensable tool throughout their day.
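On the text side, apps usually make responses feel immediate by streaming. With `stream=True`, Chat Completions sends server-sent events, and each `data:` line carries a chunk whose `choices[].delta.content` holds the next piece of text. The sketch below parses such lines with the standard library. The sample chunks are abridged: real chunks also carry fields such as `id` and `model`.

```python
import json
from typing import Iterable, Iterator


def stream_text(sse_lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from a streamed Chat Completions response (stream=True)."""
    for line in sse_lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            piece = choice.get("delta", {}).get("content")
            if piece:
                yield piece


sample = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant", "content": ""}}]}',
    '',
    'data: {"choices": [{"index": 0, "delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"index": 0, "delta": {"content": "lo!"}}]}',
    'data: {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}',
    'data: [DONE]',
]
print("".join(stream_text(sample)))  # Hello!
```

Displaying each fragment as it arrives lets the user start reading before the full reply is finished.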

[Related: AI Powered Personalized Travel Planning]

B. Safety, Ethics, and Multimodality

As the models become more capable, the discussion around AI ethics and safety becomes even more critical. OpenAI has emphasized that GPT-4o includes extensive safety measures built into its training.

- Guardrails for Vision: Preventing the model from interpreting or generating harmful content based on visual inputs is paramount. This includes sophisticated filtering to manage sensitive scenarios or illegal requests related to human identification.

- Misinformation Control: Because GPT-4o can generate highly convincing multimodal content, including realistic synthetic speech, strong protective mechanisms are needed to prevent the malicious creation of deepfakes or misinformation. At launch, for example, OpenAI limited audio output to a selection of preset voices. OpenAI’s announcements now place heavy emphasis on a controlled, ethical rollout.

C. The Proliferation of Intelligent Assistants

The superior capabilities of GPT-4o bring the era of intelligent assistants much closer to full realization. We are seeing a move away from generalized AI toward specialized, highly capable agents that can handle complex, industry-specific tasks.

For example, imagine a specialized GPT-4o agent designed for financial planning. It could listen to a complex client meeting (audio), analyze financial spreadsheets (vision/text), and instantaneously draft a comprehensive summary and proposed strategy.
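An agent like this typically works through function calling. The app describes the actions it offers as tools, the model replies with structured calls, and the app executes them. The sketch below assumes the Chat Completions `tools` format. The `save_client_summary` tool, its fields, and the in-memory `records` store are hypothetical, and the model's reply is simulated rather than fetched.

```python
import json

# Hypothetical tool offered to the model via the request's "tools" list.
SUMMARY_TOOL = {
    "type": "function",
    "function": {
        "name": "save_client_summary",
        "description": "Store a meeting summary and proposed strategy in the client's file.",
        "parameters": {
            "type": "object",
            "properties": {
                "client_id": {"type": "string"},
                "summary": {"type": "string"},
                "proposed_actions": {"type": "array", "items": {"type": "string"}},
            },
            "required": ["client_id", "summary"],
        },
    },
}


def handle_tool_call(tool_call: dict, store: dict) -> str:
    """Execute one tool call from the model's reply and return a JSON result string."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    if name != "save_client_summary":
        return json.dumps({"error": f"unknown tool {name}"})
    store[args["client_id"]] = {"summary": args["summary"], "actions": args.get("proposed_actions", [])}
    return json.dumps({"status": "saved", "client_id": args["client_id"]})


# Simulated entry from choices[0]["message"]["tool_calls"] in a model response.
fake_call = {
    "id": "call_1",
    "type": "function",
    "function": {
        "name": "save_client_summary",
        "arguments": json.dumps({"client_id": "C-42", "summary": "Client prefers a lower-risk portfolio."}),
    },
}
records = {}
result = handle_tool_call(fake_call, records)
print(result)  # {"status": "saved", "client_id": "C-42"}
# The result goes back to the model as {"role": "tool", "tool_call_id": "call_1", "content": result}.
```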

[Related: Financial Freedom Blueprint: A Guide to Wealth]

Conclusion: GPT-4o and the Future of Interaction

GPT-4o is much more than OpenAI’s newest model; it is a critical milestone in the journey toward the future of artificial intelligence. By natively integrating text, voice, and vision into a single, cohesive model, it greatly reduces the friction that previously separated humans from their AI tools.

This release not only democratizes access to elite machine learning innovations through its generous free tier and reduced API costs but also establishes a new performance benchmark for multimodal AI. Whether you are a developer looking for a cheaper, faster API, an educator seeking better ways to reach students, or an everyday user desiring a truly intuitive personal AI assistant, GPT-4o delivers capabilities that were purely science fiction just a few years ago.

The potential applications are vast. As you begin exploring how to use GPT-4o, remember that you are interacting with a system that sees, hears, and responds in a manner closer to human perception than any model before it. The revolution is indeed here, and it speaks, sees, and understands in real time.

FAQs: Answering Your Questions About GPT-4o

Q1. What is the main difference between GPT-4 and GPT-4o?

The main difference lies in architecture. GPT-4’s Voice Mode chained separate models together (speech-to-text, GPT-4 itself, then text-to-speech), and that pipeline created latency. GPT-4o is a single multimodal AI model trained end-to-end across text, audio, and vision, allowing for real-time interaction, response speeds comparable to human conversation, and better emotional understanding.

Q2. Is GPT-4o a free AI tool?

Yes, many of the advanced features of GPT-4o are available to users on the free tier of ChatGPT, including its superior intelligence, faster response times, and general text/vision capabilities, though paid subscriptions (Plus/Team) still receive higher usage caps and priority access to the newest real-time voice AI features.

Q3. How much faster is GPT-4o in voice mode compared to GPT-4?

GPT-4o is dramatically faster in its voice mode. Where GPT-4’s Voice Mode averaged 5.4 seconds to respond to an audio prompt, GPT-4o can respond in as little as 232 milliseconds, with an average of 320 milliseconds (about a third of a second). That is comparable to human response time in conversation and key to its conversational AI appeal.

Q4. Can GPT-4o perform AI data analysis?

Yes. Thanks to its integrated AI vision capabilities, GPT-4o can analyze uploaded images of charts, graphs, and spreadsheets, performing complex AI data analysis and summarizing findings in text or through verbal explanation. Its faster processing power makes this analysis quicker and more efficient.

Q5. Is GPT-4o available for developers via API, and what are the costs?

Yes, GPT-4o is available to developers through the API. At launch, its input and output prices were 50% lower than GPT-4 Turbo’s ($5 vs. $10 per million input tokens and $15 vs. $30 per million output tokens), making it a highly cost-effective option for integrating next-generation AI into large-scale applications and services.

Q6. What languages does GPT-4o support?

GPT-4o matches GPT-4 Turbo on English text and shows significant improvements across many non-English languages. Its new tokenizer represents many of these languages with fewer tokens, which speeds up generation and lowers cost. This enhances its utility for global AI applications and real-time translation features.

Q7. What kind of AI vision capabilities does GPT-4o have?

GPT-4o can process and interpret visual information in real time. This includes identifying objects, describing scenes, analyzing handwritten notes or technical diagrams, and providing step-by-step guidance based on what the camera sees, which makes it an invaluable intelligent assistant in practical, real-world scenarios.