GPT-4o: The AI That Hears, Sees, and Speaks Is Here

Introduction: The Dawn of the Omni-Model AI

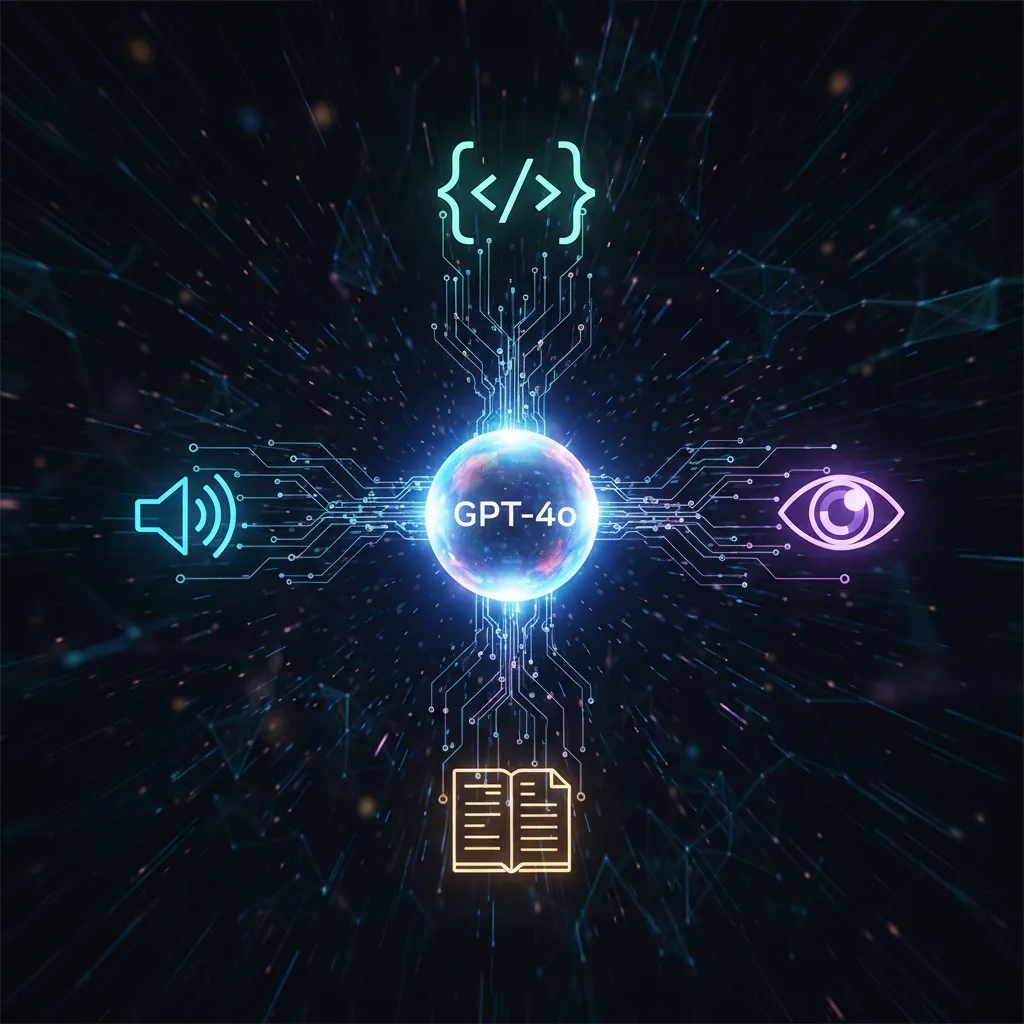

In artificial intelligence, progress is increasingly measured in milliseconds and modalities. For years, we’ve interacted with AI systems that excelled in one area, such as text generation (GPT-3.5) or image recognition (specialized computer vision models). The dream has always been a truly multimodal AI: an intelligent assistant that seamlessly processes and generates human-like output across text, voice, and vision.

That dream moved much closer to reality at OpenAI’s Spring Update on May 13, 2024, with the announcement of GPT-4o, a new OpenAI model that changes the landscape of conversational AI.

GPT-4o (the ‘o’ stands for “omni”) is not just an incremental update to its predecessor, GPT-4. It is an architectural shift. This single, cohesive model processes audio, vision, and text natively, rather than chaining together separate specialized models. The result is a substantial leap in speed, capability, and the human-like quality of interaction.

This post explains what GPT-4o is, walks through its main features, compares it with GPT-4, shows how to start using it, and considers what an omni-model means for the future of artificial intelligence. If you’ve ever imagined talking to an AI that picks up on nuance, emotion, and context in real time, this is the closest any mainstream assistant has come.

The “O” is for Omni: Understanding GPT-4o’s Architecture

The term “multimodal” has been thrown around for a while, but GPT-4o redefines it. Previous AI models handled different inputs sequentially. For example, when you used the previous ChatGPT voice mode:

- Audio was transcribed to text by a separate speech-to-text model.

- The text was processed by the LLM (e.g., GPT-4).

- The text output was converted back into audio by a separate text-to-speech model.

This complex pipeline introduced significant latency (delays) and degraded subtle contextual information, particularly tone and emotion.
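The three-stage pipeline described above can be sketched in a few lines of Python. This is a toy illustration, not OpenAI’s implementation: the `AudioClip` type, the stage functions, and the per-stage delays are all hypothetical stand-ins, chosen only to show how delays add up and where tone is lost.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class AudioClip:
    """Toy stand-in for recorded speech: the words plus how they were said."""
    words: str
    tone: str  # e.g. "frustrated" or "cheerful"


# Per-stage delays in seconds. Made-up illustrative values,
# not measurements of any real system.
STAGE_DELAY_S = {"speech_to_text": 0.5, "llm": 1.0, "text_to_speech": 0.5}


def speech_to_text(clip: AudioClip) -> str:
    # Only the words survive transcription; the tone is discarded here.
    return clip.words


def llm(prompt: str) -> str:
    # Placeholder for the language model: it only ever sees plain text.
    return f"You said: {prompt}"


def text_to_speech(text: str) -> AudioClip:
    # The synthesizer never saw the speaker's tone, so it uses a default.
    return AudioClip(words=text, tone="neutral")


def chained_voice_mode(clip: AudioClip) -> Tuple[AudioClip, float]:
    """Run the three stages in order and add up their delays."""
    reply = text_to_speech(llm(speech_to_text(clip)))
    total_delay = sum(STAGE_DELAY_S.values())
    return reply, total_delay


reply, delay = chained_voice_mode(AudioClip("my code is broken again", "frustrated"))
print(reply.tone, delay)
```

The speaker’s frustration never reaches the language model, and the reply comes back in a default tone after the sum of all three delays. A single end-to-end model removes both the hand-offs and the information loss.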

GPT-4o changes this. It is a single, unified neural network trained end-to-end across text, vision, and audio. When you speak to GPT-4o, your voice is no longer stripped down to plain text; the model processes the audio directly, including pitch, cadence, and emotional tone, making the response far more contextually rich and expressive.

This omni-model AI architecture means the model can observe, listen, speak, and write with near-human speed and coherence.

The Breakthrough of Real-Time, Expressive Communication

The most immediate and stunning change is the response speed. Latency is the enemy of natural conversation. Before GPT-4o, Voice Mode took an average of 2.8 seconds to respond when backed by GPT-3.5 and 5.4 seconds when backed by GPT-4. Delays of that length are noticeable and unnatural.

GPT-4o drastically cuts this down. According to OpenAI, it can respond to audio inputs in as little as 232 milliseconds (ms), with an average of 320 ms. For context, that is similar to human response time in a conversation. This level of real-time responsiveness makes the interaction feel fluid, engaging, and genuinely human-like.
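To put the two figures quoted above in proportion, here is a quick back-of-the-envelope calculation. It uses only the 2.8-second and 232 ms numbers from the text; the variable names are just for illustration.

```python
# Figures quoted in the text above.
OLD_VOICE_MODE_S = 2.8  # seconds, earlier Voice Mode
GPT_4O_MS = 232         # milliseconds, GPT-4o audio response

gpt_4o_s = GPT_4O_MS / 1000             # convert to seconds
speedup = OLD_VOICE_MODE_S / gpt_4o_s   # how many times faster
saved_s = OLD_VOICE_MODE_S - gpt_4o_s   # time saved per conversational turn

print(f"{speedup:.1f}x faster, {saved_s:.3f} s saved per turn")
```

That works out to roughly a twelvefold reduction in waiting time per turn, which is the difference between a conversation and a series of pauses.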

The model is not just fast; it’s also highly expressive. It can shift between distinct speaking styles (dramatic, robotic, empathetic, or even singing) based on the conversational context, a critical step towards creating truly empathetic and effective next-generation AI assistants.

GPT-4o Feature Highlight: Expressiveness. GPT-4o can detect subtle cues like sarcasm, hesitation, or excitement in a user’s voice and tailor its response accordingly, a capability that was almost non-existent in earlier versions.

An AI robot and a human having a natural, face-to-face conversation in a cafe, illustrating the advanced conversational abilities of GPT-4o.

Vision Unlocked: The AI That Can Truly See

While audio speed defines how the interaction feels, the new vision capabilities define its utility. GPT-4o’s visual processing is integrated into the same core model, so it understands and reasons about images faster and more capably than the earlier chained approach.

Imagine holding up your phone and asking the AI a question about what it sees. This isn’t just basic object recognition; this is deep, contextual understanding:

- Real-time Analysis: Hold up a complex graph or a financial chart, and GPT-4o can analyze the data and explain the trends instantly.

- Contextual Problem Solving: Point the camera at a challenging algebraic equation on a whiteboard. GPT-4o won’t just tell you the answer; it can work through the steps, explain the method, and tell you when the handwriting is too hard to read. This makes it a strong tool for guided problem solving.

- Guidance and Support: Fixing a broken pipe? The AI can observe the parts, identify the likely problem, and verbally guide you through the repair step by step, drawing on what it knows from manuals and instructional material.

This profound integration of sight and language elevates GPT-4o from a sophisticated chatbot to a comprehensive digital partner, and makes it one of the most capable examples to date of an AI that can both see and talk.
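For developers, the same kind of image question can be sent programmatically. The sketch below assumes the official `openai` Python package and its Chat Completions endpoint with the `gpt-4o` model name; the helper names `build_vision_messages` and `ask_about_image` are hypothetical and exist only for this example. The network call is kept in a separate function so the message-building step can be tried without an API key.

```python
import base64


def build_vision_messages(question: str, image_bytes: bytes,
                          mime: str = "image/png") -> list:
    """Package a question and an image as a single user message."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            # The image travels inline as a base64 data URL.
            {"type": "image_url",
             "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }]


def ask_about_image(question: str, image_path: str) -> str:
    """Send the question and image to the model and return its text reply.

    Assumes `pip install openai` and an OPENAI_API_KEY environment variable.
    """
    from openai import OpenAI  # imported lazily so the helper above works offline

    with open(image_path, "rb") as f:
        messages = build_vision_messages(question, f.read())
    client = OpenAI()
    response = client.chat.completions.create(model="gpt-4o", messages=messages)
    return response.choices[0].message.content


# Offline demonstration: build the payload from a few placeholder bytes.
messages = build_vision_messages("What trend does this chart show?", b"\x89PNG")
print(messages[0]["content"][0]["text"])
```

With a real file, a call such as `ask_about_image("What trend does this chart show?", "chart.png")` would return the model’s answer as a string, where `chart.png` is a placeholder path.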

GPT-4o vs. GPT-4: Speed, Cost, and Accessibility

When OpenAI ships a new model, the first question everyone asks is: Is it really better? For GPT-4o versus GPT-4, the answer is a resounding yes, specifically because of performance and accessibility gains.

| Feature | GPT-4o | GPT-4 Turbo | GPT-3.5 | Implications |
|---|---|---|---|---|
| Architecture | Native Omni-Model (Text, Audio, Vision) | Chained Models (Text core, separate Audio/Vision) | Text-Only (Primarily) | Superior context and speed across modalities. |
| Speed (Text/Code) | 2x Faster than GPT-4 Turbo | Fast | Standard | Immediate productivity boost. |
| Voice Response Latency (Avg.) | 320 ms (as low as 232 ms) | ~5.4 seconds (Voice Mode with GPT-4) | ~2.8 seconds (Voice Mode with GPT-3.5) | Enables natural, real-time conversation. |
| API Pricing (Input Tokens) | 50% Cheaper than GPT-4 Turbo | Standard | Lowest | Significant cost savings for developers. |
| Multimodality | Best-in-Class Integrated | Advanced, but slower | Minimal/None | Unlocked capabilities for complex tasks. |
| Accessibility | Available on the Free Tier | Paid (Plus/Team) | Free | Democratization of powerful AI. |
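To see what the 50% input-token discount in the table means for a budget, here is a small worked calculation. The baseline price and the monthly token volume are hypothetical placeholders, not quoted prices; substitute the current numbers from OpenAI’s pricing page.

```python
def monthly_input_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Cost in USD of the input tokens alone for one month."""
    return tokens_per_month / 1_000_000 * price_per_million


# Hypothetical inputs for illustration only.
BASELINE_PRICE = 10.00   # USD per 1M input tokens (placeholder, not a quoted price)
DISCOUNT = 0.50          # "50% cheaper", as stated in the table
TOKENS = 250_000_000     # input tokens per month (placeholder workload)

baseline = monthly_input_cost(TOKENS, BASELINE_PRICE)
discounted = monthly_input_cost(TOKENS, BASELINE_PRICE * (1 - DISCOUNT))

print(f"baseline:   ${baseline:,.2f}")
print(f"discounted: ${discounted:,.2f}")
print(f"saved:      ${baseline - discounted:,.2f}")
```

Because the discount is a flat percentage, the savings scale linearly with volume: whatever the real price is, a workload dominated by input tokens costs about half as much.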

Performance Benchmarks That Redefine Latency

The gains aren’t just subjective; they are quantifiable. In tests, GPT-4o achieves state-of-the-art results across various modalities:

- MMLU (Massive Multitask Language Understanding): GPT-4o maintains the high-level reasoning and knowledge retention that made GPT-4 a leader, proving it did not sacrifice intelligence for speed.

- Audio Recognition: In multilingual speech tasks, GPT-4o significantly outperforms older models, especially when transcribing noisy or complex language patterns.

- Vision Reasoning: Its ability to interpret visual data in context is a clear step forward. It excels at tasks requiring cross-modal reasoning (e.g., “What is the mood of the person in this photo, and how should I respond?”).

This combination of speed and intelligence makes GPT-4o a foundational piece of AI technology in 2024.

Democratizing Power: Free ChatGPT-4o Access

Perhaps the most significant strategic move by OpenAI, especially given the competition (such as the announcements Google made at Google I/O the same week), is the decision to offer GPT-4o in ChatGPT for free.

While paid subscribers (Plus, Team, Enterprise) receive higher usage limits, all free users now have access to the intelligence of GPT-4o for standard text and image tasks. This move effectively closes the gap between the free tier and the cutting-edge of AI.

The implication is profound: one of the most capable AI models to date is now available to anyone, instantly boosting the quality of daily interactions for millions of users worldwide. This commitment to broad access cements OpenAI’s place in the market and accelerates global AI adoption.

Practical Applications of GPT-4o: Beyond the Hype

The true measure of any technological breakthrough is its utility. GPT-4o’s unique fusion of speed, vision, and real-time audio opens up entirely new categories of use cases, moving AI from a sophisticated tool to a genuine co-pilot in daily life.

The Next-Generation AI Voice Assistant

The latency drop transforms the voice mode experience. Instead of feeling like you’re waiting for a computer, the interaction feels like a genuinely natural, back-and-forth chat.

Key Voice Mode Use Cases:

- Live AI Translation: Need to speak with someone who doesn’t share your language? GPT-4o can act as a live interpreter, listening in real time, translating both ways, and even carrying over emotional tone, making international conversations far smoother.

- Emotional Coaching: The model’s ability to detect emotion allows it to adjust its feedback. If you sound frustrated while trying to write a complex email, the AI can shift to a more encouraging tone or suggest taking a break, which is what an empathetic voice assistant should do.

- Hands-Free Productivity: Dictate complex instructions, summarize lengthy documents, or draft emails while driving or cooking. The real-time feedback eliminates the awkward pauses that plagued older systems.

Enhancing Productivity and Problem Solving

The combination of text, vision, and speed creates powerful new productivity pipelines, especially in professional environments.

Real-Time Multimodal Multitasking

Imagine a business meeting where you share your screen with GPT-4o. As the sales data is presented on a chart, you can verbally ask, “Why did this metric drop in Q3?” GPT-4o processes the visual data (the chart) and the spoken query together, and can respond almost instantly with a concise answer that combines what the chart shows with background knowledge about possible causes.

A split-screen image showing GPT-4o translating a live conversation on one side and providing real-time data analysis on a chart on the other, demonstrating its multitasking power.

Pair Programming and Coding

For developers, GPT-4o accelerates the iterative coding process. Its enhanced vision allows it to debug code displayed on a screen, and its superior text generation provides faster, more accurate code snippets and documentation. This is especially helpful for complex operations requiring rapid feedback.

A programmer and GPT-4o on a large monitor pair-programming, with the AI suggesting code snippets and debugging in real-time.

AI for Desktop: A Seamless Digital Partner

The new native desktop application (available for macOS at launch, with a Windows version planned) lets you bring GPT-4o directly into your workflow. You can hit a shortcut, ask a question, share an image from your screen, or start a voice conversation without switching applications. This level of integration turns the AI from a web tool into a persistent desktop co-pilot.

Deep Dive into Multimodal Mastery

To fully appreciate the scope of the new model, it’s important to look at how different modalities are now intertwined, leading to better outcomes in areas like natural language processing and complex reasoning.

Enhanced Contextual Awareness

Because GPT-4o processes all inputs through a single model, it maintains a deeper, more unified understanding of the context.

Consider a user showing the AI a picture of their garden (vision) and asking, “Why do my tomatoes look sickly?” (text/voice).

- Old Model: One component describes the image, another interprets the question, and the two are connected only afterwards. The process is slow and often misses nuances.

- GPT-4o: Processes the visual information (e.g., yellow leaves, small black spots) simultaneously with the query. It might immediately infer the user’s emotional state (frustration) and respond with an empathetic tone while giving a precise diagnosis like “early blight,” followed by visual confirmation and care instructions.

This holistic approach results in not only more accurate diagnoses but responses that are delivered in a format and tone that maximize user understanding and comfort.

The Role of Emotional Tone Detection

GPT-4o’s ability to detect emotional tone is a cornerstone of its “human-like” qualities. This isn’t just a parlor trick; it’s a vital component of advanced communication.

In high-stakes scenarios, such as customer support, counseling, or education, the tone of the response is as important as the content. If a customer service AI can detect rising frustration in a user’s voice, it can automatically escalate the ticket, soften its language, and offer solutions rather than boilerplate responses. This raises the standard for intelligent assistants and vastly improves user experience.
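The escalation idea can be sketched as a simple rule. This is an illustrative assumption about how a support system might use tone signals, not a description of GPT-4o’s internals: the per-turn frustration scores (0.0 to 1.0) are assumed to come from some upstream tone detector, and `should_escalate`, its threshold, and its window size are hypothetical.

```python
from typing import List


def should_escalate(frustration_scores: List[float],
                    threshold: float = 0.7,
                    window: int = 3) -> bool:
    """Return True when the last `window` scores are strictly increasing
    and the most recent one has reached `threshold`."""
    if len(frustration_scores) < window:
        return False  # not enough turns yet to call it a trend
    recent = frustration_scores[-window:]
    rising = all(earlier < later for earlier, later in zip(recent, recent[1:]))
    return rising and recent[-1] >= threshold


# Frustration climbing turn after turn: hand the ticket to a human.
print(should_escalate([0.2, 0.4, 0.6, 0.8]))

# High but fluctuating frustration: keep handling it automatically.
print(should_escalate([0.8, 0.6, 0.9]))
```

Requiring a sustained rise rather than a single high reading is a deliberate choice here: it avoids escalating on one sharp remark while still catching a conversation that is steadily going wrong.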

The Road Ahead: Why GPT-4o is Pivotal for the Future of Artificial Intelligence

The release of GPT-4o is a landmark moment, not just for OpenAI, but for the entire trajectory of AI technology in 2024 and beyond. It solidifies the shift toward omni-model architectures, setting a new benchmark for speed and integration.

Setting the Stage for AGI

The push for human-level artificial general intelligence (AGI) requires a system that can process the world the way a human does: through sight, sound, and language, interpreted instantly and cohesively. GPT-4o represents a significant step toward this seamless integration.

By making real-time, multimodal interaction a reality, OpenAI has moved the focus away from simply generating longer, more coherent text and toward enabling sophisticated, moment-to-moment interaction with the digital world. This paves the way for autonomous agents capable of complex problem solving in dynamic, real-world environments.

The Competitive Landscape

The OpenAI Spring Update and the subsequent release of GPT-4o also intensify the competition with other tech giants. As companies like Google push forward with their own models (often showcased at events such as Google I/O), the bar for performance keeps rising. GPT-4o’s emphasis on speed and accessibility pushes competitors to innovate similarly in unified, low-latency, multimodal systems. This arms race ultimately benefits users, driving faster development and richer features in conversational AI.

Ethical and Safety Considerations

As the AI becomes more “human-like,” capable of detecting and mimicking emotion, the ethical implications grow. OpenAI has implemented safeguards, particularly in the most advanced voice mode features, to mitigate risks such as identity impersonation or manipulative use. For example, some highly expressive voice capabilities are being rolled out slowly and deliberately to ensure safety and responsible deployment. This careful approach to scaling human-like features is essential for maintaining trust and compliance.

Conclusion: An AI That Finally Feels Natural

GPT-4o is more than just an iteration; it is a revolution in interaction design. By unifying text, audio, and vision into a single, highly efficient omni-model AI, OpenAI has broken the latency barrier that made previous voice assistants feel mechanical and slow.

Free access to GPT-4o in ChatGPT puts one of the world’s most capable multimodal models in everyone’s hands, accelerating both personal and professional productivity across the globe. Whether you’re a developer using its 50% cheaper API, a student using its vision capabilities to solve a complex math problem, or simply someone having a real-time, expressive voice conversation, GPT-4o fundamentally changes what we expect from intelligent assistants.

The future of artificial intelligence is here, and it looks, sounds, and feels remarkably like a natural conversation partner. Now is the time to try GPT-4o and integrate this next-generation tool into your workflow, moving beyond simple chatbots to truly symbiotic digital collaboration.

FAQs (Frequently Asked Questions)

Q1. What is GPT-4o, and how does it differ from GPT-4?

GPT-4o (where ‘o’ stands for omni) is OpenAI’s newest flagship model designed to be natively multimodal, processing text, audio, and vision inputs and outputs through a single neural network. Unlike the earlier GPT-4 voice experience, which relied on separate models chained together, GPT-4o is dramatically faster (up to 2x for text) and offers superior real-time responsiveness (audio responses in as little as 232 ms, 320 ms on average) along with enhanced emotional and contextual understanding.

Q2. How can I get free ChatGPT-4o access?

GPT-4o’s core text and image capabilities are available on the free tier of ChatGPT. All users can access its high intelligence and speed for general tasks. Paid subscribers (Plus, Team, Enterprise) receive higher message caps and priority access to new features like the advanced GPT-4o voice mode and vision capabilities as they roll out.

Q3. What does “omni-model AI” mean in the context of GPT-4o?

“Omni-model AI” refers to GPT-4o’s unified architecture. It means the model handles all modalities (text, vision, audio) intrinsically from start to finish. For example, when processing audio, it doesn’t just convert it to text; it works on the audio itself, including tone and pitch, which allows for much more expressive and contextual interaction.

Q4. Can GPT-4o perform live AI translation?

Yes, one of GPT-4o’s most exciting features is its enhanced capability for live translation. Because it processes audio in real time with very low latency, it can listen to a conversation between speakers of two different languages and translate back and forth almost instantly, acting as a high-speed interpreter.

Q5. Is GPT-4o available as a desktop application?

Yes, OpenAI released a new native desktop application for macOS, with a Windows version planned. This application allows users to interact with GPT-4o using a simple keyboard shortcut, enabling screen sharing, immediate voice conversation, and seamless integration into everyday desktop workflows.

Q6. How does GPT-4o enhance AI vision capabilities?

GPT-4o’s integrated vision processing allows it to analyze images or live video feeds in real time, linking visual information directly to its natural language understanding. This means it can solve complex visual puzzles, analyze charts, explain technical diagrams, and provide step-by-step instructions based on what it sees, with strong speed and contextual accuracy.

Q7. What major event led to the release of GPT-4o?

GPT-4o was officially unveiled by OpenAI during its Spring Update on May 13, 2024. The launch showcased the company’s latest advances and came just as other major technology players were announcing similar developments, including Google at its Google I/O conference the same week.

Q8. Does GPT-4o detect and respond to emotional tone?

Yes, GPT-4o features sophisticated emotional tone detection. By processing the audio directly (pitch, speed, cadence), the model can identify the user’s emotional state (e.g., happiness, frustration, confusion) and adjust its response tone and content accordingly, contributing significantly to its reputation as a human-like assistant.