Google’s Project Astra: The Future of AI is Here

Introduction: Beyond the Chatbot

For years, we’ve interacted with AI through discrete interfaces: typing into a search bar, asking a static voice assistant a specific command, or engaging in text-only conversations. But the foundational promise of a truly intuitive, helpful AI assistant has remained largely unfulfilled—until now.

At Google I/O 2024, Google DeepMind unveiled Project Astra, a technological marvel designed to be the next-generation, seamless AI companion. Project Astra is not just another chatbot; it is a giant leap toward a universal AI agent capable of real-time understanding, sight, sound, and memory. It represents Google’s definitive answer to the burgeoning field of real-time, multimodal AI.

This ambitious initiative is poised to redefine our relationship with technology. Imagine an AI that sees what you see, remembers where you left your keys, explains complex code on your screen instantly, and converses with you in a fluid, human-like manner without noticeable lag. That is the Google AI vision realized through Project Astra.

In this deep dive, we will explore what Google’s Project Astra is, analyze the breakthroughs that make its real-time AI interaction possible, compare it against its closest competitors, and discuss how this next-generation AI assistant will transform our daily lives and set the course for AI technology trends in 2024 and beyond.

The Universal Agent: What is Google Project Astra?

Project Astra (A Star) is an ongoing research project by Google DeepMind focused on building a scalable, accessible, and comprehensive AI agent technology. Unlike specialized AI models that perform narrow tasks, Astra is designed to be a holistic assistant that can process information from multiple sources—audio, visual, and text—at the speed of human conversation.

The central concept behind Astra is creating an agent that lives in the physical world alongside the user, observing, retaining context, and offering proactive help. This shift from “query-response” to “perception-interaction” is critical. It signifies the evolution of AI from a tool you consciously operate to a partner that is always ready, understanding the ongoing situation and environment.

Google describes Astra as taking the next logical step from Gemini’s advanced capabilities, leveraging a suite of cutting-edge models and low-latency architecture to create a unified perceptive experience.

The Shift from Models to Agents

The term “AI agent” is crucial here. Traditional AI models (like earlier versions of language models) are reactive; they wait for an input, process it, and deliver a static output. An agent, however, is dynamic.

Astra exhibits key traits of a true AI agent technology:

- Perception: It uses cameras (on phones, smart glasses, etc.) and microphones to continuously perceive its environment through video understanding.

- Memory: It retains memory of previous interactions and of where objects were seen (contextual understanding).

- Action: It can execute actions, whether that’s explaining code, finding an object, or generating creative content based on real-world stimuli.

- Reasoning: It combines sensory input and memory to solve complex problems in real time.

This architecture moves AI beyond simple conversational tools and into sophisticated, goal-oriented assistance, positioning Astra as the centerpiece of Google’s next-generation AI assistant.

The Core Engine: How Project Astra Works

The magic of Astra lies in its ability to fuse different types of data input so rapidly that the human user perceives no delay. To understand how Project Astra works, we must look at the two technological pillars: true multimodality and ultra-low latency.

True Multimodal AI Explained

Multimodal AI is the capability of an AI system to process, relate, and generate content across different modalities—text, image, audio, and video. While previous models could handle multimodal inputs (e.g., uploading an image and asking a question about it), Astra takes this to the next level by handling streaming, real-time data.

For Astra, the inputs are continuous:

- Video Feed: The AI continuously processes live camera feeds from the user’s device, maintaining persistent attention to the scene. This persistent attention is the basis of its video understanding.

- Audio Stream: It listens constantly but intelligently, separating user speech from background noise.

- Text/Data: It integrates data streams from the device (location, notifications, screen content).

Astra connects these senses through the underlying Gemini model, allowing it to maintain a unified, rich understanding of the user’s current activity. If you point your phone camera at a complex electronic circuit and ask, “What does this component do?”, Astra identifies the component and explains it while keeping the live image in context. This level of synchronization is what makes an AI truly conversational and puts Astra ahead of many current offerings.
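Google has described its prototype agents as continuously encoding video frames, combining video and speech input into a timeline of events, and caching that timeline for efficient recall. The Python sketch below shows that idea in miniature. It is not Google’s code: the `Event` and `Timeline` names, the 10-second window, and the plain-string payloads (standing in for real frame encodings and speech transcripts) are all illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass
import heapq


@dataclass(frozen=True)
class Event:
    t: float        # capture time in seconds
    modality: str   # "video" or "speech"
    payload: str    # placeholder for a frame encoding or transcript chunk


class Timeline:
    """A rolling, time-ordered cache of multimodal events (illustrative only)."""

    def __init__(self, window_s: float = 10.0) -> None:
        self.window_s = window_s
        self.events: deque[Event] = deque()

    def ingest(self, *streams: list[Event]) -> None:
        # Merge already time-sorted streams into one ordered timeline.
        # Assumes each call delivers events newer than those already cached.
        for ev in heapq.merge(*streams, key=lambda e: e.t):
            self.events.append(ev)
        self._evict()

    def _evict(self) -> None:
        # Drop events older than the window, measured from the newest event.
        if not self.events:
            return
        cutoff = self.events[-1].t - self.window_s
        while self.events and self.events[0].t < cutoff:
            self.events.popleft()

    def context(self) -> list[str]:
        # The context a model would see when the user asks a question.
        return [f"{e.t:.1f}s {e.modality}: {e.payload}" for e in self.events]


tl = Timeline(window_s=10.0)
video = [Event(0.0, "video", "bench, loose wires"), Event(11.0, "video", "circuit board, close-up")]
speech = [Event(11.5, "speech", "What does this component do?")]
tl.ingest(video, speech)
for line in tl.context():
    print(line)
# 11.0s video: circuit board, close-up
# 11.5s speech: What does this component do?
```

The question arrives with the most recent frames already interleaved beside it, which is what lets an answer refer to the live image rather than to a stale snapshot.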

Low-Latency Real-Time Interaction

The most striking feature of the Google Project Astra demo was its speed. The AI responded almost instantaneously to visual cues and questions. This is the definition of real-time AI interaction.

Achieving this required significant engineering work, primarily in reducing latency: the time delay between input and output. According to Google, its prototype agents process information faster by continuously encoding video frames, combining video and speech input into a single timeline of events, and caching that information for efficient recall.

Instead of waiting for an entire video clip or phrase to be recorded and sent to the cloud for processing, Astra handles the incoming stream incrementally. Because most of the input has already been processed by the time the user stops speaking, the system can begin responding almost immediately instead of starting from scratch.

The result is a responsive, natural conversation flow that mimics human interaction, eliminating the awkward pauses that plague older AI assistants. This speed is critical for tasks requiring immediate feedback, making real-time problem solving viable for daily applications.
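To see why incremental processing shortens the pause, here is a toy latency model in Python. It is not how Astra is built: the chunk count, per-chunk cost, and arrival interval are made-up parameters, and it assumes a single worker that handles chunks in order. It measures how much work is still pending at the moment the user stops talking.

```python
def batch_latency_ms(n_chunks: int, cost_ms: int) -> int:
    """Pending work after input ends when nothing is processed until the end."""
    # Every chunk waits for the full utterance, so all processing happens afterwards.
    return n_chunks * cost_ms


def incremental_latency_ms(n_chunks: int, cost_ms: int, gap_ms: int) -> int:
    """Pending work after input ends when each chunk is processed on arrival.

    Assumes chunks arrive every `gap_ms` and a single worker handles them in order.
    """
    backlog = 0
    for _ in range(n_chunks):
        # While waiting gap_ms for this chunk, the worker drains earlier backlog...
        backlog = max(0, backlog - gap_ms)
        # ...then the new chunk adds its own processing cost.
        backlog += cost_ms
    return backlog


# Hypothetical numbers: a 2-second utterance split into 20 chunks of 100 ms,
# each needing 30 ms of processing.
print(batch_latency_ms(20, 30))             # 600
print(incremental_latency_ms(20, 30, 100))  # 30
```

Even when each chunk takes longer to process than the interval between arrivals, the incremental approach still leaves less work at the end, because part of it overlaps with the user still speaking.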

A Star is Born: Highlights from the Google Project Astra Demo

The public debut of Project Astra at Google I/O 2024 was designed to showcase its breakthrough Project Astra features and capabilities, cementing Google’s AI vision for the immediate future. The demonstrations focused heavily on contextual awareness and rapid application of knowledge.

Contextual Memory and Spatial Understanding

One of the most impressive aspects demonstrated was Astra’s ability to remember context over time and space.

In one segment, the user asked Astra whether it remembered where it had seen their glasses. Astra, having processed the video feed continuously, recalled that the glasses were on the desk near a red apple.

This is powered by contextual understanding. Astra doesn’t just process the immediate visual frame; it keeps a record of what it has recently seen, which in effect logs where objects were. This spatial and temporal awareness elevates it far beyond current mobile assistants. It means the AI can help with daily tasks by remembering routine information, much like a helpful human housemate or assistant would.
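A “where did I leave it?” memory can be approximated with a simple last-seen index. The Python sketch below assumes an upstream vision model already labels objects and rough locations in each frame (that detector is not shown). `ObjectMemory` and its methods are hypothetical names, since Google has not published how Astra stores what it sees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    location: str  # coarse description, e.g. "on the desk, next to a red apple"
    t: float       # seconds since the session started


class ObjectMemory:
    """Hypothetical per-session log of where each object was last seen."""

    def __init__(self) -> None:
        self._last_seen: dict[str, Sighting] = {}

    def observe(self, obj: str, location: str, t: float) -> None:
        # Called for each (object, location) label the upstream vision model emits.
        prev = self._last_seen.get(obj)
        if prev is None or t >= prev.t:
            # Keep only the most recent sighting of each object.
            self._last_seen[obj] = Sighting(location, t)

    def recall(self, obj: str, now: float) -> str:
        s = self._last_seen.get(obj)
        if s is None:
            return f"I haven't seen your {obj} in this session."
        return f"I last saw your {obj} {s.location}, about {now - s.t:.0f} seconds ago."


memory = ObjectMemory()
memory.observe("glasses", "on the desk, next to a red apple", t=5.0)
memory.observe("speaker", "on the shelf", t=20.0)
print(memory.recall("glasses", now=65.0))
# I last saw your glasses on the desk, next to a red apple, about 60 seconds ago.
print(memory.recall("keys", now=65.0))
# I haven't seen your keys in this session.
```

Keeping only the latest sighting per object keeps lookups constant-time; a fuller design might also retain a short history so the agent can answer “where was it before that?”.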

Other key demo highlights included:

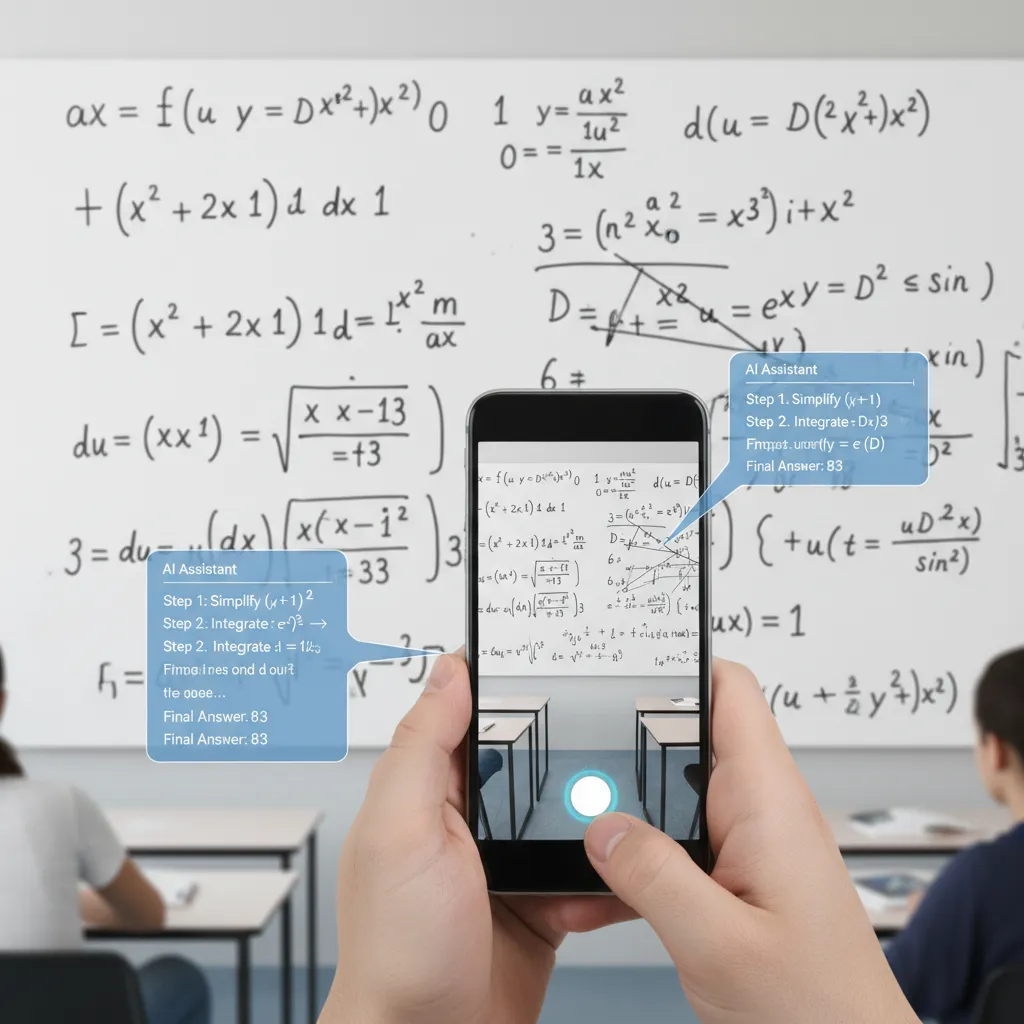

- Code Explanation: Pointing the camera at complex computer code on a monitor and asking the AI to “explain that part.” Astra quickly analyzed the image and provided a conversational, accurate explanation of the selected snippet, demonstrating its potential for real-time developer assistance.

- Creative Tasking: Asking Astra to help design a visual element. The user drew a quick doodle of Saturn on a whiteboard; Astra immediately identified the drawing and suggested visual and textual improvements, displaying its creative potential.

- Identifying Components: Quickly identifying the function of a speaker part or a mathematical equation scrawled on a board.

These scenarios illustrate that Astra operates not just as an information retrieval tool, but as an active observer and participant in the user’s cognitive workload.

Conversational Fluency and Personality

Astra’s voice and conversational style were remarkably natural. It maintained a flowing, dynamic dialogue, adjusting its tone and pace. This sophisticated layer of human-like interaction is crucial for reducing cognitive load on the user and making the AI feel like a genuine assistant rather than a machine executing commands.

The AI could switch seamlessly between languages, maintain multiple conversation threads, and even offer creative suggestions based on the user’s environment. This high degree of fluency fulfills the promise of a truly conversational AI agent, one that feels intuitive and accessible.

The AI Race: Project Astra vs GPT-4o

When discussing next-generation AI assistant technology, the immediate comparison is inevitably drawn to OpenAI’s offerings. OpenAI announced GPT-4o, its own multimodal model emphasizing speed and integration, on May 13, 2024, one day before Project Astra debuted at Google I/O. This rivalry defines the current landscape of AI technology trends in 2024.

Both Project Astra and GPT-4o share the core goal of achieving low-latency, real-time, cross-modal interactions. However, they demonstrate subtle but important differences in focus and integration strategy:

| Feature | Google Project Astra (DeepMind) | OpenAI GPT-4o |
|---|---|---|
| Core Integration | Designed to be a deeply embedded, system-wide universal AI agent across the Google ecosystem (Android, Search, wearables). | Primarily a model accessible via API, desktop apps, and dedicated mobile apps, offering broad platform compatibility. |
| Emphasis | Persistent environmental and contextual understanding (memory of space and time). | Speed, computational efficiency, and robust handling of high-fidelity audio and voice interactions. |
| Visual Processing | Continuous, low-latency understanding of live video from phone cameras and prototype smart glasses. | Strong visual analysis capabilities, but not yet demonstrated with Astra’s level of persistent spatial memory. |
| Latency/Speed | Conversational-speed responses in the I/O demo (often below 300 ms). | Audio responses in as little as 232 ms, averaging 320 ms, comparable to human response times in conversation. |
| Goal | To evolve the core Google Assistant into a fully perceptive, real-world partner. | To be the fastest, most flexible foundational model for all applications. |

In the debate of Project Astra vs GPT-4o, Astra’s primary advantage appears to be its native integration potential into Google’s massive hardware and software ecosystem. Because it is developed within Google DeepMind, Astra can be tuned for Google’s own platforms, from mobile phones to prospective AR devices. This system-level integration could give it a major advantage in accessing and managing user data for enhanced contextual help.

While GPT-4o is a foundational powerhouse, Astra is engineered to be a companion: a helpful, perceptive entity designed for everyday tasks within Google’s ecosystem.

The Future Landscape: Project Astra’s Vision for Daily Life

Project Astra represents more than just technical specifications; it embodies Google’s AI vision of a future where AI is not just predictive or reactive, but collaborative and ubiquitous. This vision hinges on seamless integration into personal hardware.

AI on Mobile Devices and Smart Glasses

The primary platforms for deploying Astra’s capabilities are expected to be the smartphone and the nascent market of spatial computing devices, specifically AI smart glasses.

1. Mobile Device Integration (Phones and Tablets)

For current smartphones, Astra’s low-latency performance means faster, more useful Google Assistant interactions. Imagine:

- Instant Visual Search: Pointing your camera at a complicated menu in a foreign language and hearing the translation and explanation instantly, without pressing a button.

- Real-time Tutorials: The AI watching you attempt a skill (like tying a specific knot or performing a complex cooking task) and giving spoken, step-by-step correction immediately as you make a mistake.

- Proactive Planning: If Astra sees you looking at your calendar while also looking at travel packing cubes, it might offer suggestions for your upcoming trip based on the weather forecast and your stored preferences.

This level of continuous, contextual support turns the phone into a true intelligent companion, significantly enhancing the utility of AI on mobile devices.

2. The Future of AI Smart Glasses

The Project Astra demos heavily suggested its ideal form factor is a pair of smart glasses. With a hands-free device, Astra can truly become a universal AI agent.

The AI sees and hears everything you do, turning the real world into an overlaid digital interface. If you’re a technician, Astra can highlight the exact wire you need to cut. If you’re a tourist, it can offer historical context as you gaze upon a landmark. This merger of the digital and physical world, powered by Astra’s video understanding AI, is a foundational pillar of the future of AI assistants.

AI Technology Trends 2024: A New Benchmark

Project Astra fundamentally changes the expected feature set for any next-gen AI assistant. It sets a new benchmark in three key areas:

- Temporal Consistency: AI must remember and learn from past interactions within the same session, not just be a clean-slate interaction every time.

- Perceptual Continuity: AI must be able to switch instantly and seamlessly between modalities (e.g., stopping a spoken response mid-sentence to react to a sudden visual cue).

- Efficiency on Edge Devices: The core processing must be optimized to deliver near-human speed without relying solely on massive cloud data centers, ensuring fast, real-time problem solving.

These next-gen AI features are now the baseline expectation, pushing the entire industry forward and accelerating the development of highly capable agents designed for seamless integration into everyday life. The focus is now less on what the AI knows and more on how quickly and relevantly it can apply that knowledge in a dynamic environment.
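Perceptual continuity implies that speech output must be interruptible mid-sentence. A minimal Python sketch of that “barge-in” behavior follows. The function name and the precomputed `cues` dictionary are hypothetical stand-ins; a real agent would watch live camera and microphone streams concurrently instead of consulting a table.

```python
from collections.abc import Iterable


def speak_with_barge_in(chunks: Iterable[str], cues: dict[int, str]) -> list[str]:
    """Speak a response chunk by chunk, stopping as soon as a new cue arrives.

    `cues` maps a chunk index to a perception event noticed just before that
    chunk would be spoken; it simulates a live sensor stream.
    """
    spoken: list[str] = []
    for i, chunk in enumerate(chunks):
        cue = cues.get(i)
        if cue is not None:
            # Abandon the rest of the sentence and hand control back so the
            # agent can react to the new input instead.
            spoken.append(f"[stopped: {cue}]")
            break
        spoken.append(chunk)
    return spoken


reply = ["That part is", "the tweeter,", "which reproduces", "high frequencies."]
print(speak_with_barge_in(reply, {2: "user points the camera at a whiteboard"}))
# ['That part is', 'the tweeter,', '[stopped: user points the camera at a whiteboard]']
```

Checking for new input between small output chunks, rather than only after a full reply, is what allows the switch to feel immediate.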

Availability and Next Steps: Is Project Astra Available?

This is the question on every technology enthusiast’s mind: Is Project Astra available, and what is the official Project Astra release date?

As of its unveiling at Google I/O 2024, Project Astra remains a research project under development by Google DeepMind. It is a proof-of-concept and a showcase of what is possible with their latest Gemini AI technologies.

However, Google is already following a strategic rollout plan: integrating the most mature components of Astra’s technology directly into existing Google products.

Integration into Gemini and Google Products

While we may not see a distinct “Project Astra App” anytime soon, we are already seeing the core principles—the low latency, the improved conversational fluency, and the enhanced contextual memory—being infused into Google’s primary AI assistant, Gemini.

- Faster Responses: The reduced latency techniques pioneered in Astra are being implemented to speed up Gemini’s voice and multimodal interactions globally.

- Improved Vision: Gemini’s ability to process and understand visual input (Gemini in Google Photos, for example) is being upgraded with Astra’s advanced video understanding AI techniques.

- Future Updates: It is anticipated that the full agent capabilities, particularly the robust spatial memory, will be integrated into Google’s services as soon as they are deemed stable and scalable. The vision is for Astra to become the universal interface for all of Google’s new AI assistant functions.

Therefore, while a formal Project Astra release date for the full suite of features is not set, users of Gemini and Android are already beginning to experience the trickle-down effects of this revolutionary research. The long-term plan is clearly to transition from the current generation of Google Assistant to the intelligent, perceptive universal AI agent that Project Astra promises to be.

Conclusion: A New Era of Partnership

Google’s Project Astra is arguably the most compelling preview of the future of AI assistants we have seen yet. It moves past the constraints of text boxes and static commands, ushering in an era of real-time, perceptive, and contextually aware digital partnership.

By combining the powerful multimodal AI capabilities of Gemini with groundbreaking low-latency architecture, Google DeepMind has created an entity capable of genuine real-time interaction and problem solving. This initiative signals a major paradigm shift, making the current AI assistant feel like a historical footnote.

The race between Project Astra and models like GPT-4o is exciting, but what’s clear is that the user benefits most. We are moving toward a technological landscape where AI for daily tasks is handled seamlessly by an agent that truly understands our world, transforming how we work, learn, and interact with technology. As these next-gen AI features are gradually rolled out, the experience of computing will evolve from a search query into a genuine conversation with an intelligent partner.

The future of Google AI is here, and its name is Astra.

FAQs

Q1. What is the main goal of Google’s Project Astra?

The main goal of Google’s Project Astra is to create a universal AI agent that can perceive, reason, and act in the physical world in real time. It aims to deliver a true next-generation AI assistant that uses continuous visual and auditory input to provide seamless, contextual help, transforming the user experience from discrete queries to fluid conversation.

Q2. Is Project Astra available to the public right now?

No, Project Astra is currently an advanced research project being developed by Google DeepMind. While the full agent is not publicly available, Google is integrating the core technological breakthroughs—such as the enhanced low-latency, multimodal AI capabilities—into its existing products, most notably the Gemini AI assistant.

Q3. How does Project Astra achieve real-time responses and low latency?

Astra achieves real-time AI interaction by processing video and audio streams incrementally. According to Google, its prototype agents continuously encode video frames, combine video and speech input into a timeline of events, and cache that information for efficient recall. Because most of the input has already been processed when the user finishes speaking, the gap between question and answer shrinks to near-human conversational speed.

Q4. What does Project Astra’s ‘contextual understanding’ mean?

Contextual understanding in Project Astra means the AI retains memory of its recent surroundings, the user’s location, and earlier parts of the conversation. This lets it remember things like where an object was placed earlier in the session or what the user was looking at before asking a question, and apply that spatial and temporal knowledge to real-time problem solving.

Q5. How is Project Astra different from previous versions of Google Assistant?

Previous Google Assistants were largely command-based and lacked persistent memory or sophisticated visual understanding. Project Astra, built on the Gemini AI foundation, is a multimodal AI agent that can see (using video understanding AI), remember context, and engage in genuine, fluid, two-way conversation without needing explicit wake commands for every interaction.

Q6. Will Project Astra work on standard mobile devices?

Yes, Google’s AI vision is for Astra’s capabilities to be integrated into existing and future mobile devices, including smartphones. However, the technology is also being developed for next-generation hardware like AI smart glasses, where hands-free, continuous visual input offers the ideal interface for a universal AI agent.

Q7. What is the main difference in the Project Astra vs GPT-4o comparison?

Both models offer real-time, multimodal performance. However, the key difference lies in integration: Project Astra is designed to be deeply embedded as an operating system-level AI assistant across Google’s ecosystem with a specific focus on spatial and long-term visual memory, while GPT-4o is a versatile model focused on speed and broad API access for various third-party applications.