Combating AI Hallucinations: Building Trustworthy Systems

Introduction

You ask a cutting-edge generative AI model to summarize a recent scientific paper. It confidently delivers a well-written, coherent summary complete with quotes and citations. The only problem? The quotes are fabricated, and the cited sources don’t exist. This phenomenon, known as an AI hallucination, is one of the most significant hurdles standing between generative AI’s potential and its widespread, trusted adoption.

AI hallucinations aren’t psychedelic trips; they are instances where a large language model (LLM) generates false, nonsensical, or factually inaccurate information but presents it as factual. This issue strikes at the core of what we need from these powerful tools: reliability and truthfulness. As we integrate AI into critical applications—from medical diagnostics to financial analysis—combating these false AI information outputs isn’t just a technical challenge; it’s a fundamental requirement for AI safety and building trustworthy AI.

This comprehensive guide will demystify the world of AI hallucinations. We’ll explore why these deep learning hallucinations occur, the risks they pose, and most importantly, provide a multi-layered playbook for developers, businesses, and users on how to prevent AI hallucinations. From advanced prompt engineering to verifiable AI frameworks, you’ll learn the essential strategies for enhancing generative AI accuracy and building the reliable AI systems of the future.

What Exactly Are AI Hallucinations? A Deeper Dive

The term “hallucination” is anthropomorphic, suggesting a human-like failing. In reality, it’s a glitch in the machine’s process. Large language models like GPT-4o or Claude 3.5 Sonnet are incredibly complex neural networks trained on vast oceans of text and data from the internet. Their primary goal is not to know facts but to predict the next most probable word in a sequence, creating grammatically correct and contextually plausible sentences.

A hallucination occurs when this predictive process goes off the rails, leading the model to generate content that is disconnected from its training data or factual reality. Think of it less as a conscious lie and more as an over-enthusiastic improvisation based on learned patterns. The model isn’t accessing a knowledge base; it’s weaving together a tapestry of statistical relationships, and sometimes, the pattern it weaves is a fiction.

The Root Causes: Why Do AIs “Make Things Up”?

Understanding the cause is the first step toward a cure. AI hallucinations are not a single problem but a symptom of several underlying factors related to the model’s data, architecture, and training process.

- Training Data Dilemmas: The model is only as good as the data it learns from. If the training data contains biases, factual errors, or is outdated, the AI will inherit these flaws. Gaps in the data on niche topics can also force the model to “fill in the blanks” by making educated (and often wrong) guesses.

- The Nature of Generative Models: At their core, LLMs are masters of language, not arbiters of truth. Their architecture is optimized for fluency and coherence, which can sometimes come at the expense of AI content accuracy. This is a core challenge impacting AI model reliability.

- Probabilistic Generation (Decoding Strategy): When generating a response, the model calculates probabilities for the next word. Instead of always picking the most likely word (which can be repetitive), techniques are used to introduce randomness for more creative and human-like text. This very randomness can sometimes lead the model down a path of invention.

- Model Overfitting and Underfitting: Overfitting happens when a model learns its training data too well, including its noise and irrelevant details, making it poor at generalizing to new information. Underfitting is the opposite, where the model is too simple to capture the underlying patterns, leading it to make broad, often inaccurate statements.

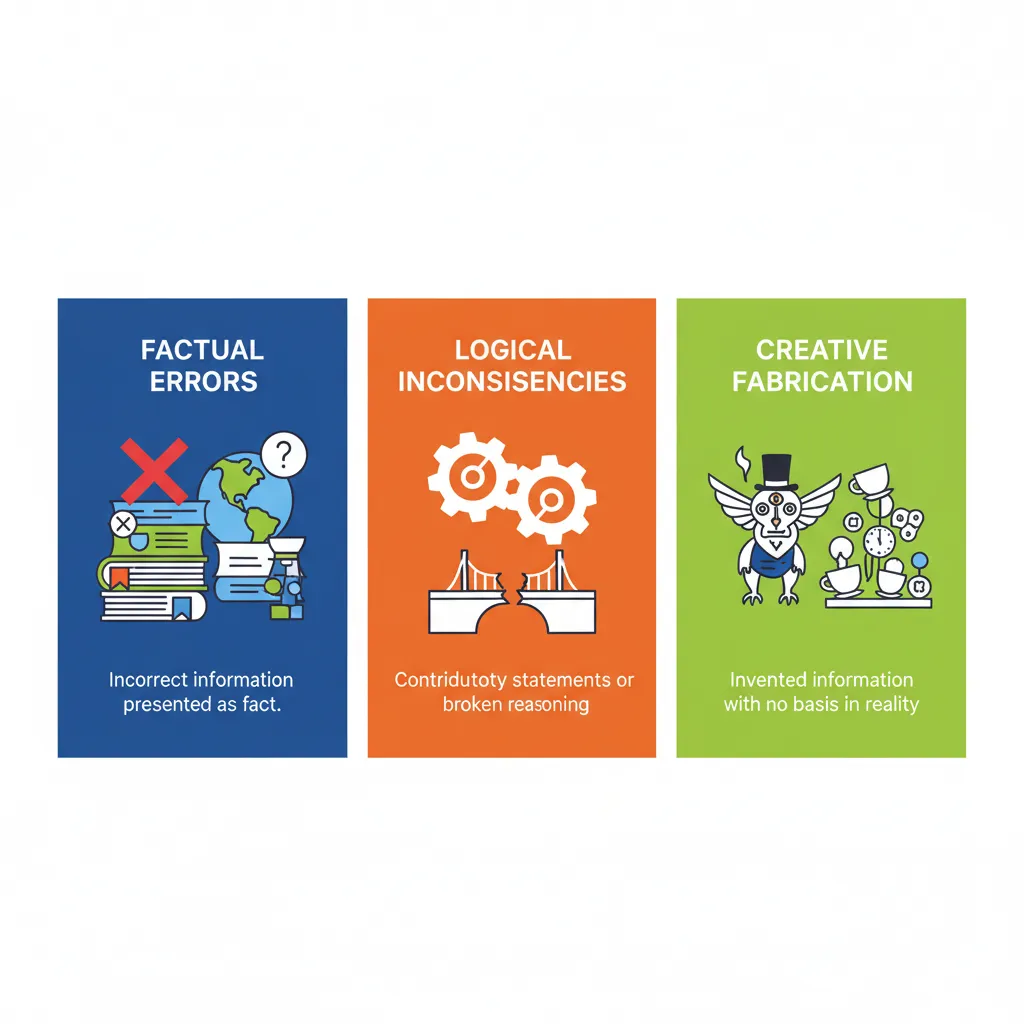

Common Types of AI Hallucinations

AI hallucinations manifest in several ways, from subtle inaccuracies to completely fabricated narratives. Recognizing them is key to AI output verification.

- Factual Inaccuracies: This is the most common type, where the AI presents incorrect dates, statistics, names, or events as fact. For example, claiming a historical event happened in the wrong year.

- Source Fabrication: A particularly dangerous form where the model invents non-existent sources, studies, legal precedents, or quotes to support its claims. This undermines the very concept of verifiable AI.

- Nonsensical or Contradictory Statements: The AI generates text that is grammatically correct but logically incoherent or internally contradictory.

- Contextual Drift: During a long conversation, the model may slowly “forget” the initial context or constraints of the prompt, leading to responses that are irrelevant or deviate significantly from the user’s intent. This is a challenge in maintaining neural network reliability over extended interactions.

The High Stakes: Why AI Hallucinations Are a Critical Risk

While a chatbot inventing a recipe might be harmless, the implications for enterprise AI safety and AI in critical applications are profound. As we move from novelty chatbots to integrated business and societal systems, the cost of false information skyrockets. [Related: Smart Cities: How AI and IoT are Shaping Our Urban Futures].

Imagine a legal AI fabricating case law, a medical diagnostic tool hallucinating patient symptoms, or a financial model basing its predictions on non-existent market trends. The consequences range from financial loss and legal liability to severe risks to human health and safety.

This erosion of AI system trust is a major barrier. If users cannot depend on an AI’s output, its utility plummets. Therefore, AI risk management isn’t just about preventing catastrophic failures; it’s about ensuring the day-to-day AI truthfulness required for meaningful human-AI collaboration.

The Proactive Playbook: A Multi-Layered Strategy for Reducing AI Hallucinations

There is no single “magic bullet” for AI hallucinations. The solution lies in a holistic, multi-layered approach that addresses the entire AI lifecycle, from data ingestion to output verification. This is the core of responsible AI development.

1. Data-Centric Approaches: The Foundation of Factual AI

The principle of “garbage in, garbage out” has never been more relevant. A robust strategy for AI error reduction begins with the data.

- High-Quality Data Curation: The most fundamental step is to train models on clean, diverse, well-vetted, and factually accurate datasets. This involves rigorous processes to filter out misinformation, hate speech, and inherent biases.

- Retrieval-Augmented Generation (RAG): RAG is a game-changing technique. Instead of relying solely on its internal, static training data, a RAG system first retrieves relevant information from an external, trusted knowledge base (like a company’s internal documents or a real-time news API). It then uses this retrieved information as a foundation to generate its response, effectively grounding the AI in a verifiable source of truth. This dramatically reduces fabricated information and makes the AI’s output more factual and current.

- Data Freshness: For applications requiring up-to-the-minute information, models must be continuously updated or connected to live data sources to prevent them from providing outdated facts.

2. Advanced Prompt Engineering for Accuracy

How you ask the question matters just as much as the AI’s ability to answer. Prompt engineering for accuracy is a critical skill for anyone building or using generative AI. [Related: GPT-4o: The Ultimate AI Assistant for Content Creators].

- Provide Context and Constraints: Don’t ask vague questions. Provide as much background information as possible. Tell the model what persona to adopt, what format to use, and, crucially, what not to do (e.g., “Do not invent sources. If you don’t know the answer, say so.”).

- Chain-of-Thought (CoT) Prompting: This technique involves asking the model to “think step-by-step.” By instructing it to lay out its reasoning process before giving the final answer, you can often spot logical flaws and encourage a more rigorous, less “instinctive” response.

- Few-Shot Prompting: Instead of just asking a question (zero-shot), provide a few examples of high-quality question-and-answer pairs within your prompt. This gives the model a clear template to follow, improving the accuracy and format of its output.

3. Model & Output Verification Techniques

Trust, but verify. No matter how advanced the model, a verification layer is essential for building reliable AI systems.

- Human-in-the-Loop (HITL): For high-stakes applications, human oversight is non-negotiable. HITL systems flag uncertain or critical AI outputs for review by a human expert before they are finalized or acted upon. This combines the speed of AI with the judgment of human experts.

- Automated Fact-Checking: The AI’s output can be automatically cross-referenced against external databases, search engines, or trusted sources. If the system finds discrepancies, it can either correct the information or flag it as unverified.

- Confidence Scoring: A major step forward in AI model evaluation is designing models that can communicate their own uncertainty. Instead of giving a confident but wrong answer, a more trustworthy AI would respond with, “I am only 60% confident in this answer, as sources are conflicting.”

- Self-Correction Loops: Advanced frameworks are being developed where a primary AI generates a response, and a second “critic” AI reviews it for errors, biases, and hallucinations. This internal feedback loop can catch and correct mistakes before the output ever reaches the user. [Related: Claude 3.5 Sonnet: A Deep Dive into the New AI Challenger].

The Rise of Explainable AI (XAI) and Verifiable Systems

One of the biggest challenges with deep learning hallucinations is the “black box” nature of neural networks. We often don’t know why a model generated a specific output. Explainable AI (XAI) aims to change that.

XAI solutions are a set of tools and methods that help us peer inside the black box. They can highlight which parts of the input data most influenced the model’s decision, making the AI’s reasoning process more transparent. This AI explainability is crucial for debugging, identifying biases, and building user trust. When a developer can understand the model’s logic, they are better equipped to diagnose and fix the root causes of hallucinations.

Closely related is the push for verifiable AI, where every substantive claim made by the model is linked back to its source data. This allows users to easily check the facts for themselves, transforming the AI from a questionable oracle into a transparent and trustworthy research assistant.

Building a Culture of Responsible AI Development

Technology alone is not the answer. Combating AI hallucinations requires a cultural shift towards responsible AI development and a clear understanding of AI ethics. [Related: Apple Intelligence in iOS 18: A Guide to the New AI Features].

This means:

- Integrating AI Risk Management: AI safety cannot be an afterthought. It must be woven into the entire development lifecycle, from initial concept and data selection to deployment and ongoing monitoring.

- Prioritizing AI Bias Reduction: Hallucinations are often linked to biases in training data. Actively working to identify and mitigate these biases not only makes the AI fairer but also more factually accurate.

- Radical Transparency about AI Limitations: Developers and companies deploying AI have an ethical obligation to be transparent with users about the system’s capabilities and its potential to hallucinate. Managing user expectations is key to preventing the misuse of AI-generated information.

Conclusion: The Future of AI Reliability is a Shared Responsibility

AI hallucinations are a complex and persistent challenge, a fundamental growing pain of a technology that is evolving at a breathtaking pace. However, they are not an insurmountable obstacle. The future of AI reliability depends on a concerted, multi-pronged effort.

By combining high-quality data, sophisticated RAG architectures, disciplined prompt engineering, rigorous output verification, and a culture of responsible development, we can significantly mitigate the risk of hallucinations. The goal is not to build an infallible AI—an unrealistic ambition—but to create trustworthy AI systems that are honest about their limitations, can be verified, and operate safely alongside human experts.

This journey requires diligence from developers, strategic oversight from business leaders, and critical thinking from end-users. Together, we can guide the development of AI away from a generator of convincing fictions and towards a reliable partner in our quest for knowledge and progress. [Related: Quantum Computing: Unlocking the Future of Tech and AI].

FAQs

Q1. What is the main cause of AI hallucinations?

The primary cause of AI hallucinations is that large language models are designed to predict the next most likely word in a sequence to form coherent sentences, not to access and state facts from a knowledge base. This can lead them to generate plausible-sounding but factually incorrect information based on flawed patterns in their training data, gaps in their knowledge, or the inherent randomness in their text generation process.

Q2. Can AI hallucinations be completely eliminated?

Completely eliminating AI hallucinations is currently not possible and may never be, given the probabilistic nature of generative models. However, they can be significantly reduced and managed to the point where the AI is highly reliable. Techniques like Retrieval-Augmented Generation (RAG), rigorous fact-checking, human oversight, and better model training are all effective strategies for AI error reduction.

Q3. What is a real-world example of an AI hallucination?

A well-known example occurred when a lawyer used a chatbot to research legal precedents for a court filing. The AI generated several compelling case citations that looked completely real. However, upon review, it was discovered that the AI had entirely fabricated the cases, the judges, and the quotes, leading to professional sanctions. This highlights the serious risks of deploying AI in critical applications without AI output verification.

Q4. How does Retrieval-Augmented Generation (RAG) prevent hallucinations?

RAG helps prevent hallucinations by grounding the AI’s response in a specific, reliable set of external data. Instead of generating an answer from its vast but static internal memory, the RAG system first retrieves relevant, up-to-date information from a trusted source (like a company’s product manuals or a database of scientific papers). It then uses this retrieved context to formulate its answer, making it far less likely to invent facts.

Q5. Is an AI hallucination the same as AI bias?

No, but they are related. AI bias refers to a model’s tendency to produce results that are systematically prejudiced due to skewed training data (e.g., associating certain jobs with a specific gender). An AI hallucination is the generation of factually incorrect or nonsensical information. However, reducing AI bias can help reduce certain types of hallucinations, as a biased model might hallucinate “facts” that align with its ingrained stereotypes.

Q6. How do you test for AI hallucinations?

Testing for hallucinations involves a process called AI model evaluation. This includes running the model against a “golden dataset” of questions with known, correct answers to check its factual accuracy. It also involves using adversarial testing, where you intentionally try to trick the model with confusing or leading prompts. Finally, human review and feedback are critical for catching nuanced hallucinations that automated tests might miss.

Q7. What is the role of Explainable AI (XAI) in building trust?

Explainable AI (XAI) plays a crucial role in building trust by making the AI’s decision-making process transparent. Instead of just getting an answer, XAI solutions can show you why the AI arrived at that conclusion, highlighting the data points it considered most important. This transparency helps users and developers understand the model’s reasoning, identify potential flaws that could lead to hallucinations, and ultimately build greater AI system trust.